What is computer vision? (machine learning)

What is computer vision?

Computer vision (CV) is a field of machine learning and computer science that helps machines make sense of the world by recognising visual patterns and detecting objects, much as humans do.

The technology is a subfield of artificial intelligence.

To build computer-vision algorithms, engineers use both classical machine-learning methods and deep neural networks, including convolutional neural networks (CNNs).

When did computer vision emerge?

In the late 1960s pioneers of artificial intelligence began to take a closer interest in pattern recognition by computer algorithms. They believed that imitating the human visual system would endow robots with intelligent behaviour.

In 1966 researchers proposed connecting a camera to a computer and making the machine “describe what it sees”, but the technology of the time could not deliver.

Research in the 1970s laid early foundations for many of today’s algorithms, including edge detection, line labelling, motion estimation and more.

The following decade brought more rigorous mathematical analysis and quantitative methods.

By the late 1990s, closer interaction between computer graphics and computer vision spurred major advances, including image-based rendering, view interpolation and panorama stitching.

The decade also saw the first practical use of statistical learning methods for facial recognition.

In the early 21st century, feature-based methods made a comeback, combined with machine learning and complex optimisation frameworks. The real revolution arrived with deep learning, whose accuracy surpassed all existing approaches at the time.

In 2012 the convolutional neural network AlexNet won the ImageNet competition with a top-5 error rate of 15.3%, well ahead of the runner-up. In 2015 the winning network, ResNet, cut the top-5 error to 3.57%. The 2012 result is widely seen as the starting point of modern computer vision.

How does computer vision work?

The mission of computer vision is to teach machines to see and understand their surroundings using digital photos and video. Three components underpin this goal:

- image acquisition;

- information processing;

- data analysis.

Image acquisition turns the analogue world into a digital one. This can involve webcams, digital and DSLR cameras, professional 3D cameras and laser range finders.

Data gathered in this way must then be processed and analysed to extract value.

The next stage is low-level data processing. It detects edges, points and segments—simple geometric primitives in an image.

Processing typically relies on sophisticated mathematical algorithms. Popular low-level methods include:

- edge detection;

- segmentation;

- classification and object detection.

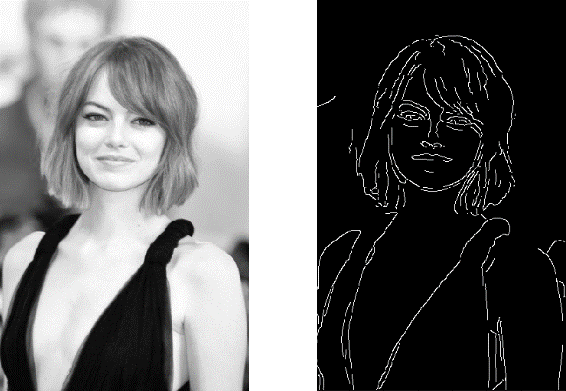

Edge detection encompasses a variety of mathematical methods aimed at identifying points in an image where brightness changes sharply. The algorithm analyses the picture and converts it into a set of curved segments and lines. This highlights the most important parts of an image and reduces the volume of data to process.
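One common edge-detection method is the Sobel operator. The sketch below is a minimal, illustrative version in plain Python; the toy image, the threshold value and the function names are assumptions chosen for the example, and production code would normally rely on an optimised library.

```python
# Minimal Sobel edge detector for a grayscale image stored as a list of rows.
# Illustrative only: the toy image and the threshold are made-up values.

SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1],
           [0, 0, 0],
           [1, 2, 1]]


def sobel_magnitude(image):
    """Return the gradient magnitude for every interior pixel (borders stay 0)."""
    height, width = len(image), len(image[0])
    result = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    pixel = image[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * pixel
                    gy += SOBEL_Y[dy][dx] * pixel
            result[y][x] = (gx * gx + gy * gy) ** 0.5
    return result


def edge_mask(image, threshold=100.0):
    """Mark pixels whose gradient magnitude exceeds the threshold with 1."""
    return [[1 if value > threshold else 0 for value in row]
            for row in sobel_magnitude(image)]


# Toy 5x6 image: dark left half (0), bright right half (255).
toy_image = [[0, 0, 0, 255, 255, 255] for _ in range(5)]
edges = edge_mask(toy_image)
for row in edges:
    print(row)
```

Only the two columns on either side of the dark-to-bright boundary are marked in the interior rows, which shows how the method reduces an image to its outlines.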

Segmentation is typically used to identify the locations of objects and their boundaries. The algorithm assigns a label to each pixel so they can later be grouped by certain characteristics.

The result is a set of segments covering all parts of the image or contours extracted from it.
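A very simple way to illustrate per-pixel labelling is to threshold the image and then group neighbouring bright pixels into connected regions. The sketch below assumes a grayscale image given as a list of rows and an arbitrary threshold of 128; modern segmentation systems usually use trained neural networks instead, so treat this only as a toy model of the idea.

```python
from collections import deque


def label_segments(image, threshold=128):
    """Give every pixel a label: 0 for background, 1..N for each
    connected bright region (4-connectivity)."""
    height, width = len(image), len(image[0])
    labels = [[0] * width for _ in range(height)]
    next_label = 0
    for start_y in range(height):
        for start_x in range(width):
            if image[start_y][start_x] < threshold or labels[start_y][start_x]:
                continue  # background pixel, or already part of a segment
            next_label += 1
            labels[start_y][start_x] = next_label
            queue = deque([(start_y, start_x)])
            while queue:  # breadth-first flood fill of the current region
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and image[ny][nx] >= threshold
                            and labels[ny][nx] == 0):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
    return labels, next_label


# Toy image with two separate bright objects on a dark background.
toy_image = [
    [200, 200, 0, 0, 0],
    [200, 200, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 220, 220],
    [0, 0, 0, 220, 220],
]
labels, count = label_segments(toy_image)
```

Here `labels` has the same shape as the image, and `count` is the number of segments found.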

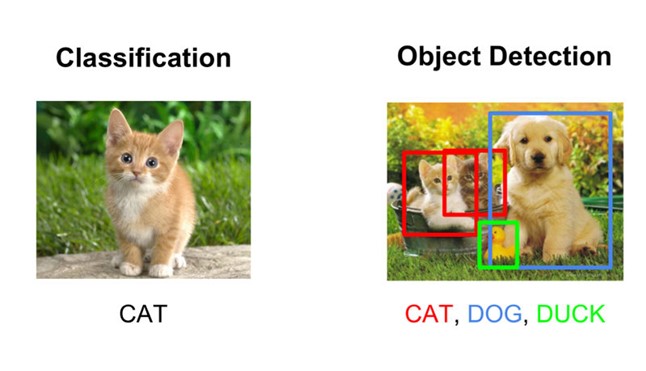

Image classification extracts information about image content. A common example is detecting whether there is a cat in a photo: the model analyses the data and tries to answer that question “yes” or “no”.
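The yes-or-no decision can be sketched with a nearest-centroid classifier, one of the simplest classical methods. Everything here is hypothetical: the two-number feature vectors stand in for whatever an earlier processing stage would produce, and a real system would more likely use a trained CNN.

```python
def centroid(vectors):
    """Average a list of equal-length feature vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def train(examples):
    """examples maps a class name to a list of feature vectors."""
    return {label: centroid(vectors) for label, vectors in examples.items()}


def classify(model, features):
    """Return the class whose centroid lies closest to the features."""
    return min(model, key=lambda label: squared_distance(model[label], features))


# Hypothetical 2-D features for a handful of labelled training images.
training_data = {
    "cat": [[0.9, 0.8], [0.8, 0.9], [1.0, 0.7]],
    "no cat": [[0.1, 0.2], [0.2, 0.1], [0.0, 0.3]],
}
model = train(training_data)
answer = classify(model, [0.85, 0.75])
print(answer)
```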

Classification underpins a more complex task: object detection, which also locates each object in the image. This makes it possible, for example, to tell a cat from a dog and from other known objects in a single image.
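Detectors commonly report each object as a labelled rectangle, and a standard way to compare a predicted rectangle with a reference one is intersection over union (IoU). The sketch below assumes boxes in `(x_min, y_min, x_max, y_max)` pixel coordinates; the box values are made up for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as
    (x_min, y_min, x_max, y_max)."""
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # Width and height of the overlap are clamped at zero for disjoint boxes.
    intersection = max(0, ix_max - ix_min) * max(0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union else 0.0


predicted_cat = (0, 0, 10, 10)   # hypothetical detector output
reference_cat = (5, 5, 15, 15)   # hypothetical hand-drawn annotation
overlap = iou(predicted_cat, reference_cat)
```

An IoU of 1 means the boxes coincide and 0 means they do not overlap at all; the cut-off for counting a detection as correct is a choice made per benchmark.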

Image analysis and understanding is the final step, enabling machines to make decisions. It uses high-level data obtained from the previous stage. Examples include 3D scene reconstruction, recognition and object tracking.

Where is computer vision used?

Computer-vision methods are now used across many fields.

Security

Applications process surveillance-camera streams in real time, recognise objects, detect intrusions into restricted areas, admit vehicles automatically by licence plate, and much more.

Facial recognition

The technology is widely used to authenticate users, from granting access to secure sites to unlocking smartphones.

Recently such systems have drawn criticism from some rights groups and politicians. They argue that the spread of facial recognition threatens civil rights and freedoms and that the technology should be limited.

Self-driving cars

A suite of cameras and algorithms lets a robocar navigate, distinguish moving from static objects and react to their sudden appearance. Today many carmakers, including GM, Toyota, BMW and others, are working on fully autonomous transport.

Tesla has made notable progress with its Autopilot and Full Self-Driving driver-assistance software. It enables a car to regulate speed, recognise traffic lights, road signs and other vehicles, turn at intersections and change lanes. The car can do this without constant driver input, but a driver must remain behind the wheel.

Robotics

Much like in autonomous driving, computer vision helps robots navigate, identify objects and obstacles, and interact with people and things.

There is no universal algorithm that allows smart devices to see and understand any environment. Each robot is trained to perform its specific task.

Augmented reality

AR technologies rely on computer vision to recognise real-world objects. This helps determine surfaces and their dimensions so that 3D models can be placed correctly.

For example, in 2017 IKEA released an app that lets users see how furniture would look in a room through augmented reality. A virtual copy of an item can be viewed from all sides at true-to-life scale.

Motion and gesture recognition

Computer-vision algorithms are used in film production, video games, analysing shopping-behaviour patterns, sports-performance analysis and more.

Image restoration and processing

The technology is used to restore old images, colourise black-and-white photos, upscale videos to 4K and raise rendering resolution in video games.

What are the challenges in computer vision?

Developers face a number of hurdles. One is a lack of source data.

Despite the spread and falling cost of photo and video equipment, data scientists do not always have enough material to train algorithms. Legal restrictions, ethical considerations and geographic barriers can all play a part.

For example, a developer of an algorithm to recognise crop types in farm fields may struggle to collect the necessary photo and video materials to train a high-accuracy model, and end up relying on open sources or third parties.

This leads to another problem: poor-quality training data. This includes low-resolution imagery and dataset errors that strongly affect results.

Data labelling is complex, lengthy and monotonous manual work. People make mistakes, so datasets often contain incorrect labels, partially annotated objects and other artefacts.

In April 2021, researchers at the Massachusetts Institute of Technology found that 5.8% of images in ImageNet—one of the most popular test datasets—were mislabelled. Common mistakes included wrong object names: a mushroom marked as a spoon, or a frog as a cat.

Such slips in test datasets distort how the performance of machine-learning algorithms is measured. The researchers urged AI developers to practise better data hygiene when building models.
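A small arithmetic sketch shows why label errors matter for evaluation. It assumes a hypothetical model that is always right and a 1,000-image test set in which 5.8% of the recorded labels are wrong; the class names are placeholders.

```python
def measured_accuracy(predictions, recorded_labels):
    """Share of predictions that match the labels stored in the dataset."""
    matches = sum(p == r for p, r in zip(predictions, recorded_labels))
    return matches / len(recorded_labels)


true_labels = ["frog"] * 1000
# 58 of 1,000 images (5.8%) carry the wrong label in the dataset.
recorded_labels = ["cat"] * 58 + ["frog"] * 942
# Hypothetical model that identifies every image correctly.
predictions = list(true_labels)

score = measured_accuracy(predictions, recorded_labels)
print(f"{score:.1%}")
```

Even a flawless model scores only 94.2% here, because it is penalised for disagreeing with the mislabelled images.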

Another constraint is computing power. Processing large volumes of media requires expensive, powerful hardware. Cloud services help, but transmitting huge amounts of data demands stable broadband, especially for real-time video.

Edge computing may help. This paradigm processes data where they are collected. Computations can be done on single-board computers such as Raspberry Pi or Nvidia Jetson, as well as on cameras equipped with processors and AI algorithms.

With edge devices, only high-level data are sent to a central server, enabling analytics tools to draw conclusions.

Even so, the concept is far from fully realised: despite the low cost of single-board computers, they still lack the power to handle large data volumes, especially real-time video.
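The saving from sending only high-level data can be estimated with a short sketch. It compares one uncompressed Full HD frame with a JSON summary of what an edge device detected in it; the detection records and the message layout are invented for the example and do not follow any particular product's format.

```python
import json


def raw_frame_bytes(width, height, channels=3):
    """Size of one uncompressed frame with one byte per colour channel."""
    return width * height * channels


def summarise(detections):
    """Encode the detections as a compact JSON message for the server."""
    return json.dumps({"detections": detections}).encode("utf-8")


# Hypothetical output of an on-device detector for a single frame.
detections = [
    {"label": "car", "confidence": 0.91, "box": [120, 80, 360, 240]},
    {"label": "person", "confidence": 0.87, "box": [400, 60, 460, 220]},
]

payload = summarise(detections)
frame_size = raw_frame_bytes(1920, 1080)
print(frame_size, len(payload))
```

The raw frame is about 6.2 MB, while the summary is a few hundred bytes at most. Real video streams are compressed, so the practical gap is smaller, but the direction of the trade-off is the same.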

What are the trends in computer vision?

One major direction is generative adversarial networks (GANs). Lately these algorithms have been used not only to stylise photos and videos as paintings by famous artists, but also to create convincing fakes.

For example, the project This Person Does Not Exist uses GANs to generate photorealistic images of people who do not exist. Similar projects include This Cat Does Not Exist for cats and This Sneaker Does Not Exist for sneakers.

Such algorithms let researchers and developers create synthetic datasets for training models. These datasets are easier to assemble and address some legal and ethical issues around image use.

Startups generating synthetic data are already putting this into practice. In October 2021, Gretel.ai raised $50m to support its platform for synthetic datasets. In July 2021 the British firm Mindtech received $3.25m to develop a service for training computer-vision algorithms with generated data.

Another important trend is 3D-scene modelling. Special algorithms, using photos taken from multiple angles, can reconstruct a scene in three dimensions.

The technology is actively used in construction, robotics, animation, interior design and the military.

Researchers note that algorithms still struggle with complex textures, such as tree leaves. Even so, such tools could soon significantly ease the work of 3D designers.
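The geometric core of reconstruction from several photos can be illustrated with the simplest case: two identical, side-by-side cameras (a rectified stereo pair). Under that assumption, the depth of a point is the focal length times the distance between the cameras, divided by the shift of the point between the two images. The camera parameters below are made-up values, and real pipelines must also estimate camera positions and match points across many views.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Depth in metres for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def triangulate(x_left, y_left, x_right, focal_length_px, baseline_m, cx, cy):
    """Recover the 3D position (in metres, left-camera frame) of a point seen
    at (x_left, y_left) in the left image and at x_right in the right image.
    (cx, cy) is the principal point, i.e. the image centre in pixels."""
    z = depth_from_disparity(focal_length_px, baseline_m, x_left - x_right)
    x = (x_left - cx) * z / focal_length_px
    y = (y_left - cy) * z / focal_length_px
    return x, y, z


# Hypothetical setup: 800 px focal length, cameras 10 cm apart, 640x480 images.
point = triangulate(x_left=420, y_left=240, x_right=380,
                    focal_length_px=800, baseline_m=0.1, cx=320, cy=240)
print(point)
```

Repeating this for many matched points yields a cloud of 3D points from which a scene model can be built.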

What role does computer vision play in the metaverse?

For the metaverse, computer vision may prove pivotal—from virtual and augmented reality to recognising objects, people and spaces.

During its rebranding event, Meta (formerly Facebook) showcased realistic avatars, their environments and a neural interface to control them. Computer-vision technologies were among those used to create them.

At the Ignite 2021 conference, Microsoft presented its vision of the metaverse and unveiled Mesh for Teams for VR headsets, smartphones, tablets and PCs.

At its GTC 2021 conference, NVIDIA announced Omniverse Avatar for creating interactive 3D characters. It combines computer vision, natural-language processing and recommender systems.

What are the risks of computer vision?

Despite its obvious benefits for business and society, the technology can be misused.

Tools for creating deepfakes are developing rapidly. Techniques for faking photos and videos have long existed, but deep learning has made them easier to produce and far more convincing.

Fraudsters can use deepfakes to create fake pornographic videos or fake appearances by politicians and other celebrities.

In 2017 a Reddit user going by the name “deepfakes” published several fake adult videos using the faces of celebrities such as Gal Gadot, Scarlett Johansson, Taylor Swift and Katy Perry.

That same year deepfakes were increasingly used to swap politicians’ faces: videos appeared online in which the face of Argentina’s president, Mauricio Macri, was replaced with Adolf Hitler’s, and that of Germany’s chancellor, Angela Merkel, with Donald Trump’s.

Computer-vision systems are often criticised for gender and racial bias, usually due to insufficiently diverse datasets.

In 2019 a Black resident of New Jersey spent ten days in jail because of a facial-recognition error. Other African Americans in other US cities have faced similar problems.

The technology is also criticised for excessive intrusion into privacy. Rights advocates say facial recognition in public places and tracking people’s movements via street cameras violates the right to privacy.

Developers and the public have suggested various remedies, from deepfake-detection systems to legislative bans on biometric identification. But there is still no consensus.
