Why Do AI Models Hallucinate? Insights from OpenAI

The company proposed ways to reduce neural network errors.

Language models tend to hallucinate because standard training and evaluation procedures encourage guessing rather than acknowledging uncertainty. This is detailed in an OpenAI research paper.

The company defined the issue as follows:

“Hallucinations are plausible but false statements generated by language models. They can occur unexpectedly even in response to seemingly simple questions.”

For instance, when researchers asked a “widely used chatbot” for the title of the doctoral dissertation of Adam Tauman Kalai, one of the paper’s authors, it confidently provided three different answers, none of which were correct. When asked about his birthday, the AI gave three dates, all of them wrong.

According to OpenAI, hallucinations persist partly because current evaluation methods set the wrong incentives, rewarding neural networks for “guessing” instead of admitting uncertainty.

An analogy was drawn to a situation where a person, unsure of the correct answer to a test question, might guess and accidentally choose the right one.

“Suppose a language model is asked about someone’s birthday but doesn’t know it. If it guesses ‘September 10th,’ the probability of a correct answer is one in 365. The answer ‘I don’t know’ guarantees zero points. After thousands of test questions, a model based on guessing appears better on the scoreboard than a careful model that admits uncertainty,” the researchers explained.
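The arithmetic behind this argument can be sketched in a few lines. This is a minimal illustration, assuming accuracy-only grading (1 point for a correct answer, 0 for a wrong answer or "I don't know"); the function name and the figure of 1,000 questions are hypothetical and do not come from the paper.

```python
from fractions import Fraction


def expected_accuracy_score(p_correct: Fraction, abstain: bool) -> Fraction:
    """Expected points per question under accuracy-only grading.

    A correct answer earns 1 point; a wrong answer and "I don't know"
    both earn 0, so abstaining can never score above zero.
    """
    return Fraction(0) if abstain else p_correct


n_questions = 1000            # hypothetical benchmark size
p_guess = Fraction(1, 365)    # chance that a blind birthday guess is right

# Expected totals for a model that always guesses vs. one that abstains.
guesser_total = n_questions * expected_accuracy_score(p_guess, abstain=False)
careful_total = n_questions * expected_accuracy_score(p_guess, abstain=True)

# Expected number of confident wrong answers produced by the guesser.
guesser_errors = n_questions * (1 - p_guess)

print(f"guesser: {float(guesser_total):.2f} points, "
      f"{float(guesser_errors):.2f} wrong answers")
print(f"careful: {float(careful_total):.2f} points, 0 wrong answers")
```

Under these assumptions the guesser always ranks above the careful model on the scoreboard, even though almost all of its answers are wrong.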

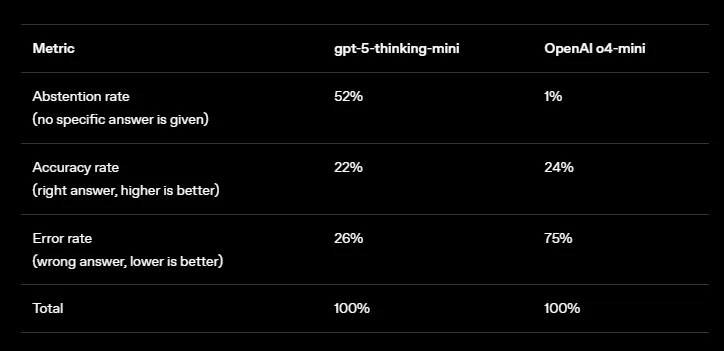

In terms of accuracy, OpenAI’s older model, o4-mini, performs slightly better. However, its error rate is significantly higher than that of GPT-5, as strategic guessing in uncertain situations increases accuracy but also raises the number of hallucinations.

Causes and Solutions

Language models are initially trained through “pre-training”—a process of predicting the next word in vast amounts of text. Unlike traditional machine learning tasks, there are no “true/false” labels attached to each statement. The model only sees positive examples of language and must approximate the overall distribution.

“It is doubly difficult to distinguish true statements from false ones when there are no examples labeled as false. But even with labels, errors are inevitable,” OpenAI emphasized.

The company provided another example. In image recognition, if millions of photos of cats and dogs are labeled accordingly, algorithms will learn to classify them reliably. But if each pet photo is instead labeled with the pet’s birthday, the task will always produce errors, regardless of how advanced the algorithm is, because birthdays are essentially random.

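The same applies to text: spelling and punctuation follow consistent patterns, so those errors diminish as scale increases. Arbitrary, rarely mentioned facts, like a pet’s birthday, cannot be predicted from patterns alone, which leads to hallucinations.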
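The contrast between learnable and arbitrary labels can be reproduced with a toy experiment. The sketch below is an assumption-laden illustration, not OpenAI's setup: the single "meows"/"barks" feature, the majority-vote learner, and the sample sizes are all invented for the example.

```python
import random
from collections import Counter, defaultdict

rng = random.Random(0)  # fixed seed so the run is repeatable


def species_label(feature):
    """Label that follows a consistent pattern in the input."""
    return "cat" if feature == "meows" else "dog"


def birthday_label(feature):
    """Label that is random and unrelated to the input (day of the year)."""
    return rng.randrange(365)


def make_data(n, label_fn):
    """Generate n (feature, label) pairs using the given labeling function."""
    data = []
    for _ in range(n):
        feature = rng.choice(["meows", "barks"])
        data.append((feature, label_fn(feature)))
    return data


def fit_majority(examples):
    """Learn the most frequent label seen for each feature value."""
    counts = defaultdict(Counter)
    for feature, label in examples:
        counts[feature][label] += 1
    return {f: c.most_common(1)[0][0] for f, c in counts.items()}


def accuracy(model, examples):
    """Fraction of examples whose label matches the model's prediction."""
    hits = sum(model.get(feature) == label for feature, label in examples)
    return hits / len(examples)


species_model = fit_majority(make_data(5000, species_label))
birthday_model = fit_majority(make_data(5000, birthday_label))

# Evaluate on fresh data drawn the same way.
species_acc = accuracy(species_model, make_data(2000, species_label))
birthday_acc = accuracy(birthday_model, make_data(2000, birthday_label))

print(f"species accuracy:  {species_acc:.3f}")
print(f"birthday accuracy: {birthday_acc:.3f}")
```

The species labels are recovered perfectly because they follow a pattern, while the birthday predictions stay near chance level no matter how much training data is added.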

Researchers argue that it is not enough to simply introduce “a few new tests that account for uncertainty.” Instead, “widely used accuracy-based evaluations need to be updated so that their scoring discourages guessing.”

“If the main scoreboards continue to reward lucky guesses, models will continue to learn to guess,” OpenAI said.
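One way to see how a scoring change alters the incentive is to subtract points for wrong answers. The following is a minimal sketch of that idea, not OpenAI's actual evaluation scheme: the `wrong_penalty` parameter, its values, and the function names are assumptions chosen for illustration.

```python
def expected_score(p_correct: float, wrong_penalty: float) -> float:
    """Expected points for answering: +1 if right, -wrong_penalty if wrong."""
    return p_correct - (1.0 - p_correct) * wrong_penalty


def should_answer(p_correct: float, wrong_penalty: float) -> bool:
    """Answer only if the expected score beats the 0 points for abstaining."""
    return expected_score(p_correct, wrong_penalty) > 0.0


def break_even_confidence(wrong_penalty: float) -> float:
    """Confidence at which answering and abstaining score the same.

    Solving p - (1 - p) * c = 0 for p gives p = c / (1 + c).
    """
    return wrong_penalty / (1.0 + wrong_penalty)


blind_guess = 1 / 365  # chance that a blind birthday guess is right

# Accuracy-only grading (no penalty): a blind guess still pays off.
print(should_answer(blind_guess, wrong_penalty=0.0))

# With a penalty equal to the reward, the same guess loses points on average.
print(should_answer(blind_guess, wrong_penalty=1.0))

# A hypothetical penalty of 3 points means answering pays only above 75% confidence.
print(break_even_confidence(3.0))
```

With no penalty, guessing is always the better strategy; once wrong answers cost points, a model that says "I don't know" when its confidence is low comes out ahead.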

Back in May, ForkLog reported that hallucinations remained a major issue for AI.
