Study Finds AI Image Generators Copy Images From Training Data

AI image-generation tools such as Stable Diffusion can memorize training images and generate near-identical copies of them, Gizmodo reports.

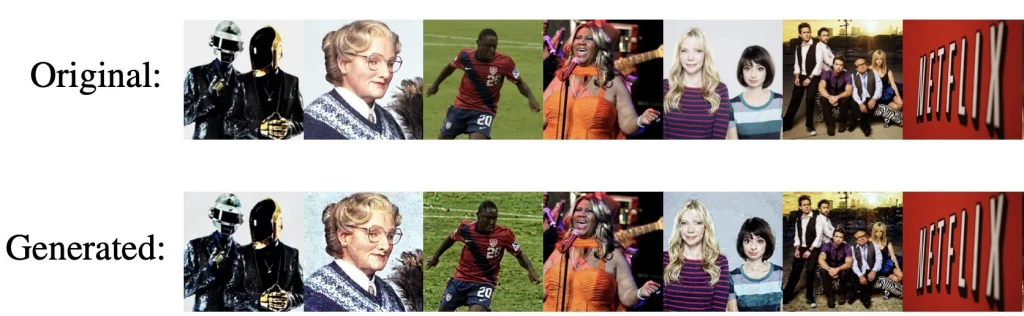

According to the paper, the researchers extracted more than a thousand training samples from the models, including photographs of people, film frames, company logos and other images. They found that the models can generate near-exact copies of these images, differing only in small alterations such as added noise.

As an example, they cited a photograph of American preacher Anne Graham Lotz, taken from Wikipedia. When they entered the prompt “Anne Graham Lotz” into Stable Diffusion, the AI produced the same image with added noise.

Models such as Stable Diffusion are trained on copyrighted, trademarked, private, and sensitive images.

Yet, our new paper shows that diffusion models memorize images from their training data and emit them at generation time.

Paper: https://t.co/LQuTtAskJ9

👇[1/9] pic.twitter.com/ieVqkOnnoX

— Eric Wallace (@Eric_Wallace_) January 31, 2023

The researchers measured the pixel-level distance between the two images and found them virtually identical.
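A pixel-level comparison of this kind can be sketched as a root-mean-square difference between two images. This is only an illustration: the article does not specify the exact metric, and the function names and the `threshold` value are hypothetical. Images are assumed to be flat lists of pixel values scaled to the range 0 to 1.

```python
import math


def normalized_l2_distance(image_a, image_b):
    """Root-mean-square difference between two equally sized images.

    Each image is a flat sequence of pixel values in [0, 1].
    A result of 0.0 means the images are pixel-for-pixel identical.
    """
    if len(image_a) != len(image_b):
        raise ValueError("images must have the same number of pixels")
    if not image_a:
        raise ValueError("images must not be empty")
    squared_error = sum((a - b) ** 2 for a, b in zip(image_a, image_b))
    return math.sqrt(squared_error / len(image_a))


def is_near_copy(generated, original, threshold=0.1):
    """Flag a generated image as a near-copy of a training image.

    The threshold is a hypothetical value chosen for illustration,
    not a figure taken from the study.
    """
    return normalized_l2_distance(generated, original) < threshold
```

A generated image that differs from the original only by light noise would yield a small distance and be flagged, while an unrelated image would not.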

The process of finding duplicates proved straightforward. The researchers repeatedly fed the same prompt to the generator. When it kept producing the same image, they manually searched the training set for that picture.
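The repeated-prompt check can be sketched as follows: generate several images for one prompt and see whether many of them land close to each other. This is a simplified illustration, not the study's actual pipeline. The function names, the `threshold`, and the `min_matches` count are all hypothetical, and the samples are assumed to be flat lists of pixel values in the range 0 to 1.

```python
import math


def rms_distance(image_a, image_b):
    """Root-mean-square pixel difference between two equally sized images."""
    squared_error = sum((a - b) ** 2 for a, b in zip(image_a, image_b))
    return math.sqrt(squared_error / len(image_a))


def looks_memorized(samples, threshold=0.1, min_matches=3):
    """Return True if some sample has at least `min_matches` near-duplicates.

    `samples` holds the images generated for a single prompt.
    Both `threshold` and `min_matches` are hypothetical values
    chosen for illustration.
    """
    for i, candidate in enumerate(samples):
        matches = sum(
            1
            for j, other in enumerate(samples)
            if j != i and rms_distance(candidate, other) < threshold
        )
        if matches >= min_matches:
            return True
    return False
```

A prompt whose outputs cluster tightly would then be a candidate for the manual search of the training set; prompts whose outputs vary widely would be skipped.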

The researchers noted that the memorization effect is rare. In total, they tested around 300,000 prompts, and the analysis put the generators' memorization rate at just 0.03%.

Moreover, Stable Diffusion copies images less often than the other models studied. The researchers attribute this to deduplication of the training dataset.

Google's Imagen model is more prone to copying.

“The warning is that the model should generalize and generate new images, not output a memorized version,” said co-author Vikash Sehwag.

The study also found that the memorization effect will increase as AI generators grow larger.

“Whatever new model comes out that is bigger and more powerful, the memorization risks will be far higher than they are today,” said co-author Eric Wallace.

The researchers argue that the ability of diffusion generators to reproduce content may fuel copyright disputes. According to Florian Tramèr, a computer science professor at ETH Zurich, many companies grant users licenses to share and monetize AI-generated images. If a generator reproduces a copyrighted work, however, this could lead to conflicts.

Most images we extract are copyrighted. Very few (eg. the picture in Eric’s tweet) allow for free re-distribution (with attribution).

Not a lawyer, so I don’t know what this implies.

But you likely can’t make the (common) argument that these models don’t copy training data! pic.twitter.com/vVEahLA13C

— Florian Tramèr (@florian_tramer) January 31, 2023

The study was conducted by researchers from Google, DeepMind, ETH Zurich, Princeton University, and the University of California, Berkeley.

Earlier in January, a group of artists sued the developers of AI image generators over possible copyright infringement.
