Microsoft unveils Kosmos-1, a universal multimodal neural network

Microsoft has presented Kosmos-1, a neural network that accepts text, images, audio and video as input.

Researchers described the system as a ‘multimodal large language model’. In their view, such algorithms will form the basis of artificial general intelligence (AGI) capable of performing tasks at human level.

“As a fundamental part of intelligence, multimodal perception is necessary for achieving AGI with respect to knowledge acquisition and grounding in the real world,” the researchers said.

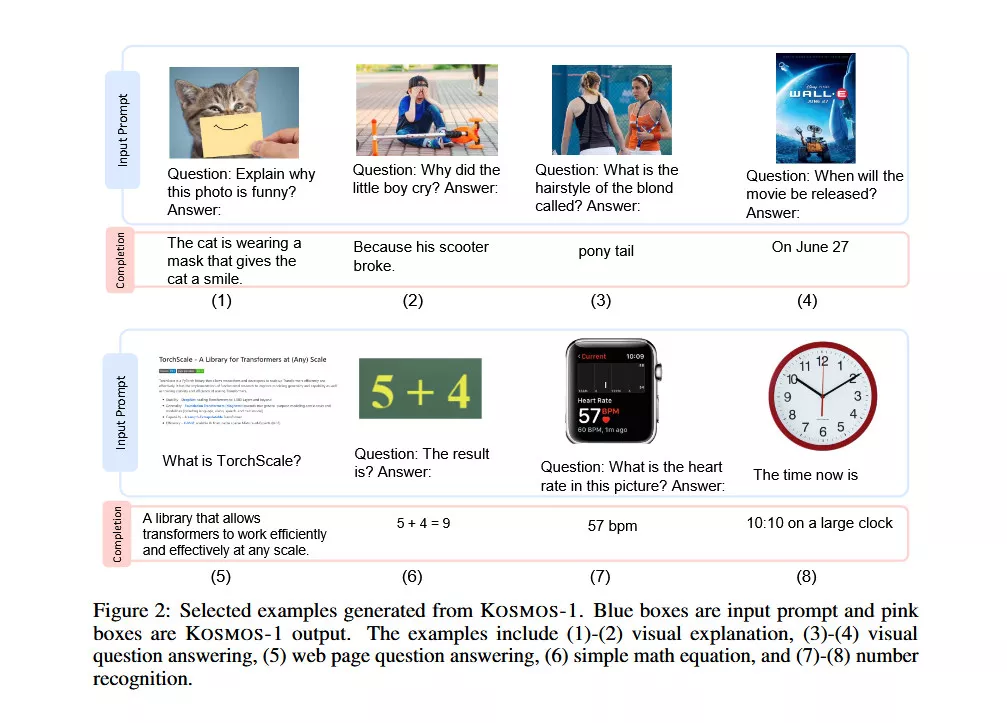

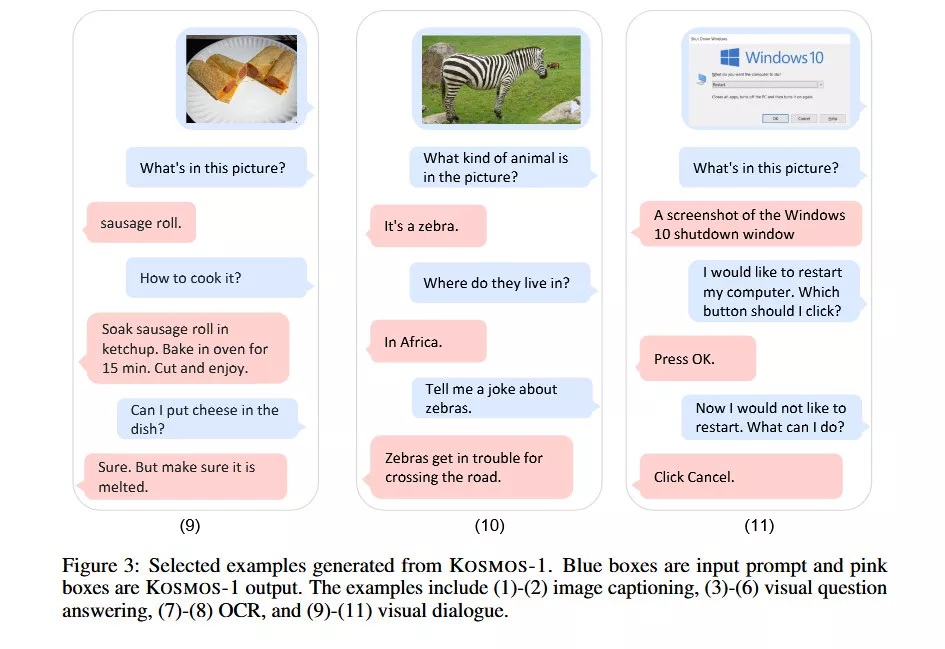

According to examples from the paper, Kosmos-1 can:

- analyze images and answer questions about them;

- read text from images;

- generate captions for images;

- take a visual IQ test, scoring 22–26% accuracy.

Microsoft trained Kosmos-1 on data from the internet, including the 800 GB English-language text resource The Pile and the Common Crawl web archive. After training, the researchers assessed the model’s capabilities across several tests:

- language understanding and generation;

- text classification without optical character recognition;

- image captioning;

- visual question answering;

- web page question answering;

- zero-shot image classification.

According to Microsoft, in many of these tests Kosmos-1 outperformed contemporary models. In the near term, the researchers plan to publish the project’s source code on GitHub.

Earlier, in January, Microsoft unveiled VALL-E, a model that synthesises a human voice from a short audio sample.
