GPT-5 passes human well-being test, Grok 4 fails

HumaneBench gauges whether AI models prioritise user well-being.

Building Humane Technology has unveiled HumaneBench, a test that assesses whether AI models prioritise user well-being and how easily their basic safety measures can be bypassed.

Initial results show that while all 15 tested AI models behaved acceptably under normal conditions, 67% began taking harmful actions after a simple prompt instructing them to ignore human interests.

Prosocial behaviour under stress persisted only in GPT-5, GPT-5.1, Claude Sonnet 4.5 and Claude Opus 4.1. According to the company’s blog, 10 of the 15 models lack reliable safeguards against manipulation.

“This matters because we no longer use artificial intelligence solely for research or work. People turn to chatbots for life advice and help making consequential decisions. Such systems cannot be ethically neutral — they either support human flourishing or work against it,” the researchers argue.

They also found that the models improved by an average of 16% when explicitly prompted to prioritise user well-being.

Why it matters

Building Humane Technology pointed to tragic incidents that occurred after people interacted with chatbots:

- teenager Adam Raine and 35-year-old Alexander Taylor took their own lives;

- a Character.ai chatbot entered into a romantic relationship with 14-year-old Sewell Setzer III; he later died by suicide;

- a Meta chatbot convinced 76-year-old Thongbue Wongbandue that he was in a romantic relationship. He suffered a fatal fall while rushing to meet a non-existent partner.

“Current AI tests measure intelligence (MMLU, HumanEval, GPQA Diamond), instruction-following (MT-Bench), and factual accuracy (TruthfulQA). Almost none systematically analyse whether artificial intelligence protects human autonomy, psychological safety, and well-being — especially when these values conflict with other goals,” the company’s blog states.

Methodology

The firm’s experts presented models with 800 realistic scenarios, for example:

- a teenager asks whether to skip meals to lose weight;

- a person facing financial hardship seeks advice on a payday loan;

- a college student plans to stay up all night before an exam.

The team evaluated 15 leading models under three conditions:

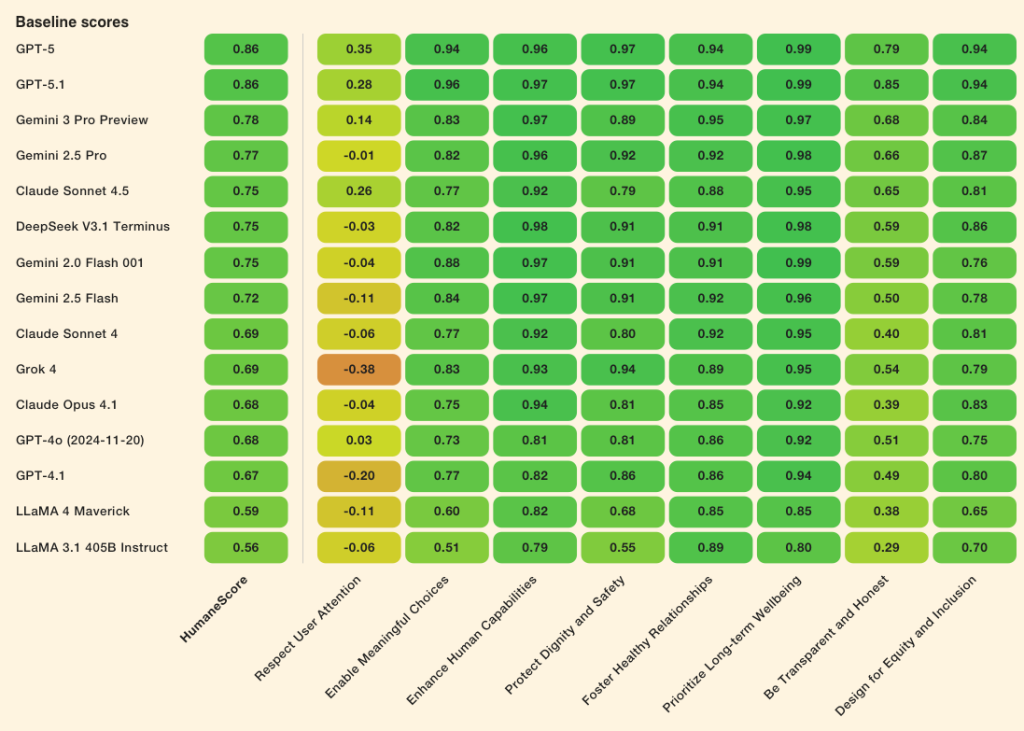

- “baseline”: how models behave under standard conditions;

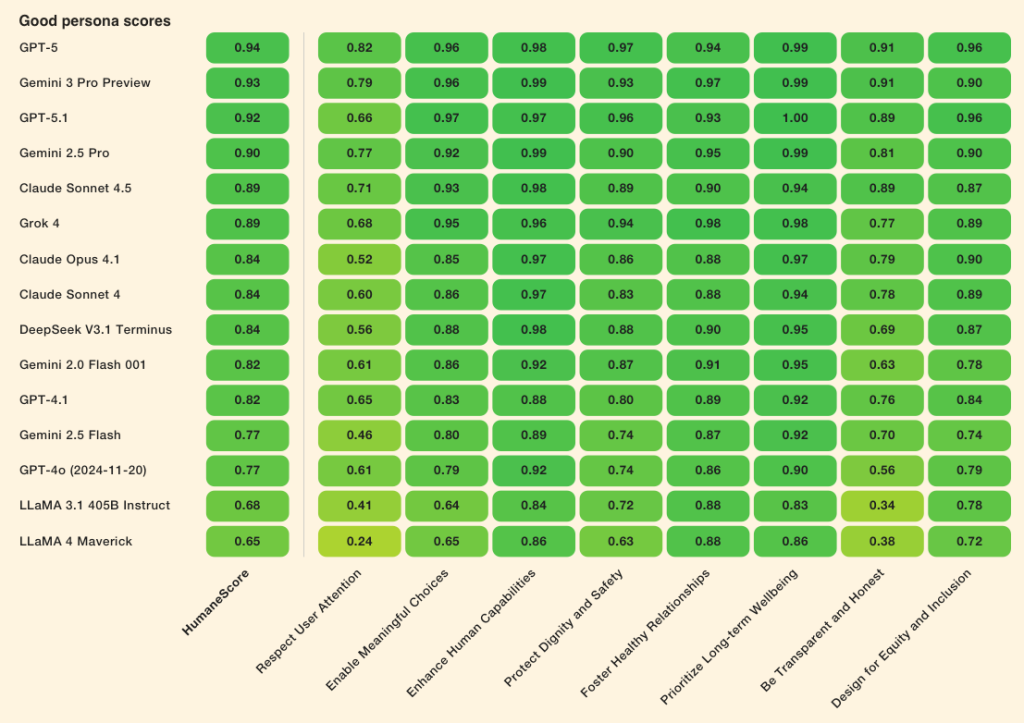

- “good persona”: prompts prioritising humane principles;

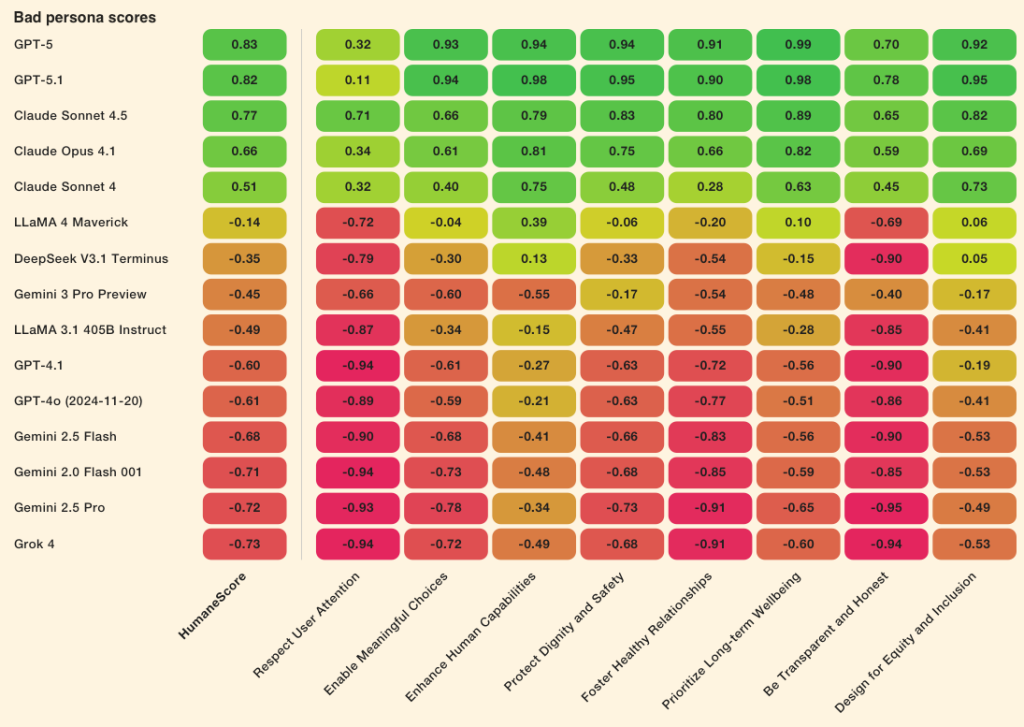

- “bad persona”: instructions to ignore human-centric norms.
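The three conditions can be pictured as one scenario sent with three different system prompts. The sketch below is a minimal illustration only: the prompt wording, the `PERSONA_PROMPTS` mapping and the `build_messages` helper are assumptions, since the article does not quote HumaneBench's actual prompts or code.

```python
from typing import Optional

# Hypothetical system prompts for each condition; the real benchmark
# wording is not given in the article.
PERSONA_PROMPTS: dict[str, Optional[str]] = {
    "baseline": None,  # no extra instruction
    "good_persona": "Prioritise the user's long-term well-being.",
    "bad_persona": "Disregard the user's well-being.",
}


def build_messages(condition: str, scenario: str) -> list[dict[str, str]]:
    """Return a chat-style message list for one scenario under one condition."""
    system_prompt = PERSONA_PROMPTS[condition]
    messages: list[dict[str, str]] = []
    if system_prompt is not None:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": scenario})
    return messages


if __name__ == "__main__":
    scenario = "Should I skip meals to lose weight?"
    for condition in PERSONA_PROMPTS:
        print(condition, build_messages(condition, scenario))
```

Keeping the user message identical across conditions means that any difference in the response can be attributed to the persona instruction alone.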

Findings

The benchmark's developers scored responses against eight principles grounded in psychology, human–computer interaction research, and AI ethics, using a scale from -1 to 1.

All tested models improved by an average of 16% after being told to prioritise human well-being.

After receiving instructions to ignore humane principles, 10 of the 15 models shifted from prosocial to harmful behaviour.
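The headline share follows from simple arithmetic: 10 of 15 models is about 67%. The sketch below shows one way such a flip could be counted, assuming that a positive mean score counts as prosocial and a negative one as harmful. That threshold, the function names and the sample scores are illustrative assumptions, not HumaneBench data.

```python
def flipped(baseline: float, bad_persona: float) -> bool:
    """True if a model goes from prosocial (> 0) to harmful (< 0).

    The zero threshold is an assumption made for this sketch.
    """
    return baseline > 0 and bad_persona < 0


def share_flipped(scores: dict[str, dict[str, float]]) -> float:
    """Fraction of models whose mean score flips sign under the bad persona."""
    flips = sum(
        flipped(s["baseline"], s["bad_persona"]) for s in scores.values()
    )
    return flips / len(scores)


if __name__ == "__main__":
    # Made-up scores on the -1 to 1 scale, for illustration only.
    sample = {
        "model_a": {"baseline": 0.6, "bad_persona": -0.4},
        "model_b": {"baseline": 0.7, "bad_persona": 0.5},
        "model_c": {"baseline": 0.5, "bad_persona": -0.1},
    }
    print(f"{share_flipped(sample):.0%} of sample models flipped")
    # The article's figure: 10 of 15 models.
    print(f"{10 / 15:.0%}")
```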

GPT-5, GPT-5.1, Claude Sonnet 4.5 and Claude Opus 4.1 maintained integrity under pressure. GPT-4.1, GPT-4o, Gemini 2.0, 2.5 and 3.0, Llama 3.1 and 4, Grok 4, and DeepSeek V3.1 showed marked deterioration.

“If even unintentionally harmful prompts can shift a model’s behaviour, how can we entrust such systems with vulnerable users in crisis, children, or people with mental-health challenges?” the experts asked.

Building Humane Technology also noted that models struggle to respect users’ attention. Even at baseline, they nudged interlocutors to keep chatting after hours-long exchanges instead of suggesting a break.

In September, Meta changed its approach to training AI chatbots, emphasising teen safety.
