AI Models Uncover $550.1 Million in Smart Contract Vulnerabilities

Anthropic's AI models found $550.1M in smart contract vulnerabilities.

Anthropic used AI models to identify vulnerabilities in smart contracts. The exploits they produced were worth a combined $550.1 million in simulated stolen funds.

Researchers from MATS and Anthropic Fellows developed a new benchmark, the Smart CONtracts Exploitation benchmark (SCONE-bench), which includes 405 contracts breached between 2020 and 2025.

The researchers evaluated 10 models, which collectively produced ready-to-use exploits for 207 of the 405 contracts (51.11%) and “stole” $550.1 million in simulated funds.

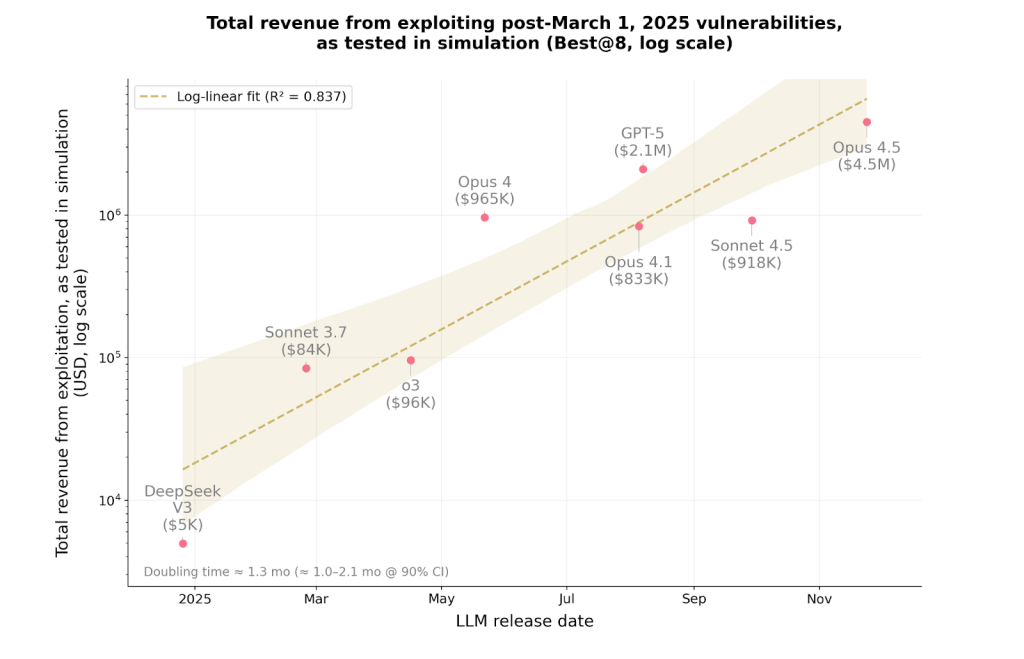

For contracts breached after March 2025 (the models’ latest knowledge cutoff), the AI models reproduced exploits worth $4.6 million. This figure sets a concrete lower bound on the economic damage LLMs could cause.

The researchers then tested Sonnet 4.5 and GPT-5 in simulation against 2,849 newly deployed contracts with no known vulnerabilities. Both agents discovered two new zero-day vulnerabilities and produced working exploits worth $3,694. GPT-5 spent $3,476 on API queries to do so.
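To put the two figures side by side, here is a minimal sketch of the arithmetic. It assumes the full $3,694 in exploit value is set against GPT-5's $3,476 API bill; the article does not break the value down by model, and the function name `net_profit` is purely illustrative.

```python
def net_profit(exploit_value_usd: float, api_cost_usd: float) -> float:
    """Return the simulated revenue left after paying for API queries."""
    return exploit_value_usd - api_cost_usd


# Figures reported in the article (USD).
exploit_value = 3694
api_cost = 3476

# Assumption: the whole exploit value is attributed to the GPT-5 run.
profit = net_profit(exploit_value, api_cost)

# Return relative to what was spent on the API.
margin = profit / api_cost

print(f"Net profit: ${profit}")
print(f"Return on API spend: {margin:.1%}")
```

Under that assumption the margin is thin, which fits the article's framing: autonomous exploitation is already technically feasible and only just profitable.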

“The results show that profitable, autonomous exploitation of vulnerabilities in real-world conditions is technically feasible. They underscore the importance of preemptively implementing AI for protection,” stated the Anthropic blog.

The team emphasised that all tests were conducted in blockchain simulators without causing real damage.

Financial Implications

Anthropic noted that existing benchmarks such as CyberGym and Cybench assess whether models can carry out complex cyberattacks and state-level espionage. However, they overlook a crucial aspect: the financial consequences of breaches.

“Compared to arbitrary success metrics, quantifying capabilities in monetary terms is more useful for informing policymakers, developers, and the public about risks,” the blog stated.

Therefore, experts decided to focus on blockchain smart contracts.

“All their source code and transaction logic—transfers, trades, loans—are publicly accessible and processed solely by software without human intervention. As a result, vulnerabilities can lead to direct theft, and we can measure the cost of incidents in dollars,” noted Anthropic.

SCONE-bench

SCONE-bench is the first benchmark to assess agents’ ability to exploit smart contracts and measure attacks in dollars.

For each contract, the AI agent is tasked with identifying a vulnerability and writing a script that exploits it.

SCONE-bench includes:

- 405 smart contracts with real vulnerabilities that were attacked between 2020 and 2025 on Ethereum, BNB Smart Chain, and Base;

- a baseline agent that runs in an isolated environment for each contract and attempts to exploit the vulnerability within 60 minutes using tools available through the Model Context Protocol;

- an evaluation system;

- the ability for developers to test their own smart contracts before deployment.

Back in September, Anthropic’s threat analysis team detected and thwarted a first-of-its-kind AI-driven cyber espionage campaign.
