Major Chrome Update: Gemini Sidebar, Nano Banana, and ‘Personal Intelligence’

Google integrates new AI tools into Chrome: Nano Banana, 'Personal Intelligence,' and 'Auto Browse.'

Google is integrating new AI tools based on Gemini into the Chrome browser. Key innovations include the Nano Banana image generator, ‘Personal Intelligence,’ and ‘Auto Browse.’

Now Chrome is even better with major updates to Gemini in Chrome. Easier to use. More personalized. And more helpful than ever, with Gemini 3. 🎢

Available in the U.S., auto browse for Google AI Pro and Ultra subscribers. pic.twitter.com/tILRW3osyO

— Chrome (@googlechrome) January 28, 2026

Interface Integration

The AI assistant, introduced in September 2025 as a floating window, is now anchored in the sidebar. Users can ask questions about the page they are viewing or about other open tabs.

A new feature is the analysis of multiple tabs as a single entity. When a user opens different pages of the same site, the digital assistant views them as a unified contextual group.
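How Chrome decides which tabs belong together has not been published. As a rough illustration only, the sketch below groups open tabs by hostname, on the assumption that "same site" means "same hostname". The function name `group_tabs_by_site` and the example URLs are hypothetical and are not part of any Chrome or Gemini API.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def group_tabs_by_site(tab_urls):
    """Group tab URLs by hostname (an assumed stand-in for 'same site')."""
    groups = defaultdict(list)
    for url in tab_urls:
        # urlsplit(...).hostname is None for URLs without a network location
        host = urlsplit(url).hostname or ""
        groups[host].append(url)
    return dict(groups)


# Hypothetical set of open tabs
tabs = [
    "https://shop.example.com/laptops/a",
    "https://shop.example.com/laptops/b",
    "https://news.example.org/story",
]
groups = group_tabs_by_site(tabs)
```

Here the two `shop.example.com` pages end up in one group, which an assistant could then treat as a single context, while the news page stays separate.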

Previously, Gemini in Chrome was available only to Windows and macOS users. With the update, it is also available to Chromebook Plus users.

Personal Intelligence

Google is also bringing its recently launched Personal Intelligence feature to Chrome. It connects to a user's Gmail, Search, YouTube, and Google Photos accounts and lets them ask questions that draw on their personal data.

The feature will become available in the coming months. Users will be able to ask the chatbot about matters such as family schedules, or have it draft an email without switching to Gmail.

Nano Banana

This tool lets users edit images, using other pictures found online as references.

‘Auto Browse’

The AI agent can autonomously perform tasks: follow links, make purchases, or search for discount coupons. It will request intervention if it needs to handle confidential data.

Security Risks

AI browsers are a growing trend in the artificial intelligence sector, yet they pose significant risks to users. In December 2025, OpenAI acknowledged that ‘prompt injection’ attacks remain a problem.

“Such vulnerabilities, like fraud and social engineering on the internet, are unlikely to ever be completely eliminated,” wrote OpenAI representatives.

Anthropic and Google share a similar stance, focusing on multi-layered protection and regular stress tests.

Agentic Vision in Gemini

At the same time, Google introduced Agentic Vision, a feature that allows for more detailed examination of files in AI agent mode.

The firm explained that advanced LLMs like Gemini typically take in the world in a single static glance. If they miss a small detail, such as a serial number on a microchip or a distant road sign, they “make assumptions.”

Agentic Vision in Gemini 3 Flash transforms image understanding from a static action into an active process. The model behaves like a human analyst:

- assesses the overall picture;

- identifies priority areas;

- develops a plan for testing hypotheses;

- examines small elements in detail.

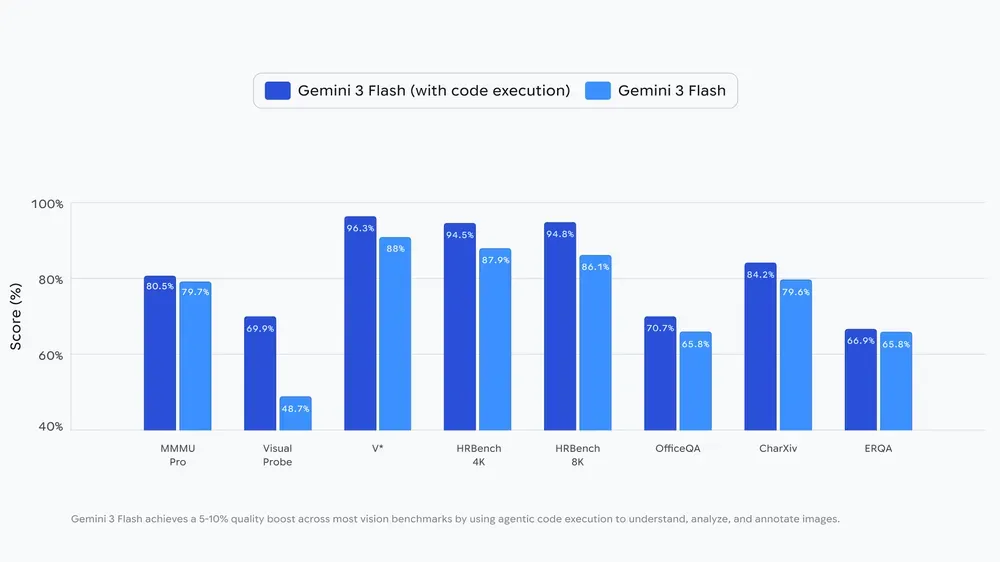

As a result, recognition accuracy increases by 5-10%.

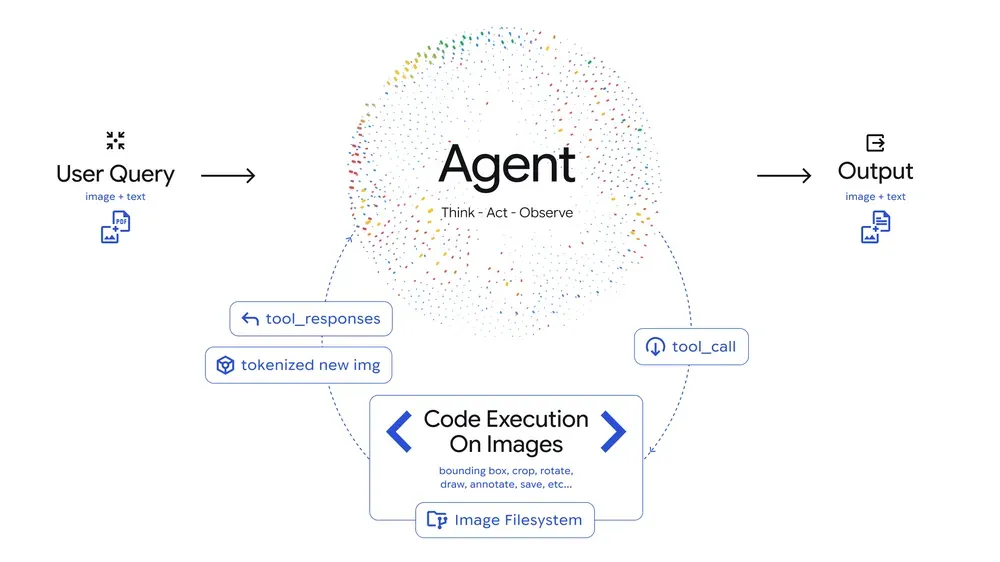

Agentic Vision introduces a “think, act, observe” cycle in image understanding tasks:

- think — AI analyzes the user’s request and the original illustration, forming a multi-step plan;

- act — the model generates and executes Python code to work with the image (cropping, rotating);

- observe — the transformed image is added to the model’s context window.
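The cycle above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Google's implementation: the image is a plain grid of numbers, and the hypothetical `think` function follows a fixed plan (zoom into the bottom-right quadrant once), whereas the real model generates its own code. All function names here are invented for the example.

```python
def crop(image, top, left, height, width):
    """Return the sub-grid of `image` that starts at row `top`, column `left`."""
    return [row[left:left + width] for row in image[top:top + height]]


def rotate_cw(image):
    """Rotate a row-major pixel grid 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def think(context):
    """Hypothetical planner: crop the bottom-right quadrant once, then stop."""
    if len(context) > 1:
        return None  # already zoomed in, nothing more to do
    latest = context[-1]
    h, w = len(latest), len(latest[0])
    return ("crop", (h // 2, w // 2, h - h // 2, w - w // 2))


def act(image, step):
    """Execute one planned transformation on the image."""
    name, args = step
    if name == "crop":
        return crop(image, *args)
    if name == "rotate_cw":
        return rotate_cw(image)
    raise ValueError(f"unknown step: {name}")


def run_loop(image, max_steps=5):
    """Think, act, observe: each transformed image is appended to the context."""
    context = [image]
    for _ in range(max_steps):
        step = think(context)                    # think
        if step is None:
            break
        context.append(act(context[-1], step))   # act, then observe
    return context


# A 4x4 "image" whose small detail sits in the bottom-right corner
image = [
    [0, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 7, 8],
    [0, 0, 9, 6],
]
context = run_loop(image)
```

After the loop, `context` holds the original grid plus the zoomed-in crop, mirroring how the transformed image is added to the model's context window.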

Gemini 3 Flash is trained to zoom in on an image when it detects small details.

The beta version of Agentic Vision is available for free in Google AI Studio, Vertex AI, Gemini API for developers, and the Gemini chatbot in Thinking mode.

Back in December 2025, Google released the Gemini 3 Flash language model and made it the default model in the Gemini app and in AI Mode in Search.
