The silicon curtain

GPUs, RAM and SSDs — what next?

The age of digital abundance—when any enthusiast could assemble a home server to rival a small firm’s kit—is drawing to a close. Owning cutting-edge hardware is taking on an air of elitism amid rising memory-chip prices and lengthening pre-order queues.

In a new ForkLog piece we examine why graphics cards have become the lifeblood of the AI industry, why Nvidia seems to love gamers less, and why freelance designers are renting capacity from cloud data centres. The larger question: how will the chip crunch affect blockchain decentralisation, where SSDs and DRAM are often pivotal?

Techno-feudalism or a passing squall

Judging by recent remarks from AI-industry chiefs and memory-chip makers, the age of the powerful personal computer (PC) may be ebbing.

The tech world is still chewing over a 2024 talk by Amazon’s founder Jeff Bezos, who likened the PC to a home generator in the era of grid electricity. Some now hail him as a seer.

The latest hardware has become the prime compute for training and serving LLMs. AI is stripping warehouses of HBM chips, whose makers once catered chiefly to consumer SSDs and RAM. As component prices climb, the market could shed an entire class of budget devices as soon as this year.

In early February TrendForce researchers raised their chip-price forecasts, expecting contract prices for consumer DRAM to jump by 90–95% in the first quarter of 2026 amid the AI boom. The previous estimate was 55–60%.

Training LLMs also demands vast troves of data. Enterprise buyers have snapped up SSDs of 2 TB and above with high write endurance. Silicon vendors, seeing fatter margins in AI, are reorganising capacity.

At the end of 2025, Micron Technology, the memory leader and once a staunch defender of desktops, announced it would shutter its Crucial consumer line. Production will cease in the second quarter of 2026, ending the brand's run of nearly 30 years.

Micron also plans to ramp HBM output. The company invested $9.6bn in new capacity in Hiroshima, Japan.

On 12 February Samsung Electronics said it had begun shipping advanced HBM4 chips to unnamed customers, an effort to close the gap with rivals such as SK Hynix in critical components for Nvidia’s AI accelerators.

The world’s largest chipmaker sits in a bind: it is a key memory supplier to Nvidia while also leading in smartphones and consumer electronics. It must preserve rich-margin AI contracts without weakening its gadget franchise.

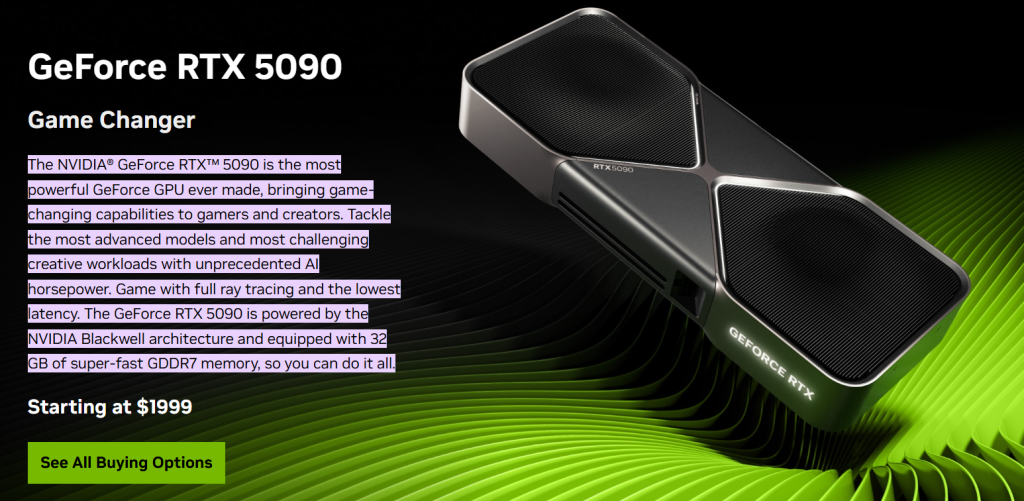

In September last year Samsung Semiconductor tried to strike a balance. The company confirmed that its top-end graphics-memory lines—GDDR7—can serve gamers and content creators as well as professional workstations.

These chips power Nvidia’s gaming flagship, the GeForce RTX 5090. Unveiled in January 2025, the card remains the unchallenged leader; last year’s $1,999 sticker bears little relation to reality. At the time of writing, listings range from $4,000 to $5,000.

China’s highly adaptive market, as usual, is seizing the opportunity. According to Nikkei Asia, memory makers CXMT and YMTC plan a major capacity build-out.

In 2027 they aim to open fabs in Shanghai and Wuhan, focusing first on DRAM and NAND rather than on HBM, which the market leaders are prioritising.

Alex Petrov, former CIO/CTO of Bitfury Group and co-founder of Hyperfusion, argues there is little sense in waiting for prices to fall; it is better to reprioritise spending.

“Do not wait; we live here and now. If you need hardware for work, mining, a node, it is better to buy now, putting up with high prices, and set aside what you can temporarily do without. Deferred demand by 2028 may be huge and unpredictable; one can only hope for old DDR3/4 and the arrival of new DDR6,” the expert told ForkLog.

Why graphics cards?

Why did the graphics cards that ran Quake III Arena in 2000 and Fallout 4 in 2015 end up first commandeered by PoW mining and then swallowed by the AI industry? The answer lies in the nature of GPUs—best seen in contrast to the CPU.

A CPU is a genius that can tackle any software task: write poetry, do taxes, run an operating system. But it executes sequentially on each core.

By contrast, a GPU is a factory of thousands of simple workers. Each is less bright than the genius, but they act in parallel.

To render a frame, you must compute the colour of millions of pixels—millions of identical operations per second. The graphics chip was born for parallelism.

Something similar happened with PoW mining on GPUs. Mining is a kind of lottery: the device must try billions of nonces per second to find the right hash. GPUs were ideal for this, spurring the first wave of shortages before Ethereum moved to PoS in 2022.
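The lottery can be sketched in a few lines of Python. This is a toy illustration rather than real mining code: the header bytes, the `mine` helper and the difficulty expressed as a number of leading zero bits are all assumptions chosen for readability, and real networks use their own header formats and target encodings.

```python
import hashlib

def mine(header: bytes, zero_bits: int, max_nonce: int = 2**32):
    """Try nonces until the double SHA-256 hash falls below the target."""
    target = 1 << (256 - zero_bits)  # a smaller target means a harder puzzle
    for nonce in range(max_nonce):
        candidate = header + nonce.to_bytes(4, "little")
        digest = hashlib.sha256(hashlib.sha256(candidate).digest()).digest()
        if int.from_bytes(digest, "big") < target:
            return nonce, digest.hex()
    return None  # no winning ticket in this nonce range

# Hypothetical header and a deliberately easy difficulty (16 zero bits).
result = mine(b"toy block header", zero_bits=16)
```

Each attempt is independent of every other, so the nonce range can be split across thousands of GPU threads instead of being walked by one loop.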

GPUs then proved a boon for AI. Modern LLMs such as ChatGPT or Gemini are, in essence, giant matrices—tables of numbers. Training them is endless matrix multiplication to tune “weights” (connections between neurons).
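A minimal sketch of that operation, in plain Python with made-up numbers, shows why it suits a GPU. The `matmul` helper and the tiny `inputs` and `weights` matrices are illustrative assumptions; real models work on matrices with billions of entries and use optimised libraries.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    # Each output cell depends only on one row of `a` and one column of `b`,
    # so all rows * cols cells could be computed at the same time.
    return [
        [sum(a[i][k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for i in range(rows)
    ]

inputs = [[1.0, 2.0], [3.0, 4.0]]                # two samples, two features each
weights = [[0.5, -1.0, 0.0], [0.25, 0.0, 2.0]]   # two inputs feeding three neurons
activations = matmul(inputs, weights)            # one row of outputs per sample
```

A CPU core walks through the output cells one after another; a GPU can hand each cell to its own worker.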

The maths that paints water reflections in Cyberpunk 2077 is the same linear algebra that underpins neural-network training. But AI needs not only compute grunt; it needs staggering data throughput. Consumer graphics memory is not enough—HBM has taken its place, pricey and scarce, and now courted by every tech giant.

Nvidia spotted this in time and, starting with the Volta architecture, began adding “tensor cores” tuned to perform matrix multiplications for AI tasks.

GPUs by the hour—and the loss of offline

For at least the next two years, content producers, video editors, designers, gamers, programmers, AI architects—anyone whose work hinges on powerful hardware—must choose: rent online capacity or pay a steep premium to upgrade a PC.

With shortages and queues for some components, subscriptions are gaining traction, pushing cloud data centres to become more customer-friendly. A raft of firms now rents flexible compute and GPUs, including Lambda Labs, Vast.ai, Hyperfusion, LeaderGPU, Hostkey and others.

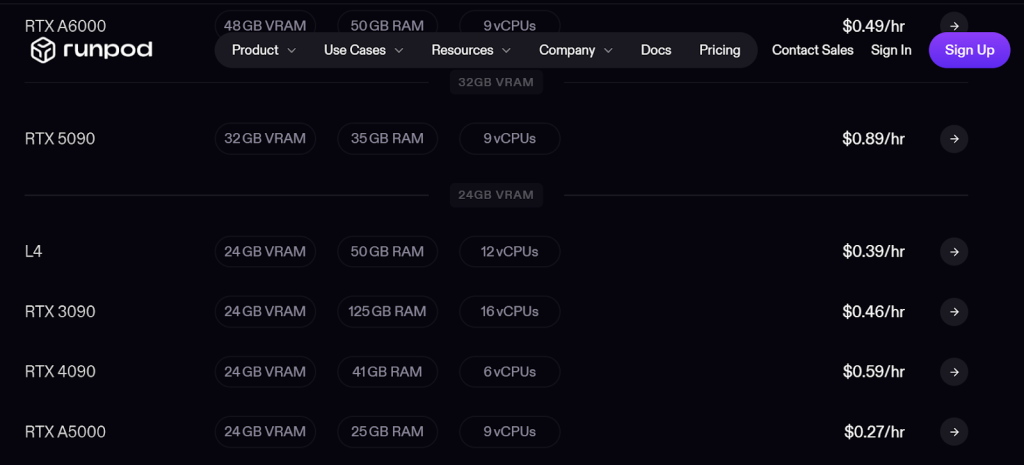

RunPod offers time on the scarce RTX 5090 at $0.89 per hour.
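A rough break-even estimate puts that rate next to the street prices quoted earlier. It is a back-of-the-envelope sketch under loud assumptions: it ignores electricity, the rest of the PC, resale value and any storage or traffic fees, and the eight-hour working day is hypothetical.

```python
def break_even_hours(purchase_price: float, hourly_rate: float) -> float:
    """Hours of rental that cost as much as buying the card outright."""
    return purchase_price / hourly_rate

HOURLY_RATE = 0.89              # RTX 5090 rental rate quoted above, USD per hour
STREET_PRICES = (4_000, 5_000)  # listing range quoted earlier, USD
HOURS_PER_DAY = 8               # assumption: one working day of use

for price in STREET_PRICES:
    hours = break_even_hours(price, HOURLY_RATE)
    days = hours / HOURS_PER_DAY
    print(f"${price}: {hours:,.0f} hours, about {days:,.0f} working days")
```

On these figures, renting costs less than buying until roughly 4,500 to 5,600 hours of use.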

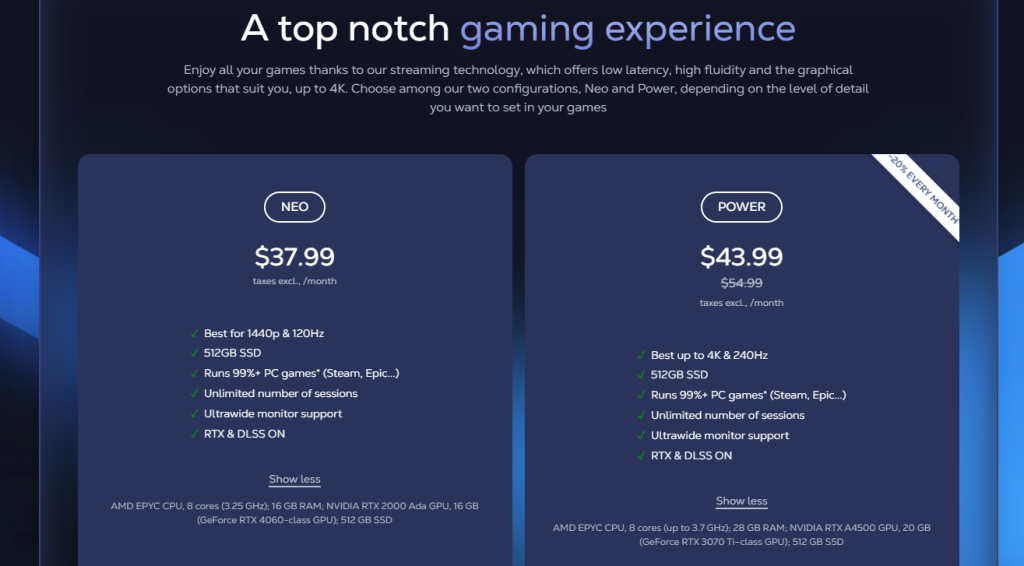

Shadow provides a remote desktop without limits on games or pro software for engineers and designers. By contrast, GeForce Now or Xbox Cloud offer less freedom—but at different price points.

Already, with a stable connection, a home smart TV can double as a powerful workstation if you rent the right hardware. The trade-off: responsibility for quality and uptime shifts from users to data-centre operators, who may prioritise larger clients and enforce sanctions.

Petrov noted that data centres guarantee 24/7 availability, backup power, redundant connectivity and proper maintenance quality.

“At the same time, you can quite well keep some things at home or at work. It is just often more expensive and less convenient,” he added.

According to him, many designers, video editors, producers and artists are already being displaced by AI. At some point they must lean on specialised AI apps that home hardware cannot handle.

“While LLM requirements grow exponentially, you can keep only small models on a phone or at home. Larger expert versions require a different scale, capacity and speeds, which is exactly what cloud data centers provide,” Petrov explained.

Bitcoin out in front again

The entire IT sector depends on components, but for blockchain the chip crunch poses a real threat to decentralisation and to the balance of power.

“The rise in memory prices is the consequence of decisions by individual commercial companies. Blockchain nodes are not the only ones affected; prices are rising for all devices with new DDR5 memory: smartphones, PCs, everything. This also forces blockchains to become smarter and more economical, and to look for other paths and solutions,” the Hyperfusion co-founder believes.

He highlighted a paradox now dogging PoS networks:

“Proof-of-Stake reduced the energy consumption of mining, but shifted the burden from electricity to memory and disks for businesses and users. In conditions where components have become 3–5 times more expensive, PoS chains have found themselves in a ‘perfect storm’ of reality.”

Blockchains like Ethereum and Solana follow a principle of “easy to create, but extremely costly to verify”. With many nodes and seven to nine proof steps, the barrier to entry for validators in PoS networks is often lower at deployment but higher in operating expenses.

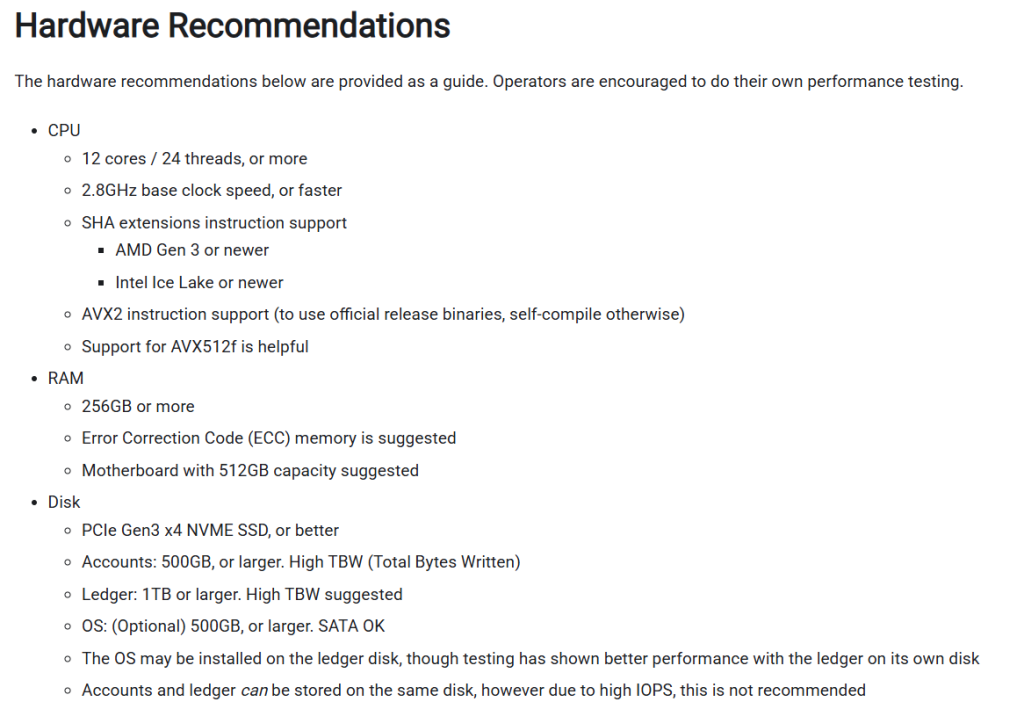

Petrov notes that in Ethereum each node must keep the entire database of accounts, contracts and balances in near-instant reach. That is tens of millions of constantly updated objects. For speed you need high-performance RAM and NVMe SSDs combined in a RAID array.

Nodes must process every block. In high-frequency networks (Solana: 400 ms, Ethereum: 12 s), signature verification and transaction execution demand huge resources. Requirements for full archive nodes are far higher: an Ethereum archive node calls for 128 GB of RAM and at least 12 TB of SSD storage.

Falling validator margins as components get pricier create a fresh centralisation risk. In January the daily number of active nodes on the Solana blockchain fell to 800, the lowest since 2021. As participation shrinks, small node operators struggle to cover voting and infrastructure costs unless they have ample delegated stake.

At the time of writing the network’s Nakamoto coefficient had fallen to 19 (in 2023 it was 33).

Within the Ethereum Foundation, ideas to lower infrastructure barriers are already under discussion. In May 2025 Vitalik Buterin proposed building on EIP-4444, a history-expiry scheme first drafted in 2021, which could materially reduce disk requirements. Nodes would store only the last 36 days of transaction history while retaining the current state and the structure of Merkle trees, cutting storage without impairing verification of the live chain.
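To give a sense of scale, the 36-day window can be converted into blocks using the 12-second block time cited above. The sketch below is only that conversion: the `retained_blocks` and `prune_cutoff` helpers and the head block number are hypothetical, and the EIP itself defines its own retention rules.

```python
SECONDS_PER_DAY = 86_400
BLOCK_TIME_S = 12       # Ethereum block interval cited above
RETENTION_DAYS = 36     # history window cited above

def retained_blocks(days: int = RETENTION_DAYS, block_time: int = BLOCK_TIME_S) -> int:
    """Number of recent blocks that fit inside the retention window."""
    return days * SECONDS_PER_DAY // block_time

def prune_cutoff(head_block: int) -> int:
    """Oldest block number whose history a node would still keep."""
    return max(0, head_block - retained_blocks() + 1)

window = retained_blocks()           # 259,200 blocks
cutoff = prune_cutoff(20_000_000)    # hypothetical chain head
```

Everything older than the cutoff would no longer have to sit on a node's own disk.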

In the new “silicon curtain” era, bitcoin remains the “people’s blockchain”.

“In bitcoin there is no state verification, only UTXO, which are easy to cache. The creation phase of PoW mining requires ASIC farms and huge energy-optimised capacity, but validation remains ultra-light. Verifying the result in PoW is extremely simple and fast—that is its beauty. Steps on a validator node: get the block data, check its hash, perform one or two hash operations, compare the target/difficulty, and everything is clear—yes/no,” Petrov explained.
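The check Petrov describes can be sketched for a single block header, here using the publicly known fields of bitcoin's genesis block. This is a simplified illustration, not a node implementation: the helper names are assumptions, and a real full node also validates transactions, timestamps and the chain of previous blocks.

```python
import hashlib
import struct

def bits_to_target(bits: int) -> int:
    """Expand the compact 'bits' field into the full 256-bit target."""
    exponent, mantissa = bits >> 24, bits & 0xFFFFFF
    return mantissa << (8 * (exponent - 3))

def check_header(version, prev_hash_hex, merkle_root_hex, timestamp, bits, nonce):
    """Hash the 80-byte header twice and compare the result with the target."""
    header = (
        struct.pack("<L", version)
        + bytes.fromhex(prev_hash_hex)[::-1]     # hashes are stored byte-reversed
        + bytes.fromhex(merkle_root_hex)[::-1]
        + struct.pack("<LLL", timestamp, bits, nonce)
    )
    digest = hashlib.sha256(hashlib.sha256(header).digest()).digest()
    block_hash = digest[::-1].hex()
    return int.from_bytes(digest, "little") <= bits_to_target(bits), block_hash

# Genesis block header fields.
valid, block_hash = check_header(
    version=1,
    prev_hash_hex="00" * 32,
    merkle_root_hex="4a5e1e4baab89f3a32518a88c31bc87f618f76673e2cc77ab2127b7afdeda33b",
    timestamp=1231006505,
    bits=0x1D00FFFF,
    nonce=2083236893,
)
```

Finding that nonce took the miner an enormous number of attempts; confirming it takes two hash operations and one comparison.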

For these reasons, a full bitcoin node can run on a modest server or desktop—and sometimes on newer Raspberry Pis with 4–8 GB of RAM. The impact of memory shortages on PoW nodes is minimal. SSD prices are rising, but capacities up to 1 TB remain within reach, he added.

What next?

Petrov argues the era of personal hardware is not over; different jobs simply call for different approaches:

“I like the quote: ‘The cloud is just someone else’s computer on the network’.”

Industry is scrambling for a way out of the chip crunch, developing new technologies:

- MRAM (Magnetoresistive RAM) is non-volatile. It is roughly 1,000 times faster than SSDs and more reliable than conventional drives. By 2026 it had begun to replace memory in critical systems (cars, space);

- CXL 3.1 (Compute Express Link) lets servers “share” RAM over a network. A boon for data centres—while binding users more tightly to the cloud.

This is not the first chip crisis, but it is the most structural. Memory has faced similar shocks before:

- 1986. The US forced Japan into an agreement that set a price floor for memory chips. DRAM prices tripled in a year. American PC makers (Commodore, Apple) nearly went bust, and Intel quit memory to focus on processors;

- 2011. Thailand’s floods. Western Digital plants producing 40% of the world’s HDDs were submerged. Prices spiked by 190% and took two years to normalise.

AI’s exponential rise makes the market’s next move hard to read. New capacity coming online could ease the crunch by 2028 if today’s pace holds.

If AI agents become the economy’s backbone, chip demand will outstrip supply growth. In that world, owning a powerful PC will be as elite a hobby as keeping a thoroughbred horse. Whatever the future brings, change your thermal paste on time.
