Cerebras breaks record for training the largest AI model on a single device

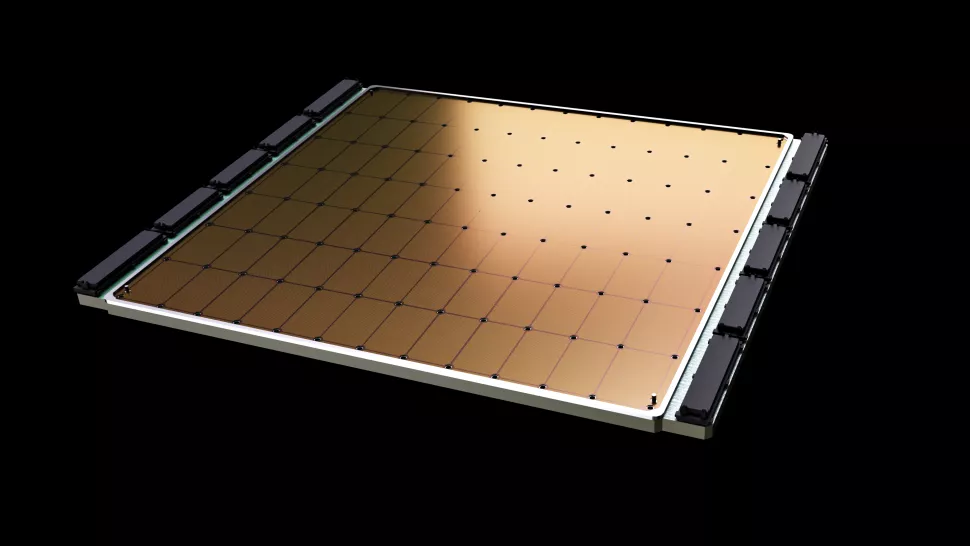

US startup Cerebras has trained what it calls the “largest AI model” ever trained on a single device: a CS-2 system built around its plate-sized Wafer Scale Engine 2 (WSE-2) chip, Tom’s Hardware reports.

“Using Cerebras’ software platform (CSoft), our customers can easily train modern GPT language models (such as GPT-3 and GPT-J) with up to 20 billion parameters on a single CS-2 system,” the company said.

According to the company, its Weight Streaming technology decouples compute from memory, so that memory can scale to whatever capacity is needed to store the rapidly growing parameter counts of AI workloads.

“Models running on a single CS-2 can be set up in minutes, and users can quickly switch between them with just a few keystrokes,” the statement said.

Fitting natural-language processing (NLP) models with up to 20 billion parameters on a single chip significantly reduces the overhead of training and scaling across thousands of GPUs, the company said. It also removes the need to partition a model across many GPUs, which Cerebras described as one of the most painful aspects of NLP workloads and a process that can take months.

The Wafer Scale Engine 2 chip is built on a 7-nanometer process, contains 850,000 cores, has 40 GB of on-chip memory with a bandwidth of 20 PB/s and consumes around 15 kW.
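The 20-billion-parameter figure and the chip’s 40 GB of on-chip memory can be put side by side with a rough estimate. The following Python sketch is an illustration, not Cerebras’s own accounting: it assumes 2 bytes per parameter for half-precision weights alone and 16 bytes per parameter for a full mixed-precision training state with the Adam optimizer (a commonly cited rule of thumb), treats 1 GB as 10^9 bytes, and ignores activations entirely. The function name `footprint_gb` is hypothetical.

```python
# Rough memory estimate for a dense model, under ASSUMED byte counts per parameter.
# Assumption: mixed-precision Adam training keeps 2 B fp16 weights + 2 B fp16 gradients
# + 4 B fp32 master weights + 8 B fp32 Adam moments = 16 bytes per parameter.
# Activations are ignored, so real training needs more than this.

ON_CHIP_MEMORY_GB = 40  # WSE-2 on-chip memory, as stated in the article

BYTES_PER_PARAM = {
    "fp16 weights only": 2,
    "mixed-precision Adam training state": 16,
}


def footprint_gb(num_params: float, bytes_per_param: int) -> float:
    """Return the memory footprint in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    params = 20e9  # largest model size Cerebras quotes for a single CS-2
    for label, nbytes in BYTES_PER_PARAM.items():
        gb = footprint_gb(params, nbytes)
        ratio = gb / ON_CHIP_MEMORY_GB
        print(f"{label}: {gb:.0f} GB ({ratio:.0f}x the on-chip memory)")
```

Under these assumptions, the weights of a 20-billion-parameter model alone would already fill the 40 GB, and the training state would need roughly eight times as much, which illustrates why the company stresses letting memory scale separately from compute.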

In April 2021, Cerebras unveiled the WSE-2 processor, designed for machine learning and artificial intelligence workloads.

In August 2021, the company unveiled technology for linking CS-2 systems into clusters, which it said could train AI models with up to 120 trillion parameters.

In May 2022, the Top500 list was topped by the US Frontier system at Oak Ridge National Laboratory. It became the first machine to break the exascale barrier, scoring 1.1 exaflops on the Linpack benchmark.

