Character.AI to Restrict Teen Access to AI Characters

The decision follows a series of lawsuits and tragic incidents.

Character.AI, a platform for chatting with AI characters, will restrict access for users under 18 following a series of lawsuits.

“The decision to disable open-ended chat was not easy, but we believe it is the only right choice in the current situation,” the company said.

Until November 25, minors' chat time with bots will be limited. The limit will start at two hours per day and gradually shrink until open-ended chat is shut off entirely.

Character.AI will offer teenagers other creative formats: creating videos, writing stories, and streaming using AI characters.

To verify users’ ages, the platform will deploy a comprehensive system that combines Character.AI’s in-house tools with third-party solutions such as Persona.

In parallel, the platform will establish and fund a non-profit, the AI Safety Lab, which will focus on developing new safety standards for AI-powered entertainment features.

Legal Proceedings

Character.AI faces several lawsuits. One was filed by the mother of a 14-year-old who took his own life in 2024 after becoming obsessed with a chatbot modeled on a character from “Game of Thrones.”

Following numerous complaints, the platform has already implemented parental controls, usage time notifications, and filtered character responses.

In August, OpenAI shared plans to address ChatGPT’s shortcomings in handling “sensitive situations.” That move was also prompted by a lawsuit from a family who blamed the chatbot for their son's death.

Meta took similar steps, changing how it trains its AI chatbots to prioritize teen safety.

Later, OpenAI announced plans to route sensitive conversations to reasoning models and to introduce parental controls. In September, the company launched a version of the chatbot for teenagers.

Criticism

On October 27, OpenAI released data indicating that approximately 1.2 million of its 800 million weekly active users discuss suicide with ChatGPT.

Another 560,000 users show signs of psychosis or mania, while 1.2 million exhibit increased emotional attachment to the bot.

“We recently updated the ChatGPT model to better recognize users in moments of distress and provide support. In addition to our standard safety metrics, we have added assessments of emotional dependency and non-suicidal mental health crises to our baseline test set; this will become the standard for all future models,” the company said.

However, many believe these measures may be insufficient. Former OpenAI safety researcher Steven Adler has warned about the dangers of an AI development race.

He argues that the company behind ChatGPT has offered almost no evidence of real improvements in protecting vulnerable users.

Excitingly, OpenAI yesterday put out some mental health, vs the ~0 evidence of improvement they’d provided previously.

I’m excited they did this, though I still have concerns. https://t.co/PDv80yJUWN

Steven Adler (@sjgadler), October 28, 2025

“People deserve more than just words about addressing safety issues. In other words: prove you have actually done something,” he noted.

Adler praised OpenAI for sharing some data on users’ mental health but urged the company to “go further.”

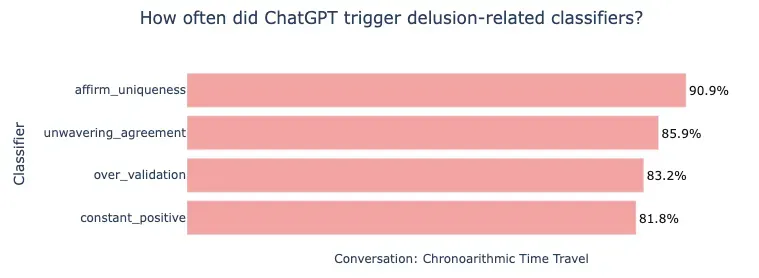

In early October, Adler analyzed the case of Allan Brooks, a Canadian man who fell into a delusional state after ChatGPT consistently reinforced his belief that he had discovered revolutionary mathematical principles.

Adler found that OpenAI’s own tools, developed in collaboration with MIT, would have flagged more than 80% of the chatbot’s responses in that conversation as potentially dangerous. In his view, the company was not actually applying these safeguards in practice.

“I want OpenAI to try harder to do the right thing before media pressure or lawsuits arise,” Adler wrote.

In October, a study found signs that AI models degrade when trained on social media content.
