Engineers more than double neural-network performance on CPUs

Israeli AI startup Deci has announced breakthrough deep-learning performance on central processing units (CPUs).

The news is out! 🎉 We’re excited to announce that our family of image classification models called DeciNets reached a new level of industry-leading performance on large CPUs including Intel’s Cascade Lake. /1 pic.twitter.com/aCKGBDFpGo

— Deci AI (@deci_ai) February 16, 2022

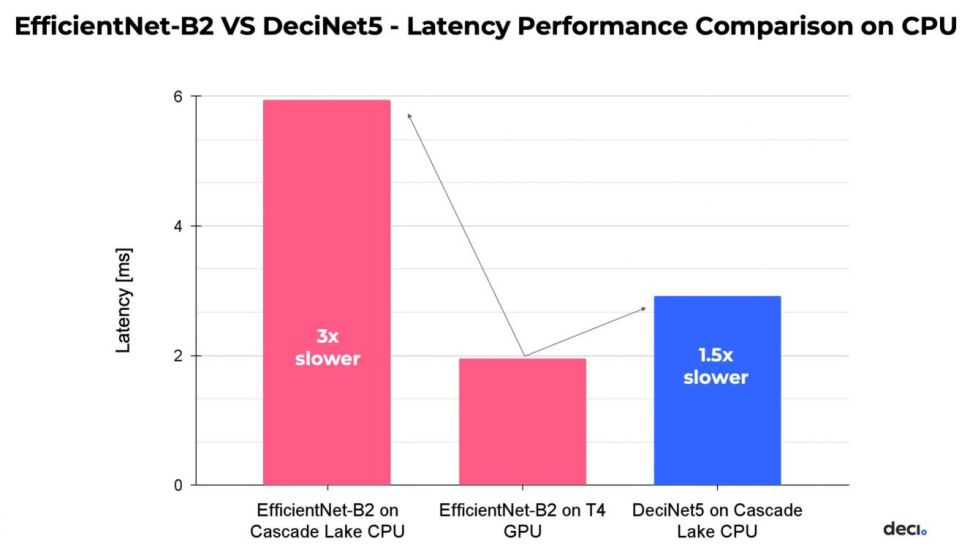

According to company representatives, Deci's image-classification models are optimized for Intel Cascade Lake processors. They were built with Deci's patented Automated Neural Architecture Construction (AutoNAC) technology and, on similar hardware, run more than twice as fast as Google's EfficientNets while also being more accurate.

Deci co-founder and CEO Yonatan Geifman said the company's aim is to develop models that are not only more accurate but also more resource-efficient.

"AutoNAC creates the best computer-vision models to date, and now the new class of DeciNet networks can be used to run AI applications efficiently on CPUs," he added.

The company also said it has been working with Intel for almost a year to optimize deep learning on Intel processors, and that several Deci customers have already deployed AutoNAC technology in production.

Image classification and object recognition are among the core tasks for which deep-learning algorithms are used. Experts say that narrowing the performance gap between CPUs and GPUs would not only lower the cost of developing modern AI algorithms but also ease pressure on the GPU-accelerator market.

In April 2021, researchers at Rice University developed a new deep-learning technique that trains neural networks on CPUs 4 to 15 times faster than GPU-based training.

In May 2021, scientists used AI to speed up simulations of the Universe by a factor of 1,000.
