Google Integrates Gemini Chatbot on iPhone Lock Screen

- Google has updated the Gemini chatbot app, adding widgets to the iPhone lock screen and Control Center.

- The company has made the SpeciesNet AI model available, which helps identify animal species by analyzing photos from camera traps.

- Google has added the Data Science Agent AI to the Colab cloud platform.

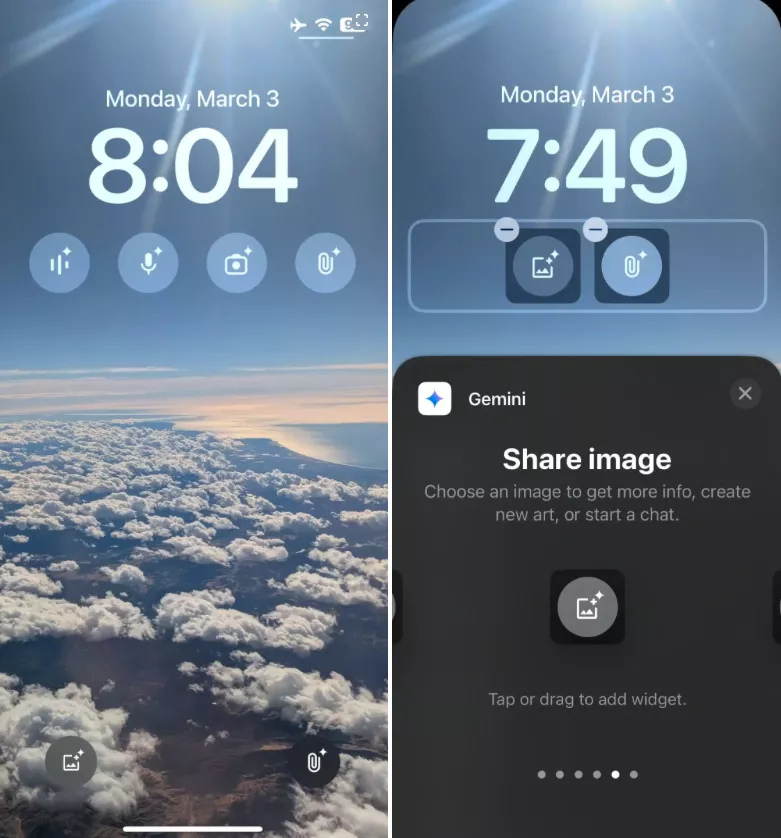

iPhone users can now access Google’s Gemini chatbot directly from the lock screen, thanks to a recent update, reports 9to5Google.

Following a redesign of Gemini on the iPhone, the app now offers lock screen widgets and controls in the Control Center.

The new quick access buttons provide:

- the ability to ask the chatbot questions via text;

- a voice communication function;

- microphone activation for setting reminders, creating events, and other tasks;

- camera use for taking photos and querying objects on the screen;

- a “Share Image” option;

- and a similar sharing option for files.

Any of the buttons can be placed on the lock screen or in the Control Center, which opens with a swipe down from the top-right corner of the screen.

Other Google Releases

Google’s updates to AI products did not stop there. The company released the SpeciesNet AI model, which aids in identifying animal species by analyzing photos from camera traps.

Researchers worldwide use camera traps (cameras connected to infrared sensors) to study wildlife populations. The traps can provide valuable insights, but analyzing the images they produce can take days or weeks.

Many tools on Google’s Wildlife Insights platform are powered by SpeciesNet, which was trained on over 65 million publicly available images. The model can classify an image into one of more than 2,000 categories.

“Conservation and biodiversity are crucial in combating climate change, and we look forward to supporting startups aimed at addressing this challenge,” the company blog states.

Colab Update

Google has added the Data Science Agent AI to Colab, its cloud platform for working with Python code, machine learning, and data analysis. The tool is meant to help users quickly clean data, visualize trends, find anomalies in API data, analyze customer data, and write SQL code.
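For a sense of what such tasks look like in a Colab notebook, here is a minimal Python sketch of cleaning data and flagging anomalies. It is not output from the Data Science Agent or Google's code: the sample latencies, the function names `clean` and `find_anomalies`, and the 3.5 threshold are all assumptions chosen for illustration.

```python
from statistics import median


def clean(raw):
    """Drop missing or non-numeric readings and return the rest as floats."""
    cleaned = []
    for value in raw:
        try:
            cleaned.append(float(value))
        except (TypeError, ValueError):
            continue  # skip None, empty strings, and other unparseable entries
    return cleaned


def find_anomalies(values, threshold=3.5):
    """Return values whose modified z-score (median and MAD based) exceeds threshold."""
    med = median(values)
    mad = median(abs(v - med) for v in values)  # median absolute deviation
    if mad == 0:
        return []  # no spread in the data, so nothing can be flagged
    return [v for v in values if 0.6745 * abs(v - med) / mad > threshold]


# Hypothetical API response times in milliseconds, with gaps and one spike.
raw_latencies = ["120", "118", None, "125", "", "119", "950", "122", "121"]
latencies = clean(raw_latencies)
anomalies = find_anomalies(latencies)
print(anomalies)  # [950.0]
```

The sketch uses the median rather than the mean so that a single extreme reading does not distort the baseline it is compared against.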

In the future, the agent may appear in other Google applications and services aimed at developers.

In March, the company announced that the Gemini AI assistant would gain the ability to answer questions about video and on-screen content in real time.

In February, Google unveiled the new flagship AI model Gemini 2.0 Pro Experimental. The company also added the “thinking” neural network Gemini 2.0 Flash Thinking to the chatbot app.
