Instagram to test Nudity Detector in private chats

Meta has begun developing a system to recognise nudity in photos sent via Instagram direct messages, The Verge reports.

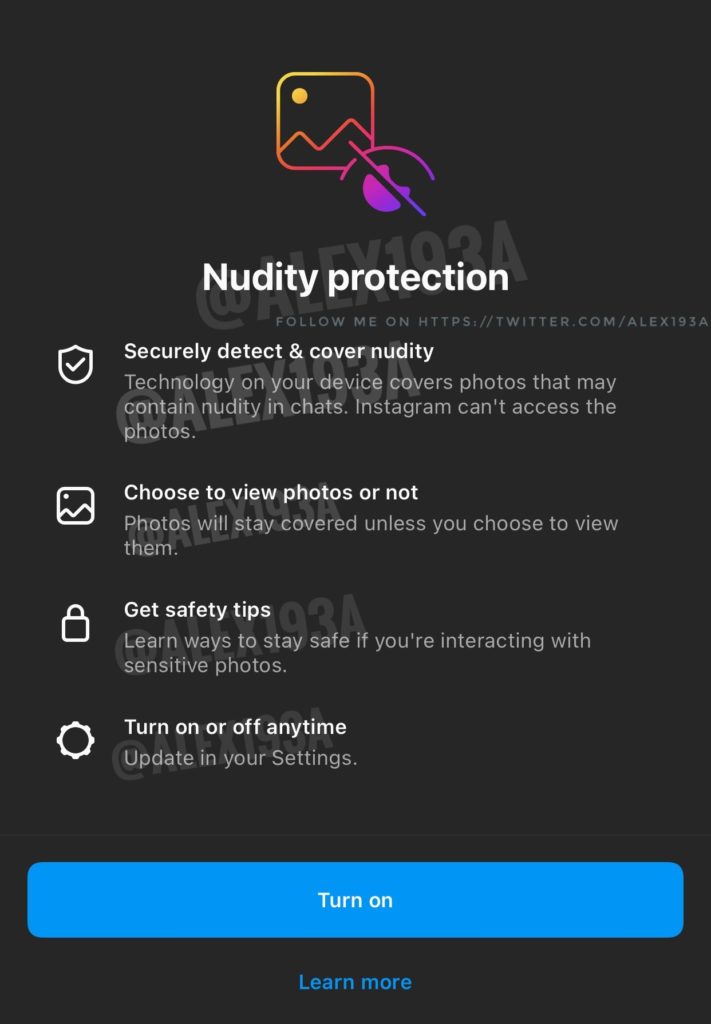

Researcher Alessandro Paluzzi posted a screenshot on Twitter demonstrating the ‘Nudity Protection’ feature. According to Paluzzi, the technology hides photographs containing nudity and lets users choose whether to open them.

Meta confirmed that the feature is in an early stage of development.

According to company representatives, the technology should protect users from nude photographs or other unwanted messages. Meta stressed that it would not be able to view the images or share them with third parties.

“We are working closely with experts to ensure that the new features safeguard people’s privacy and give them control over the messages they receive,” said a company spokesperson.

Meta said it would share more details about the feature in the coming weeks as testing begins.

Meta also noted that Nudity Protection is similar to the ‘Hidden Words’ option, launched in April 2021, which allows users to filter message requests. If a request contains any of the selected filter words, it is automatically placed in a hidden folder. Such requests are not deleted, and users can decide for themselves whether to read them.
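The filtering behaviour described for Hidden Words can be illustrated with a minimal sketch. This is not Instagram's implementation: the `Inbox` class, its `receive` method, and the field names are hypothetical, and the matching is assumed to be simple, case-insensitive whole-word comparison.

```python
from dataclasses import dataclass, field


@dataclass
class Inbox:
    """Hypothetical inbox that routes message requests by filter words."""

    filter_words: set[str]
    requests: list[str] = field(default_factory=list)  # visible requests
    hidden: list[str] = field(default_factory=list)    # hidden folder

    def __post_init__(self) -> None:
        # Normalise filter words once so matching is case-insensitive.
        self.filter_words = {w.lower() for w in self.filter_words}

    def receive(self, message: str) -> None:
        # Split into words, dropping surrounding punctuation.
        words = {w.strip(".,!?:;\"'").lower() for w in message.split()}
        if words & self.filter_words:
            # The message is kept, not deleted; the user can still open it.
            self.hidden.append(message)
        else:
            self.requests.append(message)


inbox = Inbox(filter_words={"spam"})
inbox.receive("Buy SPAM now!")
inbox.receive("Hello there")
```

In this sketch the first message lands in `inbox.hidden` and the second in `inbox.requests`; nothing is discarded, which mirrors the point that the user decides whether to read hidden requests.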

In July 2022, British authorities backed the idea of scanning users’ smartphones for child sexual abuse material (CSAM).

In December 2021, Apple added a feature to iOS 15.2 that detects intimate photos in Messages.
