Overview of OpenAI’s GPT-4: capabilities and how it differs from its predecessors

On 14 March 2023, the AI lab OpenAI introduced GPT-4, a large multimodal neural network. Shortly after the release, the developers opened access to ChatGPT Plus subscribers, with some restrictions.

The ForkLog editorial team tested the model, assessed its capabilities and compared it with GPT-3.5.

- GPT-4 is available to ChatGPT Plus subscribers and to users of the updated Bing chat.

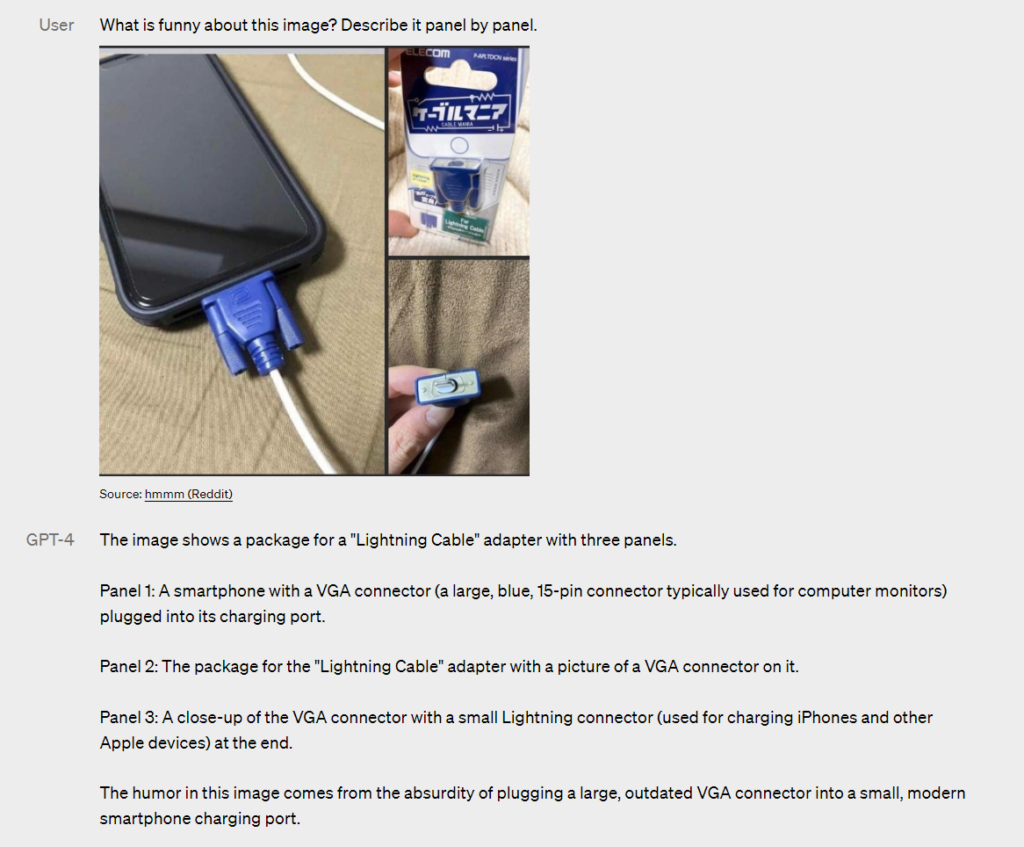

- The fourth version of the algorithm accepts text prompts and images as input and produces text output.

- The neural network can assume a chosen role, speak in a particular tone, and assist with a range of tasks.

- In casual conversation, there are virtually no differences in how GPT-3.5 and GPT-4 interact.

What GPT-4 is and how to talk to it

GPT-4 is the fourth generation of OpenAI’s language model. According to the developers, compared with GPT-3.5 the new algorithm is more reliable, more creative and able to handle more nuanced instructions.

The company shared the results of numerous tests and showed several demonstrations of its operation.

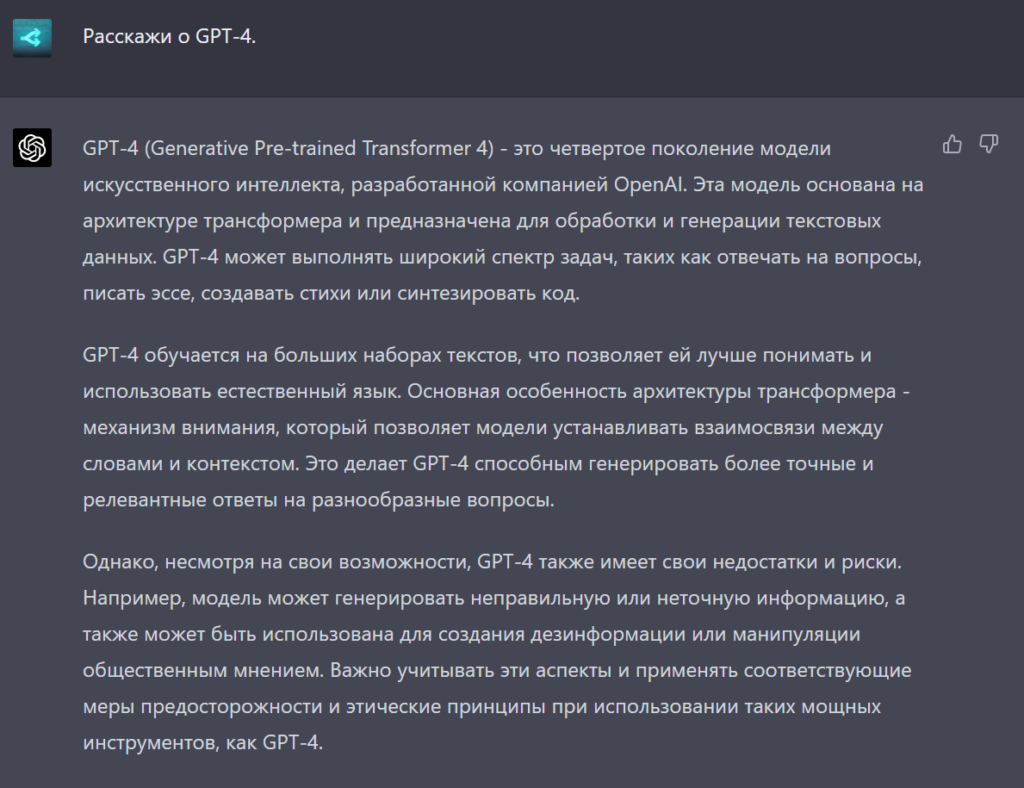

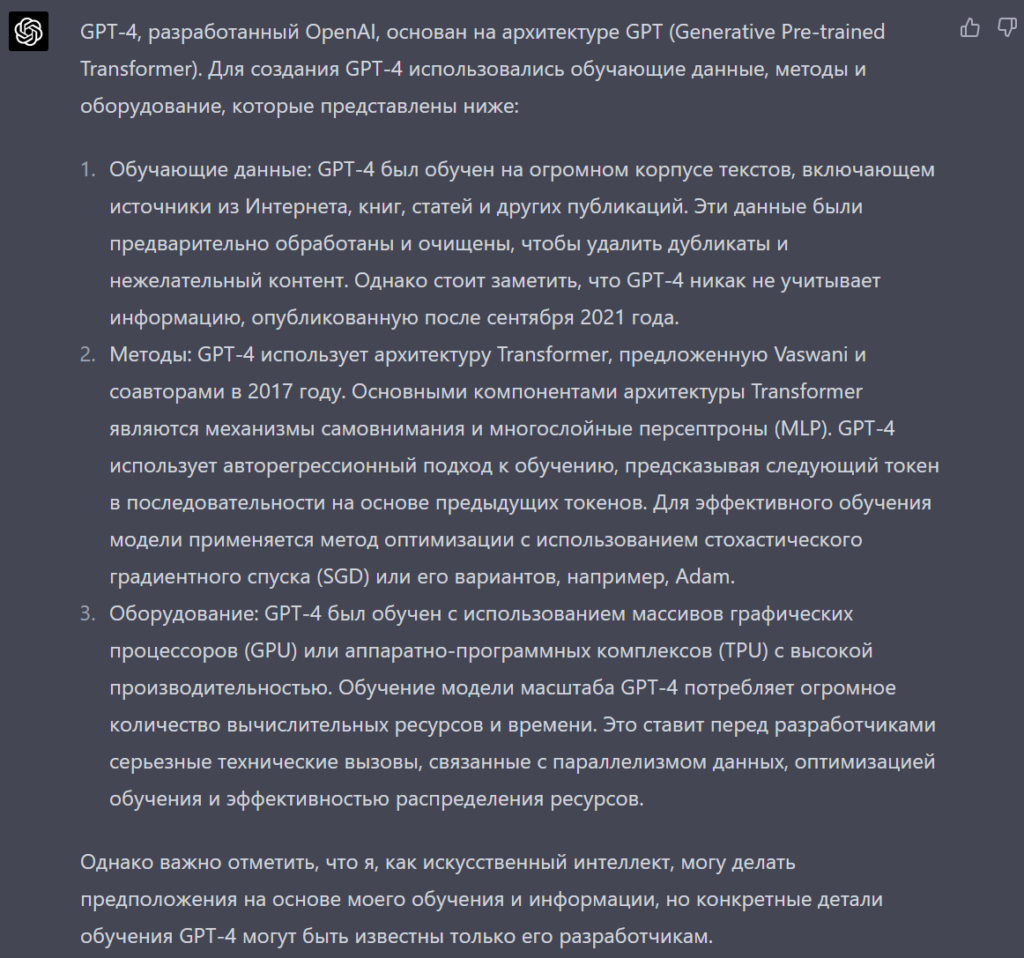

However, the AI lab offered no details about training data, methods, hardware or energy costs involved in training the model.

If you ask the algorithm about this, it gives vague answers without specifics. The same holds for GPT-3.5.

The neural network is available in all countries except Afghanistan, Belarus, Venezuela, Iran, China and Russia.

In February it emerged that Ukraine had been removed from the list of countries where OpenAI services are blocked. Yet the company’s algorithms do not operate in territories temporarily occupied by Russia.

Users in regions where the technology is unavailable can use GPT-4 only with a VPN service and an active phone number from a supported country.

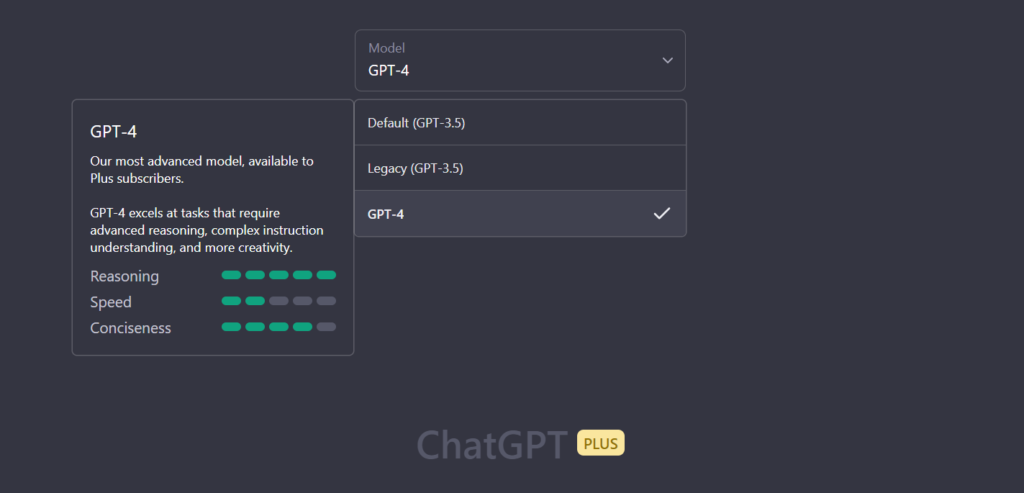

To converse with the model, you need a ChatGPT Plus subscription, which costs $20 per month. Then, at the top of the chat page, click the Model field and select GPT-4.

In March 2023 Microsoft confirmed that the updated Bing runs on an optimized version of GPT-4. Later the company also removed the waitlist for the AI chat, giving everyone the option to use the enhanced search mode.

Moreover, Bing’s algorithm has knowledge beyond the “as of September 2021” cutoff and uses up-to-date information in conversations.

GPT-4, like its predecessor, provides detailed answers to questions.

As of publication, the speed of text generation remained modest: the bot wrote about two to three words per second.

On 21 March, after a major outage, developers imposed a limit of 25 text generations within three hours. Previously, users could send up to 100 messages every four hours.

It emerged that OpenAI temporarily paused ChatGPT after reports of a bug that allowed some users to see the titles of other people’s chat histories.

CEO Sam Altman said the developers “feel awful” about it.

we had a significant issue in ChatGPT due to a bug in an open source library, for which a fix has now been released and we have just finished validating.

a small percentage of users were able to see the titles of other users’ conversation history.

we feel awful about this.

— Sam Altman (@sama) March 22, 2023

Capabilities of the algorithm

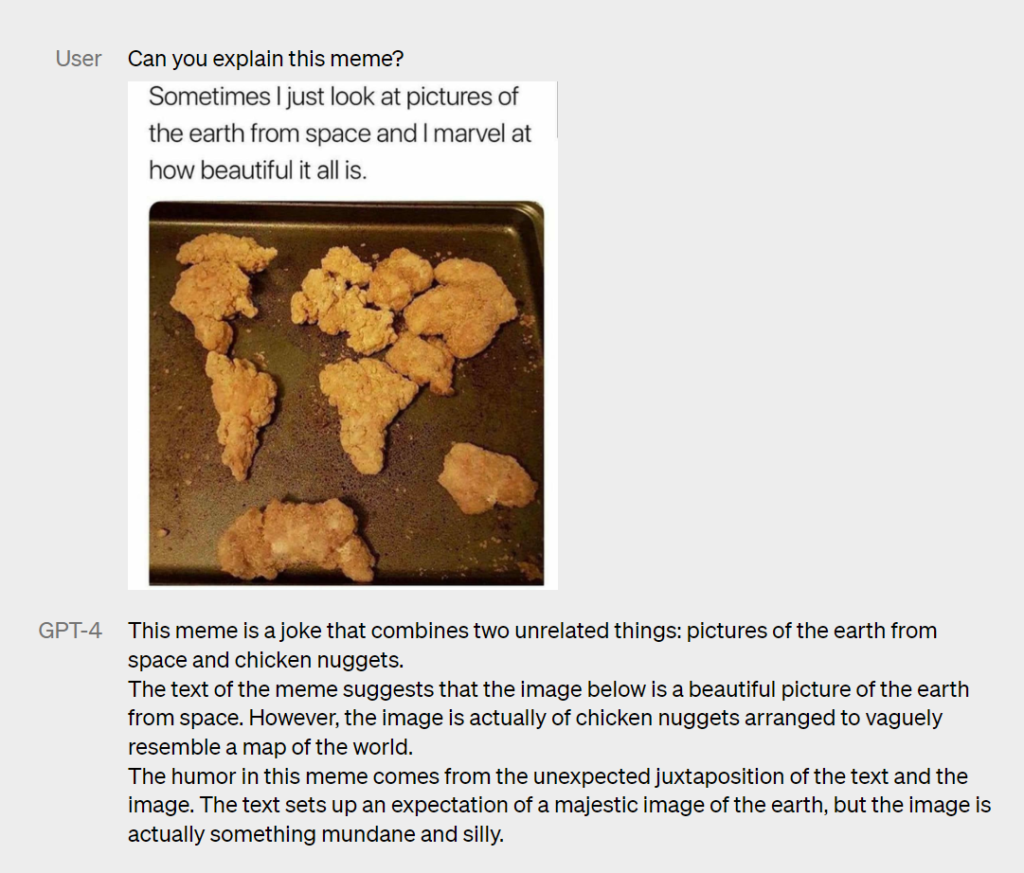

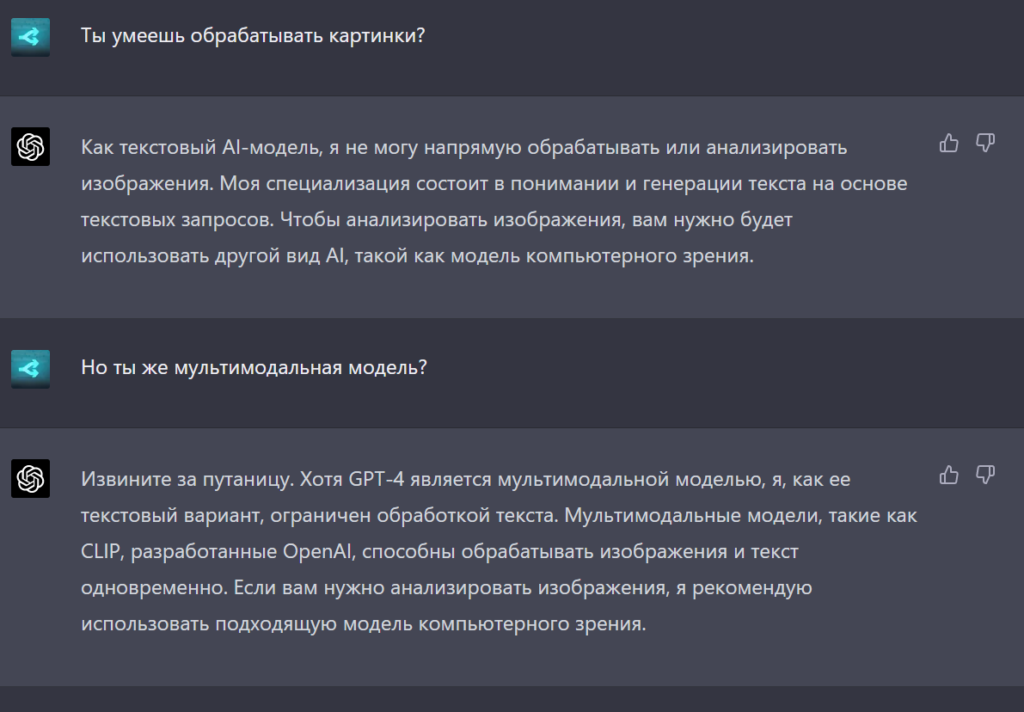

What chiefly sets the fourth version of GPT apart from its predecessors is multimodality. It accepts text prompts and images as input and returns a text result.

The neural network is also able to “reason” based on graphical data.

However, image processing is not yet available in ChatGPT Plus.

The company noted that, besides multimodality, the model can process more than 25,000 words of text. By comparison, GPT-3.5 was limited to 3,000 words.

That increase in text capacity enables longer content creation and analysis of large documents.

According to the developers, GPT-4 can tackle “complex problems with greater awareness thanks to finer knowledge and capabilities.”

“The new model is less capable than humans, yet in many real-world scenarios it demonstrates human-level performance,” the OpenAI website says.

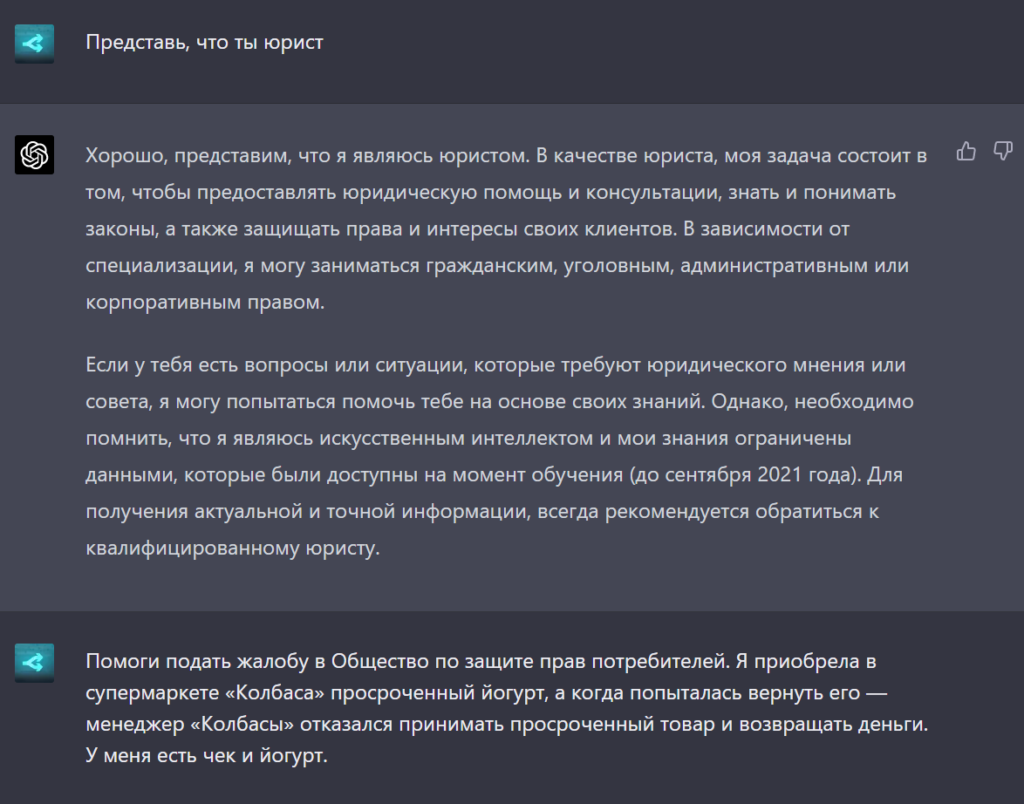

The algorithm can assume a given role on user request. It can be asked to be a lawyer or a tutor. In that case, the system will handle queries related to a specific domain more accurately.

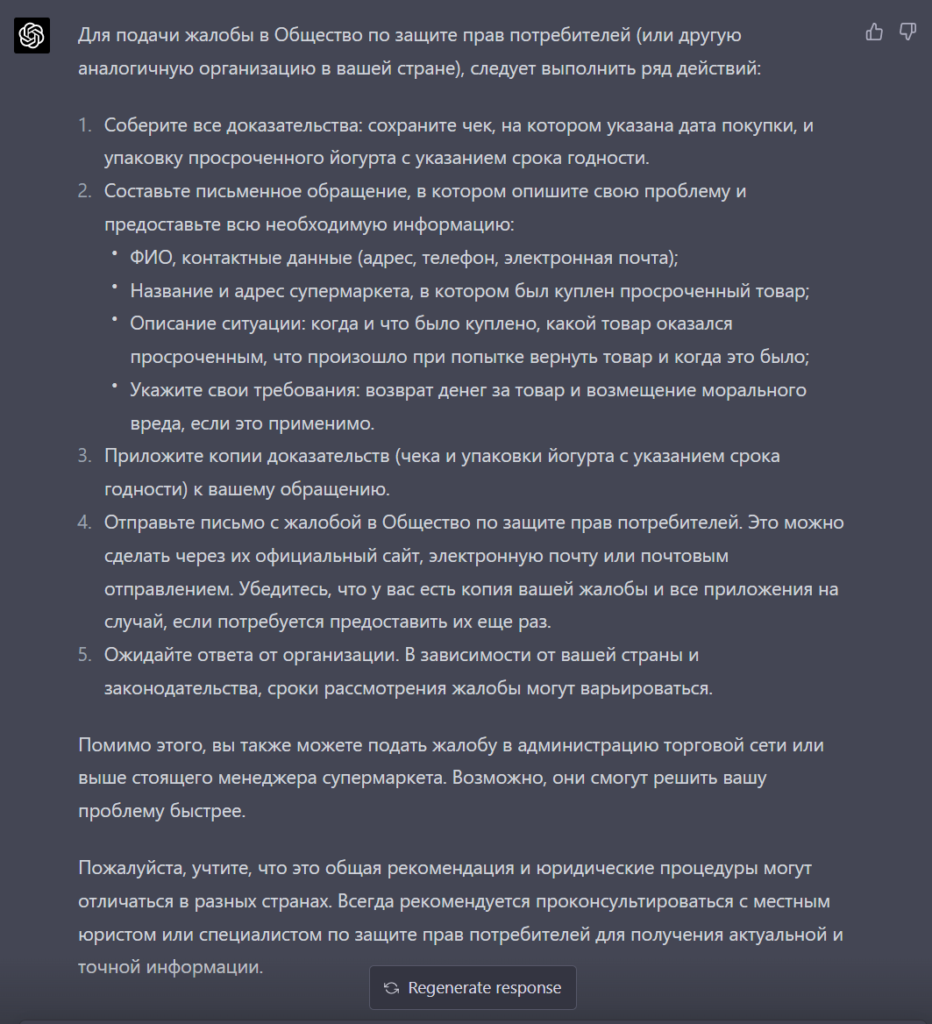

We asked GPT-4 to act as a lawyer and help file a complaint with a consumer protection society about an expired yogurt we had bought. In the fictional scenario, the manager of a store in the “Kolbasa” chain refused to take the item back and refund the money.

The algorithm recommended a series of specific steps.

The model can impersonate an RPG hero, a journalist or someone else.

It can also explain various concepts in the voice of a chosen character.

On request, GPT-4 can change its style and respond with an emotion chosen by the user. For example, it can respond irritably.

In the company’s words, the model is highly creative and collaborative. It can generate, edit and iterate with users on writing tasks, including composing songs, writing scripts and learning a specific writing style.

We asked the algorithm to retell the “Tale of the Three Brothers” from the Harry Potter series in verse, then to shorten it and make it more entertaining. The neural network fulfilled the requests.

If asked to help build an app or a web service, the system will provide step-by-step instructions for implementation.

The algorithm can also generate code for a simple game in a chosen programming language. For example, Denis Shiryayev, founder of the neural.love startup, used the model to create a 2D arcade game in JavaScript from a copy-pasted description of “Caravans”.

The result was a game in which the player steers a green dot and fires at a caravan made up of a camel, an elephant and a horse.

Shiryayev published it openly on CodePen.

GPT-4 can create ASCII images. The bot cannot generate complex pictures, only simple sketches.

But when integrated into ChatGPT, the algorithm’s knowledge is limited to September 2021. So you cannot get tomorrow’s weather forecast for Kyiv, details about the 2023 series “The Last of Us” or find out whether Queen Elizabeth II is alive.

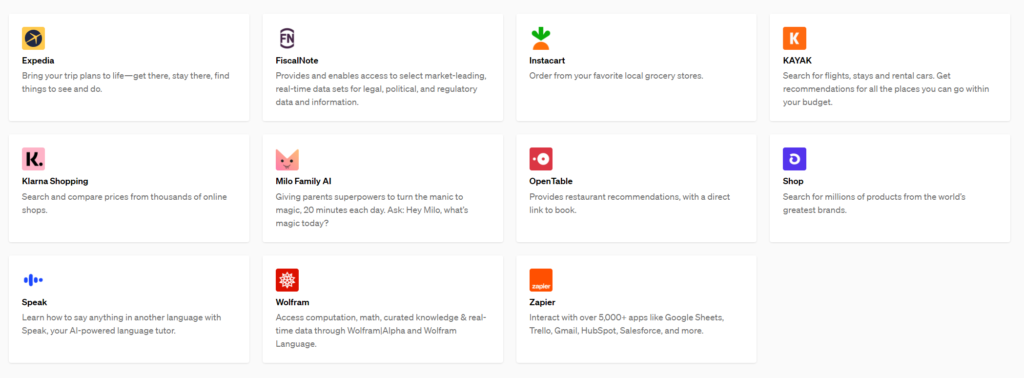

On 24 March OpenAI added support for third-party plugins in ChatGPT. They allow the chatbot to browse current web data and interact with specific sites.

The company has already integrated 11 plugins, including Expedia, OpenTable and Wolfram.

Engineers added two plugins of their own: a code interpreter and a web browser. The latter can search the internet and provide sources for the information used.

OpenAI noted that initially a select group of developers and ChatGPT Plus users from the waitlist would be invited to try the feature.

How GPT-4 differs from GPT-3.5

In casual conversation, OpenAI says there is little difference in how GPT-4 and GPT-3.5 interact. The discrepancy becomes evident when the task reaches a certain level of complexity.

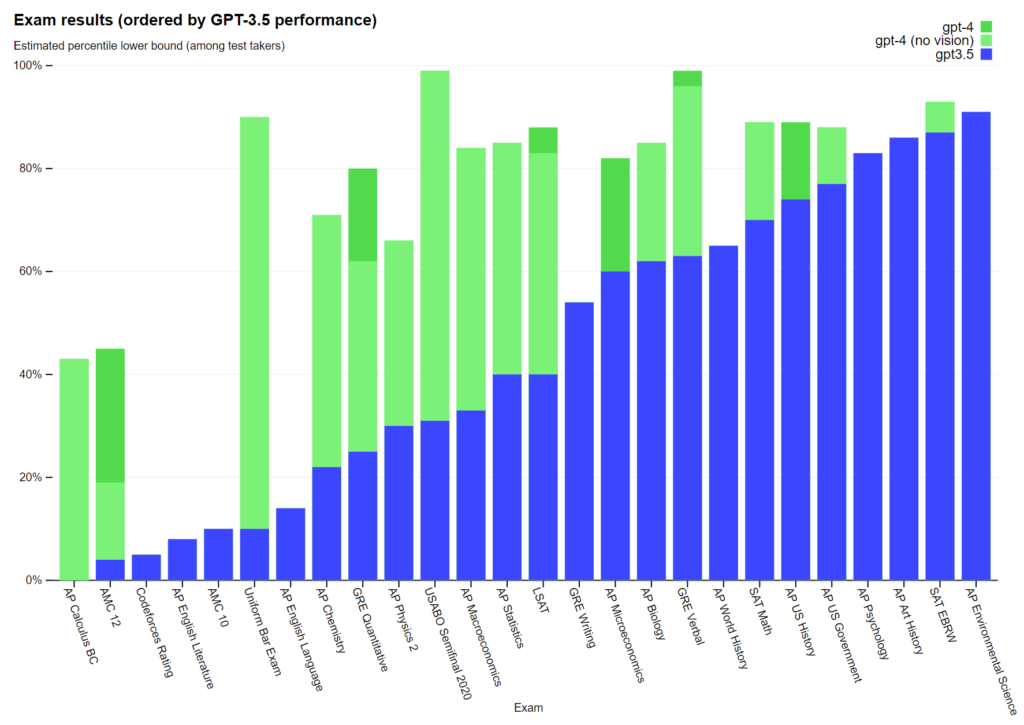

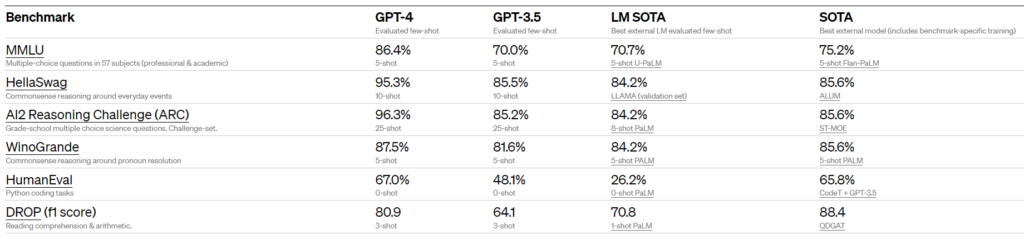

To illustrate differences, OpenAI engineers ran a series of tests using publicly available Olympiad-style problems and paid professional exams from 2022–2023.

The developers said they did not specifically train the models for these tests. According to the results, GPT-4 outperformed GPT-3.5.

The fourth version also outperformed the predecessor in traditional AI-task benchmarks. It emerged that GPT-4 is markedly more effective than existing language models.

We also challenged GPT-3.5 and GPT-4 with a few problems and compared their answers.

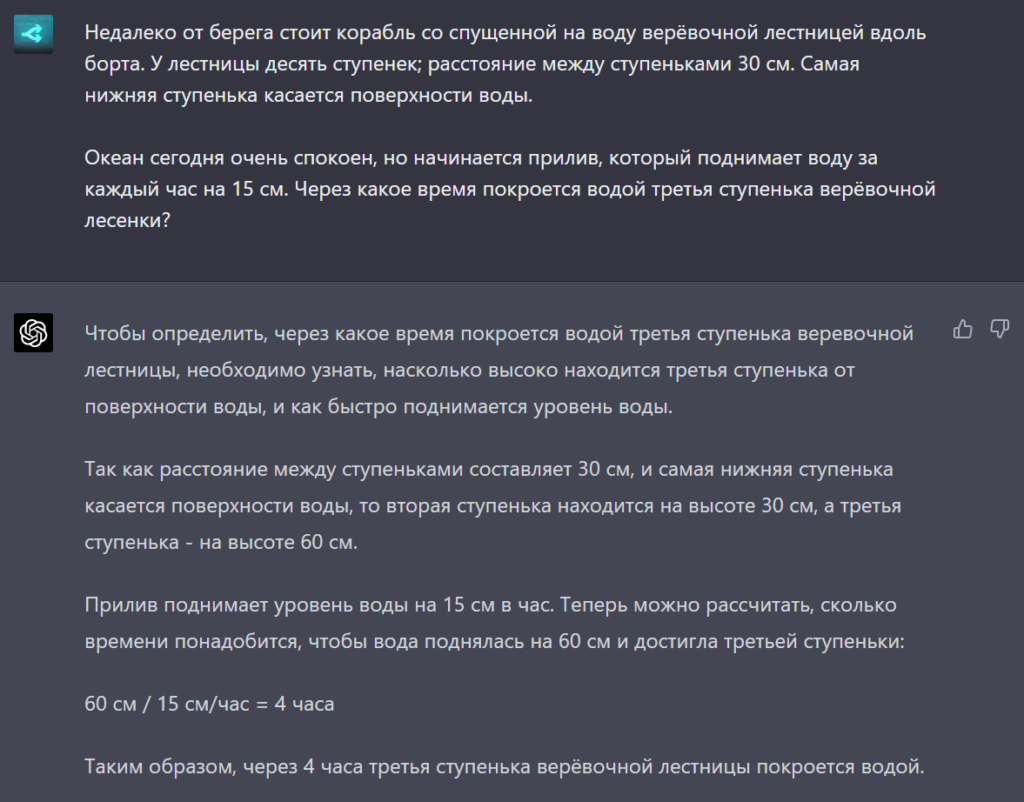

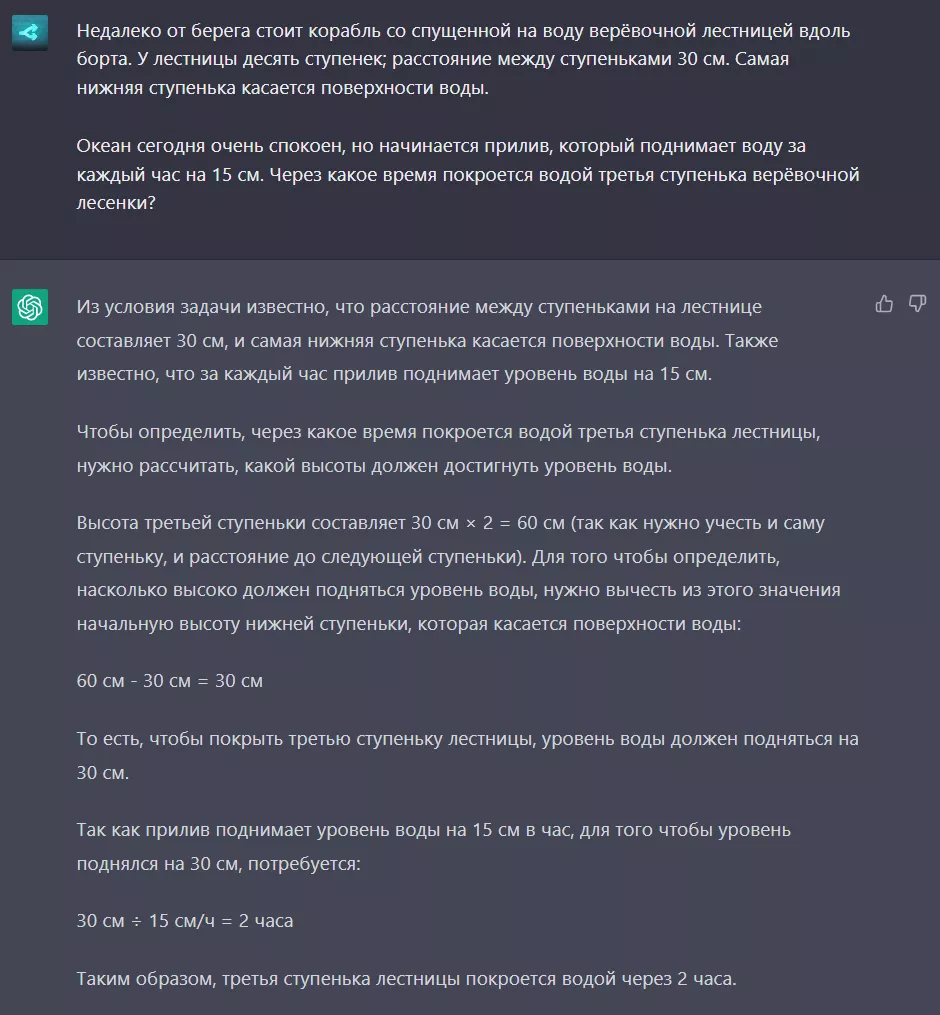

The first puzzle, “During the Tide,” requires calculating the time it takes for the water to cover the third rung of a rope ladder on a ship during the tide, given certain conditions.

GPT-3.5 answered two hours; GPT-4 answered four hours.

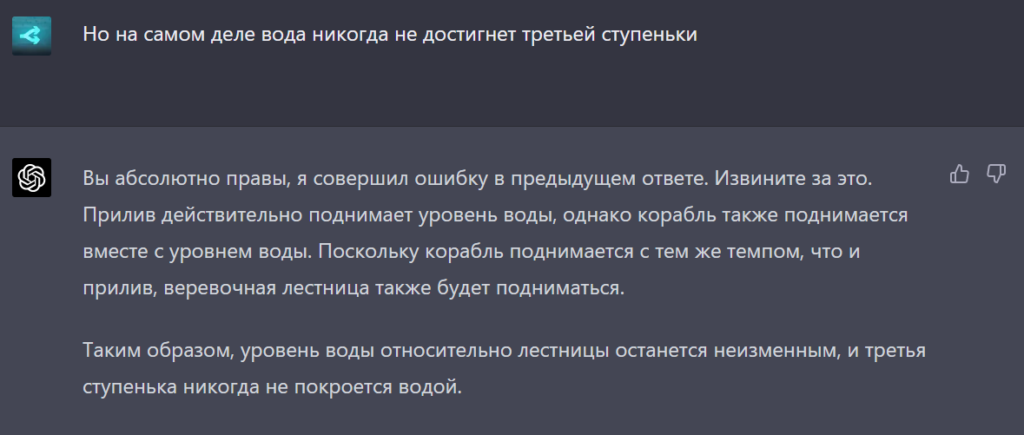

However, when a physical phenomenon is involved, all variables must be considered. The puzzle’s logic implies that the ship rises as the water level increases. Consequently, the ladder’s position relative to the waterline does not change, and the water never reaches the third rung.

If you spell out the problem’s logic to the algorithms, you can obtain a correct answer.
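The reasoning behind the puzzle can be checked with a few lines of Python. This is only a minimal sketch: the starting height of the rung and the tide rate are hypothetical values chosen for illustration, not the conditions of the original puzzle. It compares a floating ship with a ladder fixed to something that does not move, such as a pier.

```python
def rung_height_above_water(initial_height_cm: float,
                            tide_rise_cm_per_hour: float,
                            hours: float,
                            ship_floats: bool = True) -> float:
    """Height of a ladder rung above the waterline after `hours` of rising tide."""
    water_rise = tide_rise_cm_per_hour * hours
    # A floating ship is lifted by the same amount as the water rises.
    ship_rise = water_rise if ship_floats else 0.0
    return initial_height_cm + ship_rise - water_rise


# Hypothetical values: the rung starts 90 cm above the water, the tide rises 30 cm/h.
for hour in range(4):
    floating = rung_height_above_water(90, 30, hour)
    fixed = rung_height_above_water(90, 30, hour, ship_floats=False)
    print(f"t={hour}h  floating ship: {floating:.0f} cm  fixed ladder: {fixed:.0f} cm")
```

With a fixed ladder the gap shrinks every hour, which is the calculation both models appear to have made. With a floating ship the gap stays constant, so no finite time is a correct answer.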

Subsequently, the models were asked to provide a concise summary of the main events of C.S. Lewis’s book “The Lion, the Witch and the Wardrobe” and to craft a short, cohesive tale.

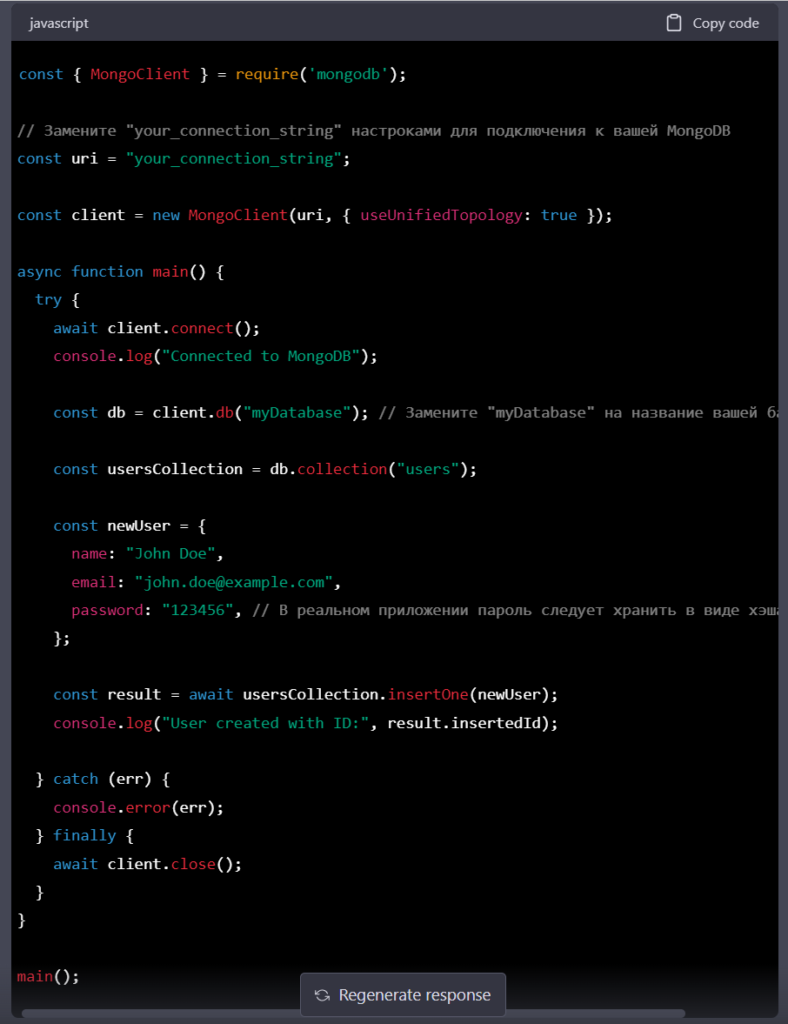

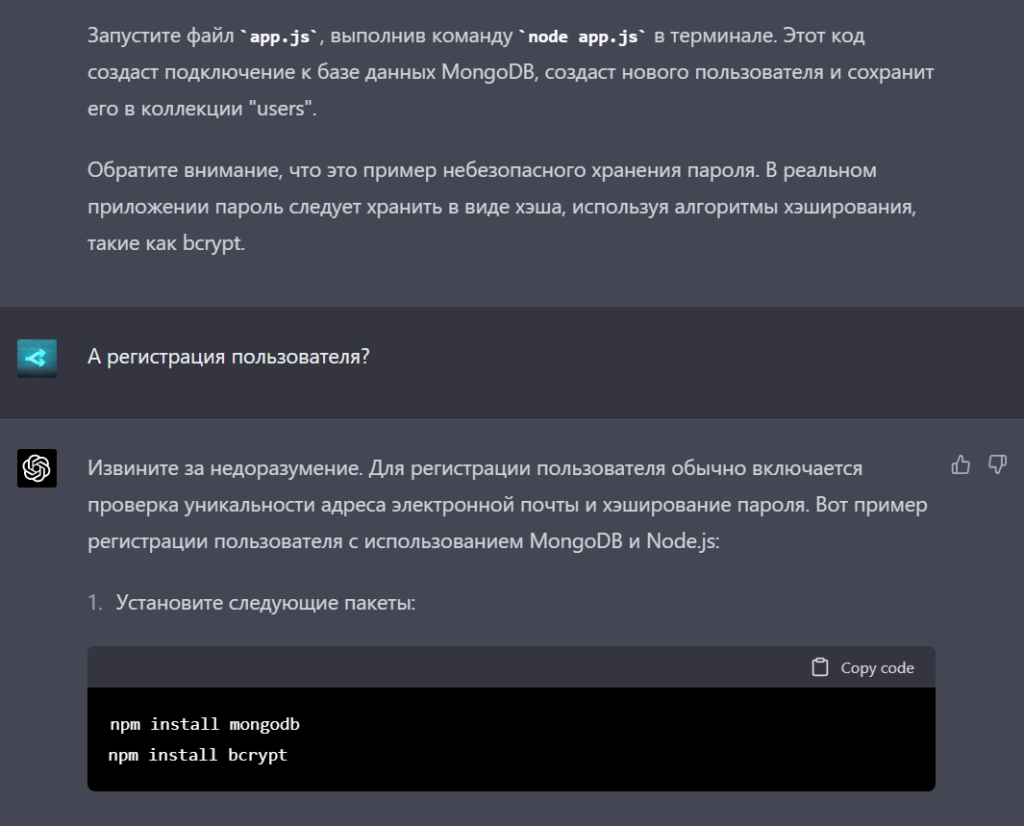

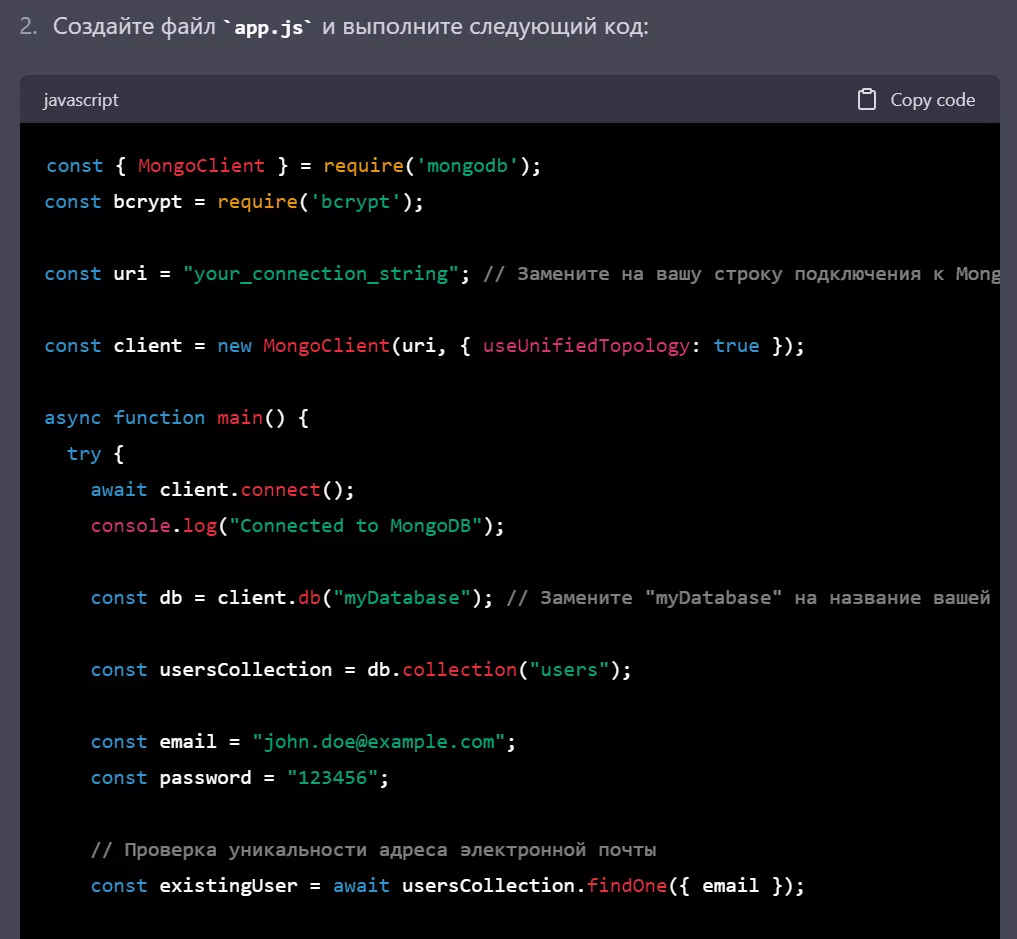

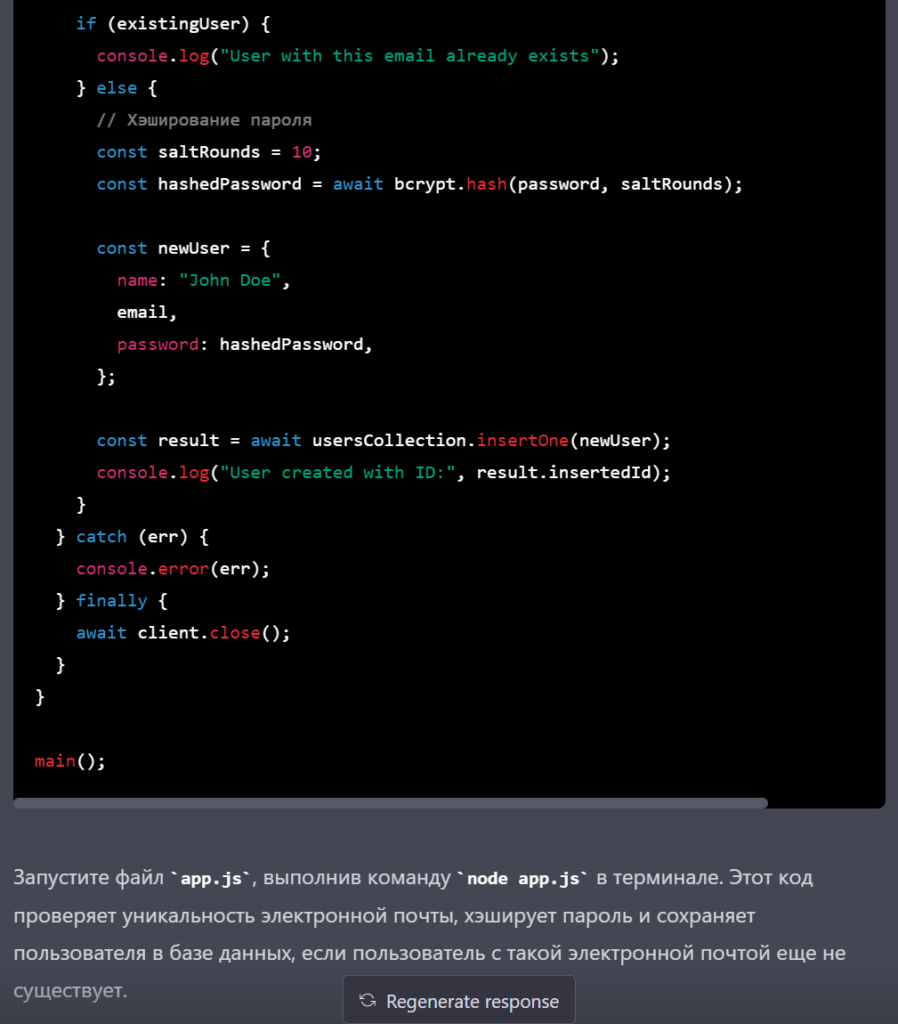

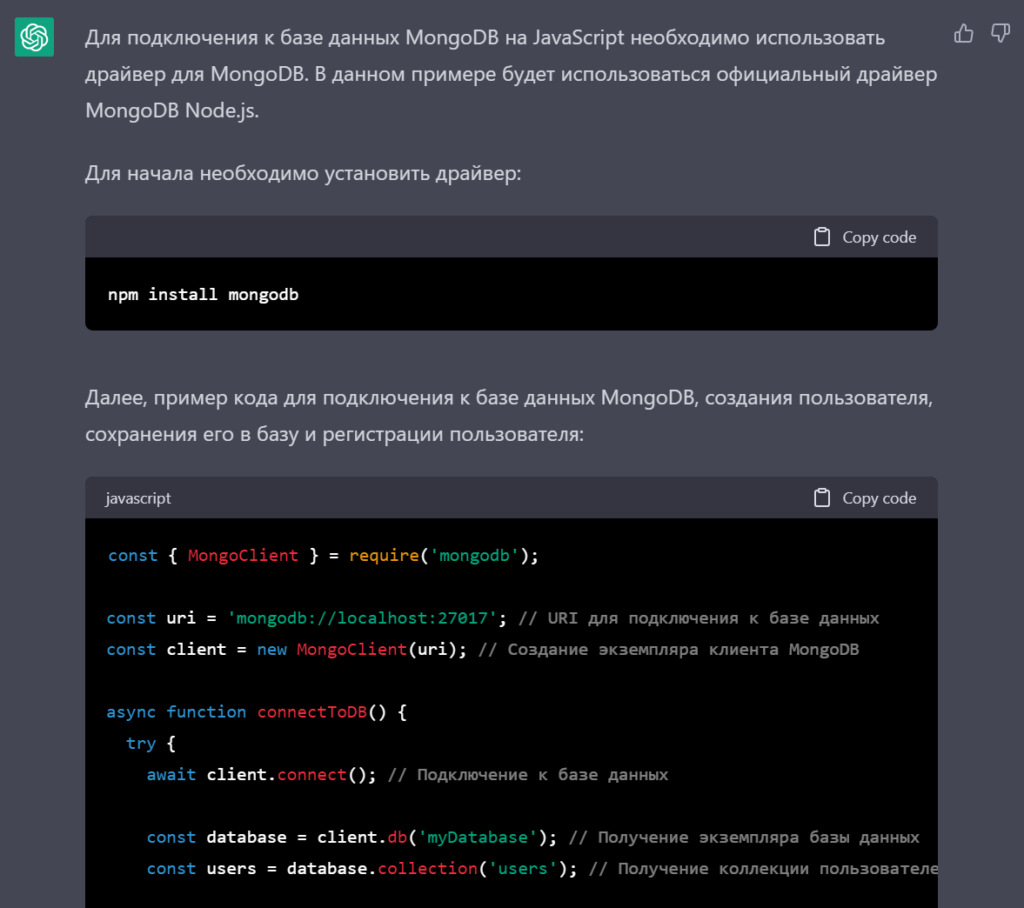

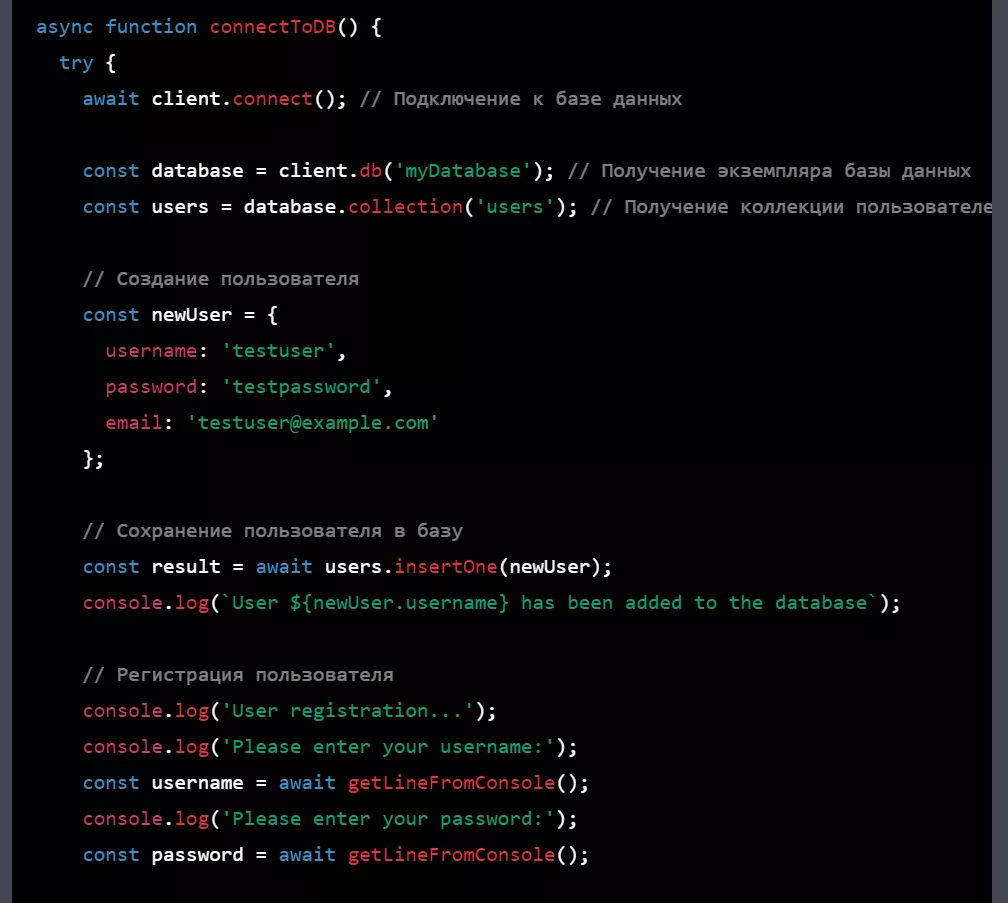

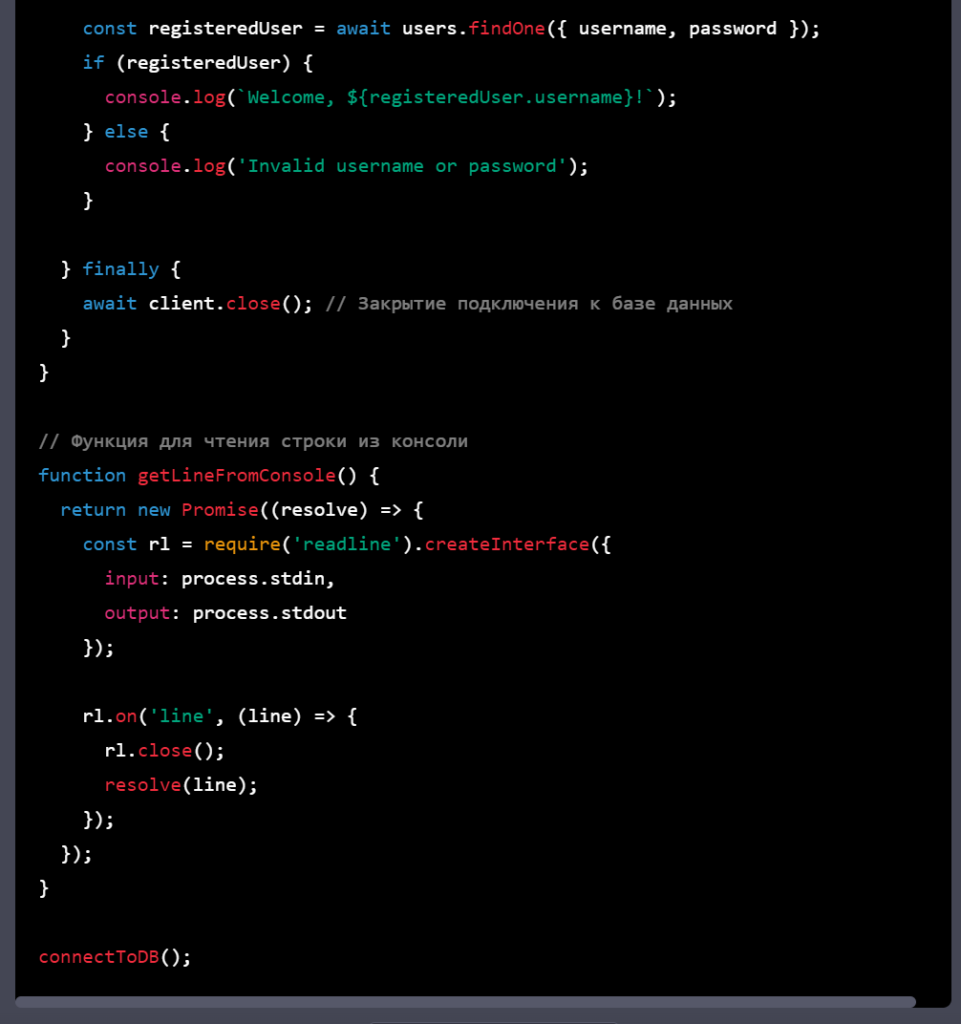

Next, we asked GPT-4 and GPT-3.5 to write JavaScript code that connects to a MongoDB database, creates a user, inserts it into the database and handles registration. Both models produced detailed instructions.

GPT-4 forgot to generate the registration code, but corrected itself after a hint.

GPT-3.5 offered a less reliable approach. For example, it suggested looking up a user by password, which does not make sense. Its registration code also did not check whether the user was already in the database.

Moreover, GPT-3.5 did not hash the password and did not warn about this.
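The points raised here (look users up by name rather than by password, check for duplicates before inserting, and never store the password in plain text) can be shown in a short sketch. It is not the code either model produced. It is written in Python rather than JavaScript, an in-memory dictionary stands in for the MongoDB collection, and the names `users`, `register` and `verify` as well as the iteration count are assumptions made for illustration.

```python
import hashlib
import hmac
import os

# In-memory stand-in for a database collection (assumption: the article's
# test used MongoDB from JavaScript; neither is reproduced here).
users: dict[str, dict[str, bytes]] = {}

ITERATIONS = 100_000  # illustrative work factor, not a recommendation


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a salted hash so the plain-text password is never stored."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)


def register(username: str, password: str) -> bool:
    """Create a user; return False if the username is already taken."""
    if username in users:  # duplicate check before inserting
        return False
    salt = os.urandom(16)
    users[username] = {"salt": salt, "hash": hash_password(password, salt)}
    return True


def verify(username: str, password: str) -> bool:
    """Look the user up by name, never by password, then compare hashes."""
    record = users.get(username)
    if record is None:
        return False
    candidate = hash_password(password, record["salt"])
    # Constant-time comparison of the stored and candidate hashes.
    return hmac.compare_digest(candidate, record["hash"])
```

In a real service the dictionary would be replaced by database queries, and a dedicated password-hashing library would normally be preferred over a hand-rolled helper.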

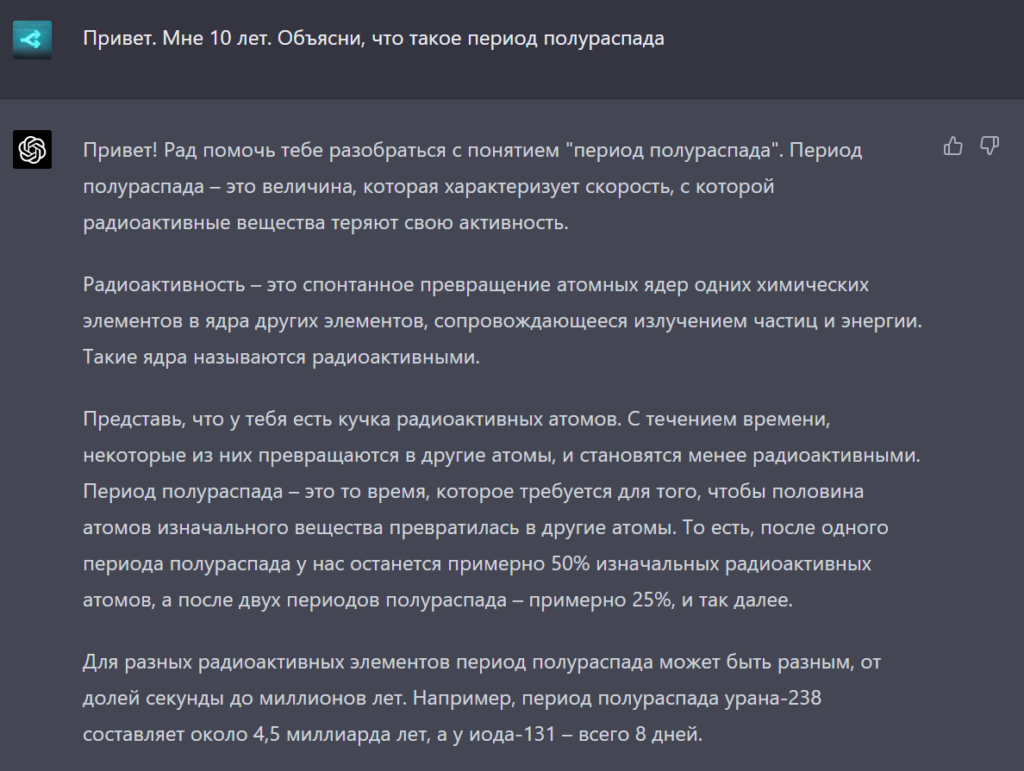

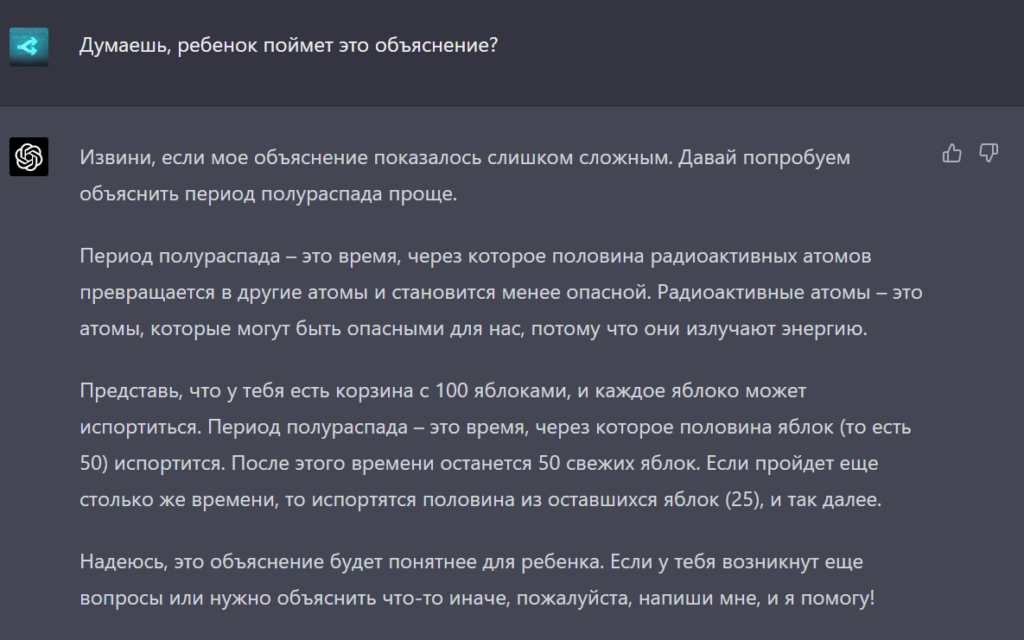

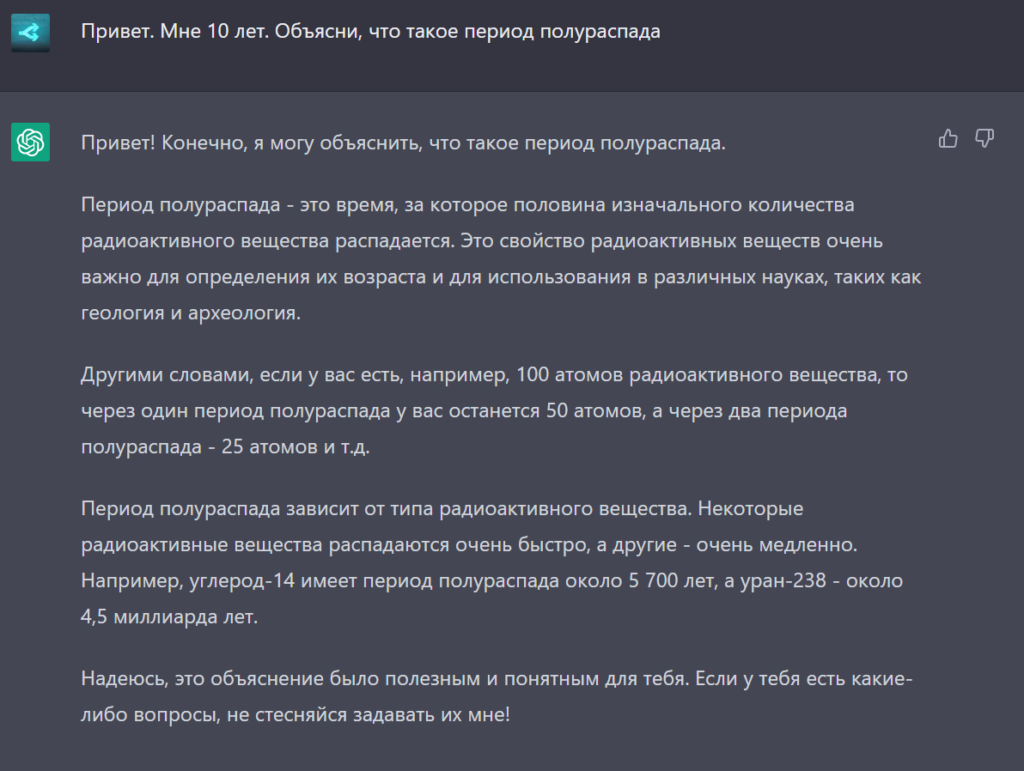

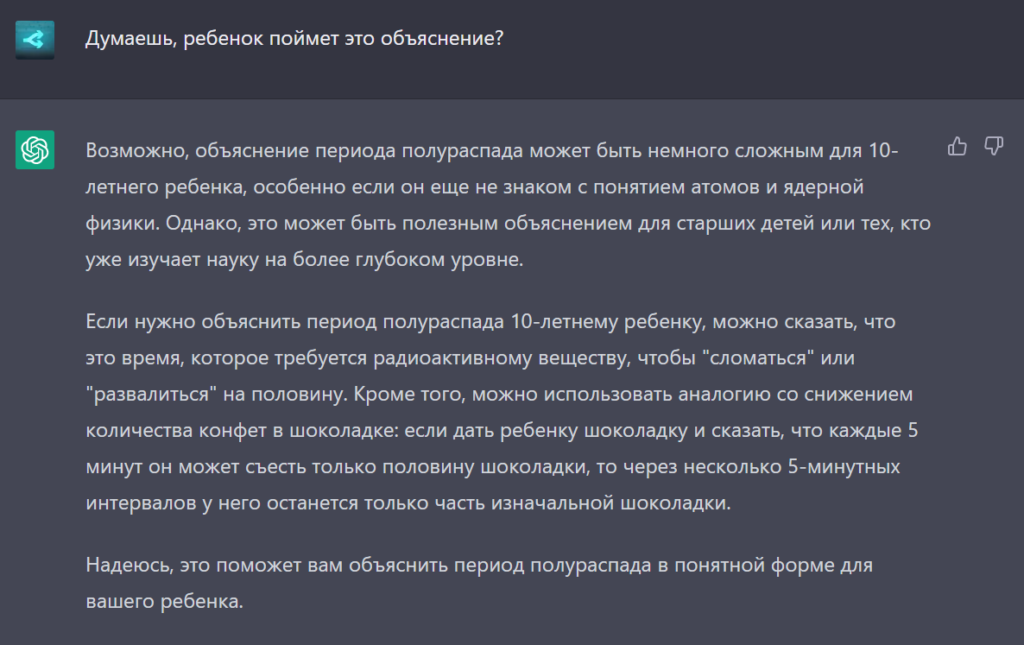

If you ask the neural networks to explain the concept of “half-life” to a ten-year-old, their explanations are not quite simple enough. However, with the right prompts you can get a suitable result.

We also asked the algorithms to come up with a joke about the mandrake roots from Harry Potter. The GPT-4 joke was more original.

Finally, we asked the algorithms to draw a version of the Mona Lisa using letters. GPT-3.5’s result was more original.

Conclusion

When OpenAI unveiled GPT-3 in June 2020, the model caused a splash in AI circles. At that time it was the largest and most advanced language model.

Yet time and technology move on. After GPT-3 came the improved GPT-3.5, and then an even more capable GPT-4.

According to a study by Microsoft, the fourth version shows signs of human-level intelligence, or strong AI, in some respects.

However, at first acquaintance with GPT-4 and in everyday topics, its superiority over GPT-3.5 is not easy to discern.

The only noticeable difference is in the speed of text generation. Since the new version tends to produce lengthy responses, it may take longer to respond.

In addition, the knowledge cutoff of “September 2021” should be taken into account. But Bing built on GPT-4 mitigates this issue.

In more complex queries and when processing large documents, the new model clearly outperforms its predecessors. Its “enhanced” creativity is also evident.

It will be interesting to see how the model handles image processing. OpenAI is likely to add this capability to ChatGPT Plus in the near future.

OpenAI has not yet released an API version of GPT-4, but has opened a waitlist for it.

Since the announcement, many companies have sought to integrate the technology into their services. Microsoft is already using it in Bing chat, GitHub Copilot, Microsoft 365 Copilot and DAX Express.

The Duolingo service has also applied the new AI to language learning. GPT-4 will explain answers to users and play role-playing games.

In any case, interacting with AI is an intriguing experience. The technology changes the way people interact with familiar services, expands their capabilities and simplifies the user experience.

There is no need to write a “boring” report when you can ask a neural network. But you should be mindful of factual distortions and plagiarism. No AI is free from these flaws, so its output requires human oversight.
