Nvidia unveils new chips and AI services

At its GTC 2022 conference, Nvidia unveiled processors for AI workloads, along with a range of services for developers.

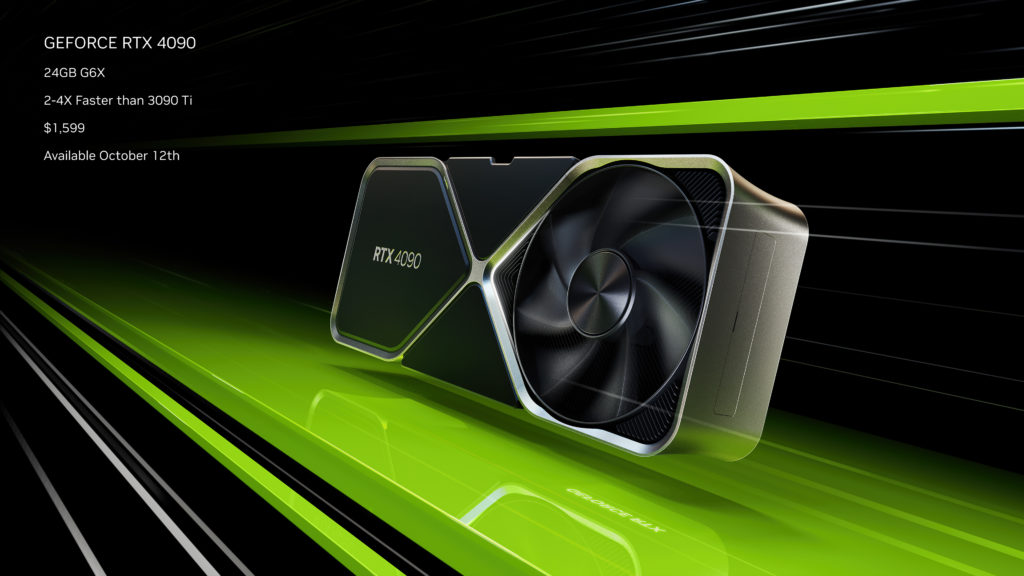

Jensen Huang, the tech giant's CEO, presented the new generation of GeForce RTX 40 Series graphics cards. The accelerators are built on the Ada Lovelace architecture and come in two models: the RTX 4090 and the RTX 4080, the latter in two memory configurations.

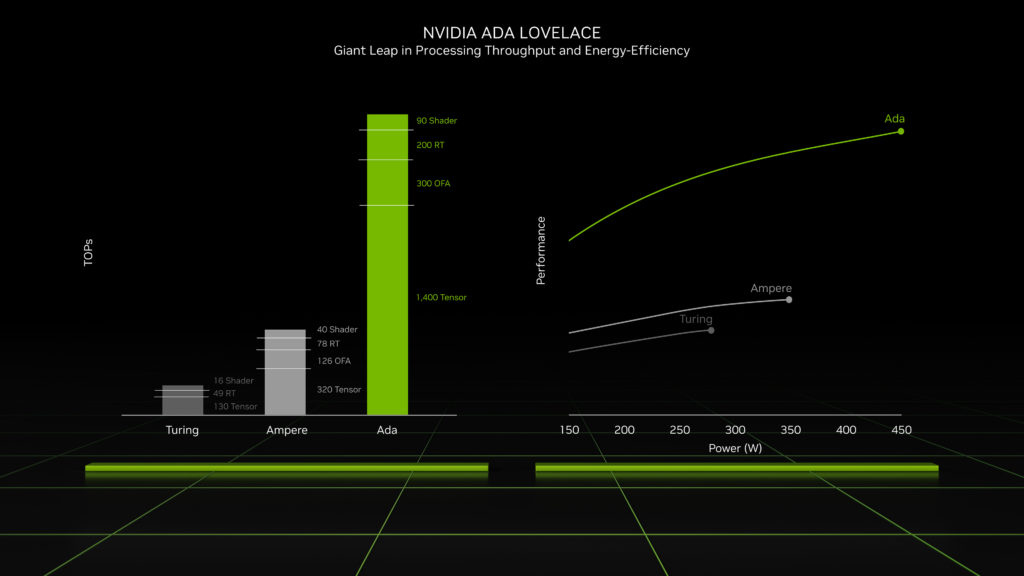

The chips are manufactured on a 4-nm process and contain up to 76 billion transistors. The architecture's key features are third-generation RT cores and fourth-generation Tensor cores.

The GeForce RTX 4090 is equipped with 24 GB of GDDR6X memory. According to Huang, the accelerator contains 16,384 CUDA cores and is up to four times faster than the RTX 3090.

The new model will go on sale on October 12, starting at $1,599.

The GeForce RTX 4080 16 GB has 9,728 CUDA cores, while the 12 GB edition has 7,680.

Both cards will go on sale in November, priced at $1,199 and $899, respectively.

Huang also announced DLSS 3, a new version of the company's AI-powered game upscaler. According to him, in some games the algorithm raises the frame rate up to fourfold without loss of image quality or smoothness.

DLSS 3 will be available only on the GeForce RTX 40 Series.

Huang also announced that Nvidia H100 server chips have entered mass production. He said the new accelerators are 3.5 times more energy-efficient than their predecessor, which lowers the total cost of ownership and lets operators use fewer server nodes for the same AI performance.

Huang also announced Drive Thor, a system-on-a-chip (SoC) for autonomous vehicles. According to the company, the platform will deliver up to 2,000 teraflops of performance.

The first vehicles powered by the Drive Thor SoC are not expected before 2025.

Nvidia announced services for working with large language models. NeMo LLM is designed for text generation and summarization, while BioNeMo LLM targets protein structure prediction, drug development, and other medical research.

The company also introduced Omniverse Cloud Services, a cloud platform for building and operating AI and metaverse applications. It includes:

- Omniverse Nucleus Cloud: a platform for creating 3D objects and scenes;

- Omniverse App Streaming: an application streaming service that runs on RTX accelerators;

- Omniverse Replicator: a synthetic data generator;

- Omniverse Farm: a platform for running multiple cloud compute instances;

- NVIDIA Isaac Sim: a tool for robotics simulation and synthetic data generation;

- NVIDIA DRIVE Sim: a platform for simulating autonomous vehicles.

Some of the platform's services are already available to users.

In March, Nvidia unveiled the H100, its new generation of server AI chips, and the Grace CPU Superchip.

In April, the tech giant revealed that it uses artificial intelligence in GPU design.

In September, the US government restricted the export of Nvidia H100 chips to Russia and China.
