Nvidia Unveils New Technologies at GTC 2024

Nvidia has announced a new generation of AI chips and software designed for large language models. The presentation took place at the GTC 2024 conference.

According to the company’s CEO, Jensen Huang, Nvidia updates its GPU architecture every two years to enhance performance. Many previously released AI models have been trained on the Hopper architecture, which is used in chips like the H100, announced in 2022.

“Hopper is fantastic, but we need more powerful GPUs,” stated the company’s CEO.

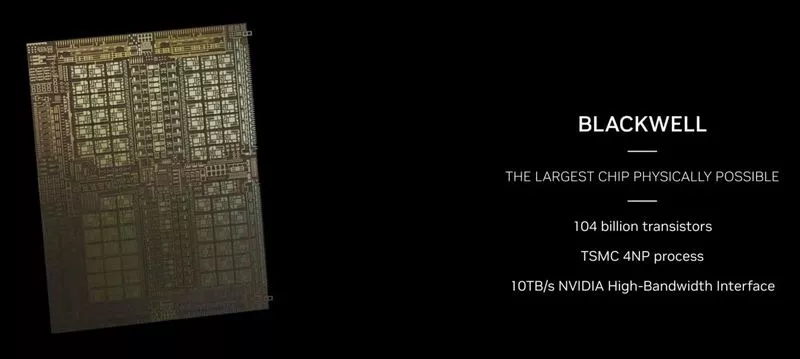

The new generation of AI graphics processors is named Blackwell. The first model, GB200, will be released later this year.

Nvidia claims the new processors offer a significant performance boost for companies working with neural networks: 20 petaflops with the new chips, compared with 4 petaflops for the H100. According to Huang, the additional computational power will enable AI companies to train larger and more complex models.

For instance, training GPT-4 in 90 days required 8,000 previous-generation chips and 15 MW of power. With the new graphics processors, the same job requires only 2,000 chips and 4 MW.
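The figures quoted above can be put side by side with some simple arithmetic. The sketch below is only a back-of-the-envelope illustration: it uses the numbers from the presentation as reported here, and it assumes that the Blackwell run also lasts 90 days and that power draw is constant for the whole period. The function and variable names are made up for this example.

```python
# Rough comparison built only from the figures quoted in the article.
# Assumptions (not from the source): the Blackwell run also takes 90 days,
# and power draw is constant over the whole training period.

HOURS_PER_DAY = 24
TRAINING_DAYS = 90


def energy_gwh(power_mw: float, days: float) -> float:
    """Energy in gigawatt-hours for a constant power draw over `days`."""
    return power_mw * days * HOURS_PER_DAY / 1000  # MWh -> GWh


# Cluster-level figures: number of chips and total power.
hopper = {"chips": 8000, "power_mw": 15.0}
blackwell = {"chips": 2000, "power_mw": 4.0}

# Per-chip throughput figures, in petaflops.
h100_pflops = 4.0
blackwell_pflops = 20.0

chip_ratio = hopper["chips"] / blackwell["chips"]         # fewer chips needed
power_ratio = hopper["power_mw"] / blackwell["power_mw"]  # less power needed
pflops_ratio = blackwell_pflops / h100_pflops             # faster per chip

hopper_energy = energy_gwh(hopper["power_mw"], TRAINING_DAYS)
blackwell_energy = energy_gwh(blackwell["power_mw"], TRAINING_DAYS)

print(f"Chips needed:      {chip_ratio:.2f}x fewer")
print(f"Power needed:      {power_ratio:.2f}x less")
print(f"Per-chip speed:    {pflops_ratio:.2f}x higher")
print(f"Energy over {TRAINING_DAYS} days: "
      f"{hopper_energy:.2f} GWh vs {blackwell_energy:.2f} GWh")
```

Under these assumptions, the claimed saving is roughly a fourfold reduction in both chip count and energy, which is in line with the fivefold per-chip performance figure.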

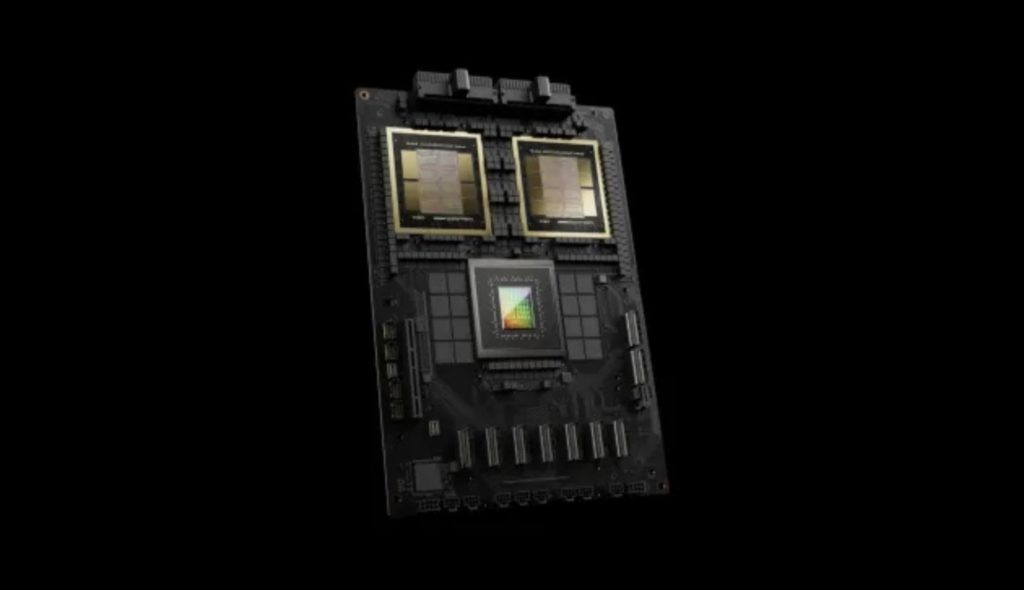

It was also revealed that the GB200 will have 192 GB of memory with a bandwidth of 8 TB/s.

“Blackwell is not just a chip, it’s a whole platform,” noted Huang.

The graphics processor combines two separately manufactured dies into a single chip, produced by TSMC. Together, they contain 208 billion transistors.

The chip will also be available as a complete server called GB200 NVL72, which combines 72 Blackwell graphics processors. Its total memory will be 30 TB.

The company will sell access to the GB200 through cloud services. Nvidia announced that Amazon Web Services will build a server cluster consisting of 20,000 GB200 chips.

Huang also announced that a new product, Nvidia Inference Microservice (NIM), is being added to the company’s enterprise software subscription. It makes it easier to use older Nvidia graphics processors for inference, that is, for running already trained AI models.

Back in January 2024, Mark Zuckerberg promised to purchase Nvidia chips for Meta, as the “future roadmap” in artificial intelligence requires a “vast computational infrastructure.”
