OpenAI trains language model to solve elementary math problems

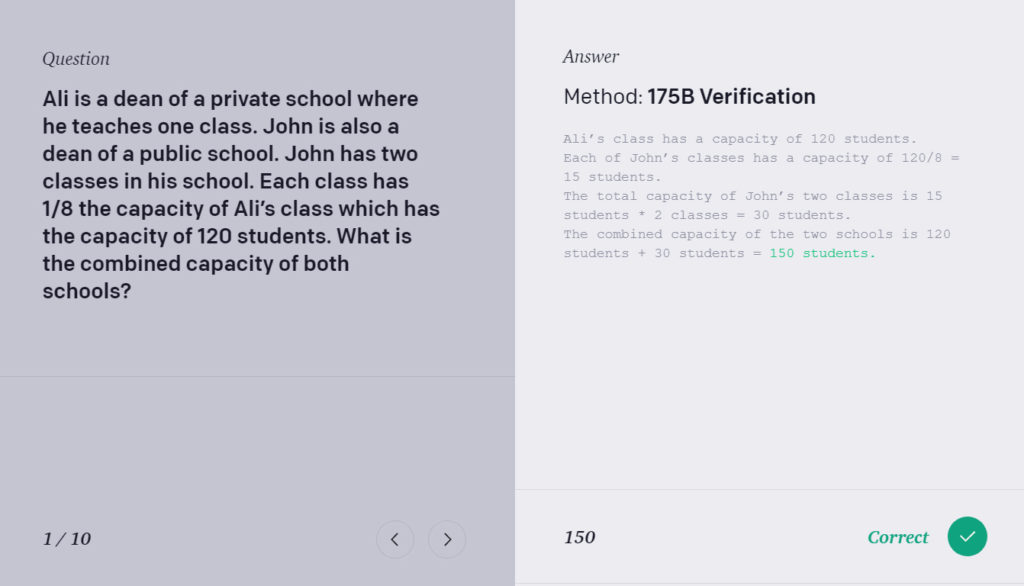

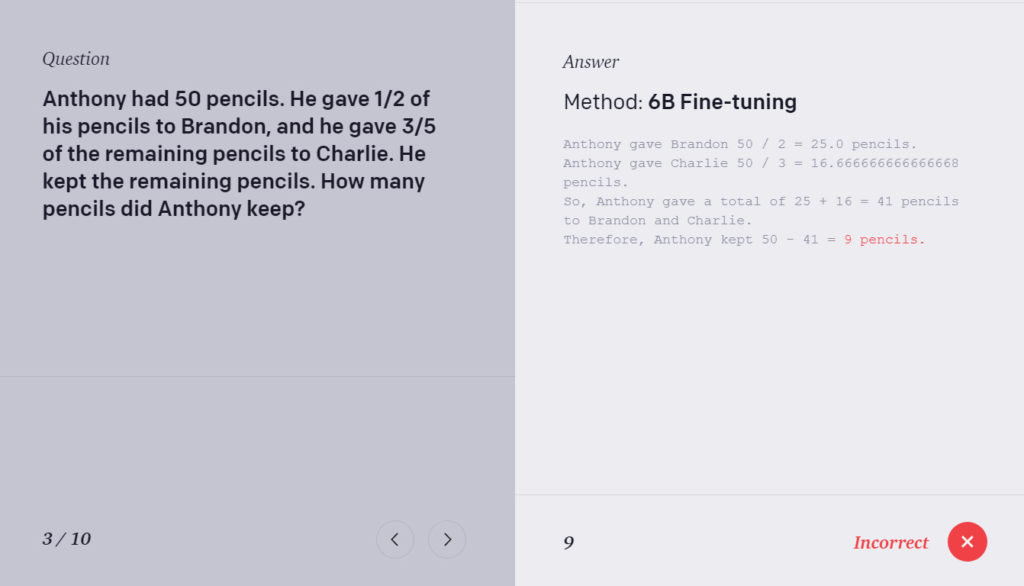

OpenAI researchers have trained an artificial intelligence system to solve basic grade-school math problems presented in text form.

We’ve trained a system to answer grade-school math problems with double the accuracy of a fine-tuned GPT-3 model.

Multistep reasoning is difficult for today’s language models. We present a new technique to help. https://t.co/JRXUYZOSg7

— OpenAI (@OpenAI) October 29, 2021

The system reached 55% accuracy, twice that of a fine-tuned GPT-3 model. For comparison, schoolchildren aged 9 to 12 scored 60% on the same test.

According to the developers, the result is significant:

“Modern AI is still quite weak at multi-step reasoning, which is easily mastered even by schoolchildren.”

Researchers achieved these results by training the model to recognise its mistakes and keep searching for the correct answer until it finds a working solution.
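The idea of checking candidate answers and continuing to search can be illustrated with a minimal sketch. It assumes the approach amounts to sampling several candidate solutions and keeping the one that a separate scoring function rates highest. The names `toy_generate`, `toy_verify` and `best_of_n` are hypothetical stand-ins, not OpenAI's code: a real system would use a language model as the generator and a trained model as the scorer.

```python
import random
from typing import Callable, List

Solution = List[str]  # each step looks like "48 / 2 = 24"


def toy_generate(rng: random.Random) -> Solution:
    """Hypothetical generator: writes a two-step solution, sometimes with a slip."""
    half = 48 // 2
    if rng.random() < 0.5:
        half += rng.choice([-2, -1, 1, 2])  # simulated arithmetic mistake
    return [f"48 / 2 = {half}", f"48 + {half} = {48 + half}"]


def toy_verify(solution: Solution) -> float:
    """Hypothetical scorer: fraction of steps whose arithmetic checks out."""
    ok = 0
    for step in solution:
        expr, claimed = step.split("=")
        a, op, b = expr.split()
        value = {"+": int(a) + int(b), "/": int(a) / int(b)}[op]
        ok += value == float(claimed)
    return ok / len(solution)


def best_of_n(
    generate: Callable[[random.Random], Solution],
    verify: Callable[[Solution], float],
    n: int,
    seed: int = 0,
) -> Solution:
    """Sample n candidate solutions and return the one the scorer rates highest."""
    rng = random.Random(seed)
    candidates = [generate(rng) for _ in range(n)]
    return max(candidates, key=verify)


best = best_of_n(toy_generate, toy_verify, n=50)
```

The design point is that the generator never has to be right on the first try: as long as at least one sampled candidate is sound and the scorer can tell it apart from the flawed ones, the selected answer is correct.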

The researchers also introduced GSM8K, a dataset assembled to train the system. It comprises 8,500 high-quality grade-school math word problems. Each takes two to eight steps to solve, and every step involves elementary arithmetic.
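To make "two to eight steps of elementary arithmetic" concrete, here is a small sketch that works through a made-up problem of that kind. The problem is hypothetical and not taken from GSM8K: a baker makes 3 trays of 12 muffins, sells 20, and packs the rest into boxes of 4. How many boxes are filled? The helper `run_steps` is likewise illustrative.

```python
from operator import add, mul, sub, truediv

OPS = {"+": add, "-": sub, "*": mul, "/": truediv}


def run_steps(start, steps):
    """Apply a chain of (operator, operand) steps and record each intermediate result."""
    value = start
    trace = []
    for op, operand in steps:
        value = OPS[op](value, operand)
        trace.append(value)
    return trace


# 3 trays * 12 muffins, minus 20 sold, divided into boxes of 4.
trace = run_steps(3, [("*", 12), ("-", 20), ("/", 4)])
```

Each entry in `trace` is one intermediate result, and the last entry is the final answer. A mistake in any single step carries through to the end, which is what makes such multi-step problems hard for a model.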

The GSM8K dataset is publicly available on GitHub.

In October, OpenAI developed a model for generating short excerpts from fiction.

In August, the company introduced the AI tool Codex for automatically writing computer code.

In July, OpenAI released Triton, a Python-like programming language for developing neural networks.
