Apple Unveils AI Model for Screen Context Recognition

Researchers at Apple have developed a new AI system capable of understanding context, including references to what is displayed on a screen.

According to published documents, the system enables more natural interaction with voice assistants.

The system is named Reference Resolution As Language Modeling (ReALM) and uses large language models (LLMs) to address the challenge of reference resolution. This allows ReALM to achieve significant performance gains over existing methods.

“The ability to understand context, including references, is crucial for a conversational assistant. A key step in ensuring true hands-free use of voice assistants is the ability to ask questions about what is displayed on the screen,” stated the Apple research team.

To work with on-screen references, ReALM reconstructs the display by parsing on-screen objects and their locations, producing a textual representation that conveys the visual layout.
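The idea of turning a screen into layout-preserving text can be illustrated with a minimal sketch. This is not Apple's implementation: the `ScreenEntity` class, the `render_screen_as_text` function, the `line_margin` threshold, and the sample screen are all hypothetical, chosen only to show how objects with positions might be flattened into text. Objects at roughly the same height are placed on one line and ordered left to right.

```python
from dataclasses import dataclass


@dataclass
class ScreenEntity:
    """Hypothetical parsed on-screen object: its text and the centre of its box."""
    text: str
    x: float  # horizontal centre, in pixels
    y: float  # vertical centre, in pixels


def render_screen_as_text(entities, line_margin=10.0):
    """Return one text line per visual row, with objects tab-separated left to right.

    line_margin is an assumed tolerance: an object whose y is within this
    distance of the first object in the current row joins that row.
    """
    rows = []
    for entity in sorted(entities, key=lambda e: e.y):
        if rows and abs(entity.y - rows[-1][0].y) <= line_margin:
            rows[-1].append(entity)   # same visual row
        else:
            rows.append([entity])     # clearly lower: start a new row
    lines = []
    for row in rows:
        row.sort(key=lambda e: e.x)   # left-to-right within the row
        lines.append("\t".join(e.text for e in row))
    return "\n".join(lines)


# Made-up screen contents, for illustration only.
screen = [
    ScreenEntity("Call", 200, 52),
    ScreenEntity("Pharmacy", 40, 50),
    ScreenEntity("555-0100", 40, 90),
    ScreenEntity("Directions", 200, 91),
]
print(render_screen_as_text(screen))
```

The resulting string could then be placed in a language model prompt next to the user's request, so that a phrase such as "call that number" can be matched against the objects listed in the text.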

The AI model is specifically designed to enhance Siri’s capabilities by considering on-screen data and current tasks. It categorizes information into three types of entities: on-screen, conversational, and background.

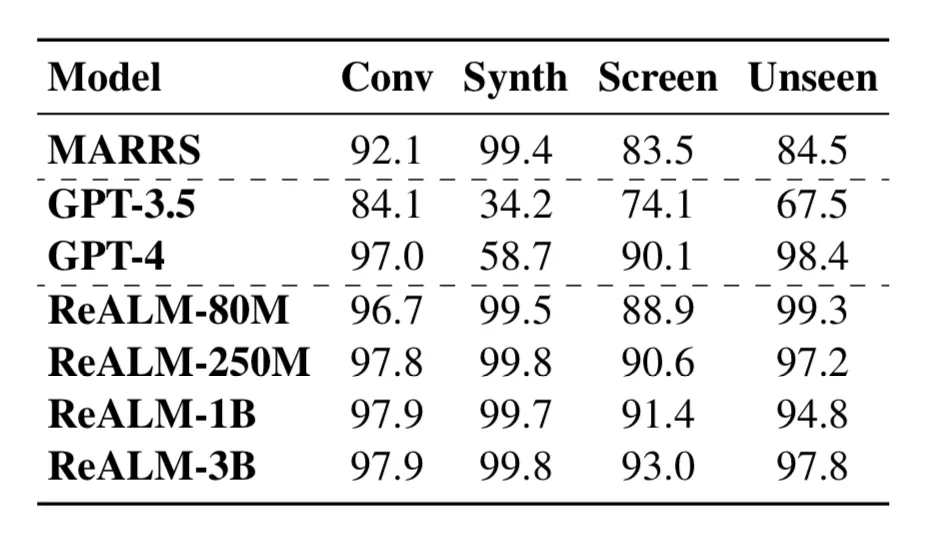

According to Apple’s research paper, the AI system matches or exceeds the capabilities of GPT-4 on this task. The performance of Apple’s smallest ReALM model is comparable to that of OpenAI’s model, while larger models significantly surpass it.

Back in February, Apple CEO Tim Cook revealed the corporation’s plans to utilize generative AI.
