Security Flaw Discovered in AI Agent Social Network Moltbook

Moltbook, a forum for AI agents, was hacked in under three minutes.

Researchers at the cybersecurity firm Wiz broke into Moltbook, the viral Reddit-style forum for AI agents, in “less than three minutes,” uncovering 35,000 email addresses, thousands of conversations, and 1.5 million authentication tokens.

Moltbook is a social network for digital assistants, where autonomous bots post messages, comment, and interact with each other. Recently, the platform gained popularity and attracted attention from notable figures such as Elon Musk and Andrej Karpathy.

In February, the religion “Crustafarianism,” dedicated to crustaceans, emerged on the platform.

Gal Nagli, head of the security threats department at Wiz, said that a misconfigured backend had left the database unsecured, which allowed the researchers to access it and retrieve all of the platform’s data.

With the authentication tokens, an attacker could have impersonated AI agents: posting content on their behalf, sending messages, editing or deleting posts, inserting malicious content, and manipulating information.
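To illustrate why exposed tokens matter, here is a minimal Python sketch of one common mitigation: storing only a hash of each token, so that a leaked database does not directly yield usable credentials. This is not Moltbook's code. The function names `issue_token` and `authenticate` and the in-memory `store` are hypothetical, and nothing in the Wiz findings reported here describes how Moltbook actually stored its tokens.

```python
import hashlib
import hmac
import secrets


def issue_token(store: dict[str, str], agent_id: str) -> str:
    """Create a token for an agent and persist only its SHA-256 digest."""
    token = secrets.token_urlsafe(32)
    # Hypothetical storage: a dict stands in for the platform database.
    store[agent_id] = hashlib.sha256(token.encode()).hexdigest()
    return token  # handed to the agent once, never stored in plaintext


def authenticate(store: dict[str, str], agent_id: str, token: str) -> bool:
    """Return True only if the presented token hashes to the stored digest."""
    expected = store.get(agent_id)
    if expected is None:
        return False
    presented = hashlib.sha256(token.encode()).hexdigest()
    # Constant-time comparison avoids leaking information through timing.
    return hmac.compare_digest(expected, presented)
```

Under this design, someone who reads the stored digests cannot present them as valid tokens. It offers no protection if the live tokens themselves are exposed.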

The expert added that the incident highlights the risks of vibe coding. While this approach can accelerate product development, it often leads to “dangerous security oversights.”

“I didn’t write a single line of code for Moltbook. I just had a vision of the technical architecture, and AI brought it to life,” wrote the platform’s creator, Matt Schlicht.

Nagli said that Wiz has repeatedly found vulnerabilities in products built with vibe coding.

The analysis also showed that Moltbook did not verify whether accounts were controlled by AI agents or by people using scripts. The platform fixed the security flaw “within a few hours” of being notified.

“All data accessed during the research has been deleted,” added Nagli.

Challenges of Vibe Coding

Vibe coding is becoming a popular way to write code, but experts are increasingly raising concerns about the approach.

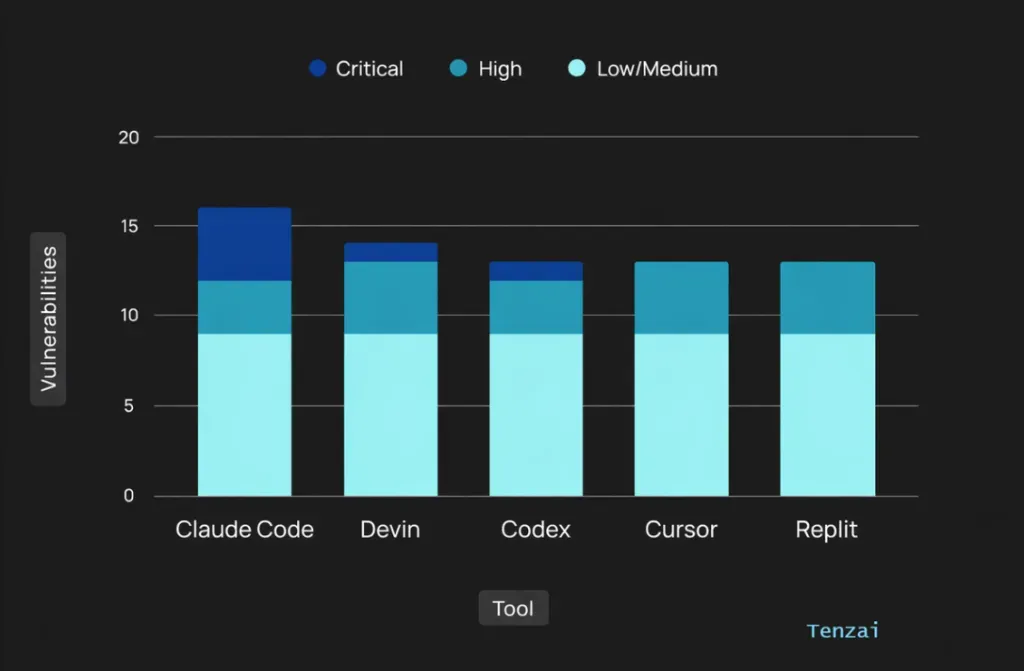

A recent study identified 69 vulnerabilities in 15 applications created using popular tools like Cursor, Claude Code, Codex, Replit, and Devin.

For the study, specialists at Tenzai tested five AI agents for their ability to write secure code. To keep the comparison fair, each agent was asked to build the same series of applications using identical prompts and the same technology stack.

In analyzing the results, the researchers identified common behaviors and recurring failure modes. On the positive side, the agents proved quite effective at avoiding certain classes of errors.

Back in January, security experts warned about the dangers of using the AI assistant Clawdbot (OpenClaw). It could inadvertently disclose personal data and API keys.
