What is a deepfake?

Key points

- A deepfake is a method of creating photos and videos with deep-learning algorithms. The technology allows a person’s face in existing media to be replaced with someone else’s.

- At least 85,000 AI-made forgeries have been found online. Experts say the tally doubles every six months.

- Beyond disinformation, intimidation and harassment, deepfakes are used in entertainment, synthetic-data generation and voice restoration.

What is a deepfake?

A deepfake is a technology for synthesising media in which a face in an existing photo or video is replaced with another person’s face. The forgeries are created using artificial intelligence, machine learning and neural networks.

The term combines “deep learning” and “fake”.

Why are deepfakes made?

Many deepfakes are pornographic. By late 2020, Sensity had found 85,000 forgeries online created with AI methods. Some 93% were pornographic, the vast majority featuring the faces of famous women.

New methods let unskilled users make deepfakes from just a few photos. Experts say this content doubles every six months. Fake videos are likely to spread beyond celebrities to fuel revenge porn.
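To see what "doubles every six months" implies, the claim can be written as simple exponential growth. The sketch below is only an illustration: it assumes the doubling rate stays constant, starts from the late-2020 figure of 85,000 cited above, and uses a hypothetical helper named `projected_count`. It is not a forecast.

```python
def projected_count(initial: int, months: float, doubling_months: float = 6.0) -> float:
    """Exponential growth: the count doubles once every `doubling_months`."""
    return initial * 2 ** (months / doubling_months)


BASELINE = 85_000  # late-2020 tally cited in the text

# Assumption: the six-month doubling rate never changes.
for months in (0, 6, 12, 24):
    print(f"{months:>2} months: ~{projected_count(BASELINE, months):,.0f}")
```

Under that assumption, four doublings in two years would turn 85,000 into about 1.36 million.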

Deepfakes are also used for information attacks, parody and satire.

In 2018 American director Jordan Peele and BuzzFeed published a purported address by former US president Barack Obama in which he called Donald Trump “a total and complete dipshit”. The clip was made with FakeApp and Adobe After Effects. The director and journalists wanted to show how fake news might look.

In 2022, after Russia’s full-scale invasion of Ukraine, a fake video of President Volodymyr Zelenskyy circulated on social media in which he appeared to call on Ukrainians to surrender. Users quickly spotted the forgery, and Zelenskyy recorded a rebuttal.

In May of the same year, scammers spread a deepfake of Elon Musk in which he “urges people to invest” in an obvious scam. The YouTube channel that posted it had over 100,000 subscribers, and before the account was deleted the video had more than 90,000 views. How many people fell for the scam is unknown.

Many deepfake apps exist purely for entertainment. In early 2020, Reface became widely known for using deepfake technology to create short videos that overlay virtually any face onto a wide range of videos and GIFs.

What can be faked?

Deepfake technology can produce not only convincing videos but also entirely fabricated photos. In 2019 a certain Maisy Kinsley set up LinkedIn and Twitter profiles claiming to be a Bloomberg journalist. The “journalist” contacted Tesla employees to fish for information.

It later emerged this was a deepfake. The social profiles contained no convincing evidence linking her to the publication, and the profile photo was clearly generated by AI.

In 2021 ForkLog HUB ran an experiment, creating a virtual character, N. G. Adamchuk, who “wrote” musings about the cryptocurrency market for the platform. The texts were generated with the GPT-2 large language model. The avatar was created with This Person Does Not Exist.

Audio can also be forged to create “voice clones” of public figures. In January 2020, fraudsters in the UAE faked the voice of a company executive and convinced a bank employee to transfer $35m to their accounts.

A similar case occurred in 2019 with a British energy company. Scammers stole about $243,000 by impersonating the firm’s chief executive with a fake voice.

How are deepfakes made?

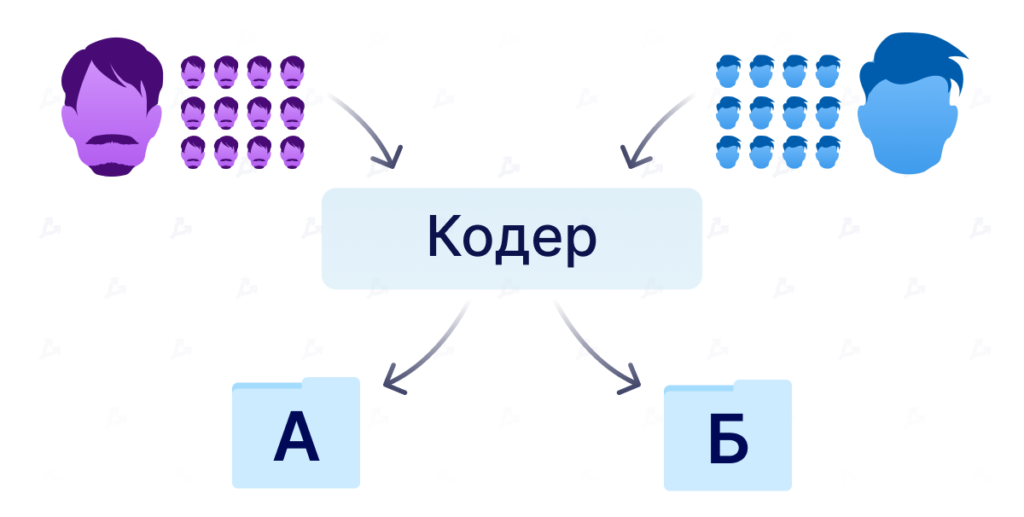

A face swap starts with a large set of images of two people’s faces. An encoder, a neural network, learns the features the two faces have in common and compresses each image into a compact representation.

A decoder is then trained to reconstruct a face from that compressed representation. Each person gets a separate decoder. To swap faces, the compressed data are fed to the “wrong” decoder.

For example, compressed images of person A are fed into the decoder trained on person B. That decoder reconstructs B’s face with A’s facial expression.

For high-quality results in video, the algorithm must process every frame this way.
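The data flow described above can be sketched in a few lines. This is a toy under loud assumptions: real systems train neural networks on pixels, whereas here the encoder and decoders are hand-written stand-ins, and `Face`, `shared_encoder`, `make_decoder`, `swap_face` and `swap_video` are hypothetical names chosen for illustration. The point is only to show one shared encoder, one decoder per person, and a swap done frame by frame through the "wrong" decoder.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Latent = Tuple[float, float]  # compressed representation: (mouth_open, brow_raise)


@dataclass(frozen=True)
class Face:
    identity: str      # whose face this is
    mouth_open: float  # expression features, 0.0 to 1.0
    brow_raise: float


def shared_encoder(face: Face) -> Latent:
    """Stand-in for the trained encoder: keeps the expression, drops the identity."""
    return (face.mouth_open, face.brow_raise)


def make_decoder(identity: str) -> Callable[[Latent], Face]:
    """Stand-in for a decoder trained on one person: it always renders that person."""
    def decode(latent: Latent) -> Face:
        mouth_open, brow_raise = latent
        return Face(identity, mouth_open, brow_raise)
    return decode


# One decoder per person, both fed by the same encoder.
decoders: Dict[str, Callable[[Latent], Face]] = {
    "A": make_decoder("A"),
    "B": make_decoder("B"),
}


def swap_face(face: Face, target: str) -> Face:
    """Feed the compressed face to the other person's decoder."""
    return decoders[target](shared_encoder(face))


def swap_video(frames: List[Face], target: str) -> List[Face]:
    """A video is converted one frame at a time."""
    return [swap_face(frame, target) for frame in frames]


video_of_a = [Face("A", 0.1, 0.0), Face("A", 0.8, 0.3), Face("A", 0.4, 0.9)]
for frame in swap_video(video_of_a, target="B"):
    print(frame)
```

Each output frame carries B's identity with A's expression values, which is the effect the "wrong decoder" trick is meant to achieve.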

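Another way to create deepfakes is with generative adversarial networks (GANs), in which a generator network produces images while a second network, the discriminator, tries to tell them apart from real ones. This approach is used by services like This Person Does Not Exist.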
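The adversarial idea behind a GAN can be shown on a deliberately tiny problem. The sketch below is an assumption-heavy toy, not how any image service works: the "real data" are single numbers drawn around 4.0, the generator is the line `a * z + b`, the discriminator is a one-input logistic classifier, and `train_toy_gan` is a hypothetical name. Real image GANs use deep networks, but the alternating update has the same shape.

```python
import math
import random
from typing import Tuple


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(
    steps: int = 2000, lr: float = 0.01, seed: int = 0
) -> Tuple[Tuple[float, float], Tuple[float, float]]:
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: x_fake = a * z + b
    w, c = 0.0, 0.0  # discriminator: D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        x_real = rng.gauss(4.0, 1.0)  # toy "real data", centred on 4
        z = rng.gauss(0.0, 1.0)       # random noise fed to the generator
        x_fake = a * z + b

        # Discriminator step: push D(x_real) up and D(x_fake) down.
        d_real = sigmoid(w * x_real + c)
        d_fake = sigmoid(w * x_fake + c)
        grad_w = -(1.0 - d_real) * x_real + d_fake * x_fake
        grad_c = -(1.0 - d_real) + d_fake
        w -= lr * grad_w
        c -= lr * grad_c

        # Generator step: adjust a and b so the updated D rates the fake as real.
        d_fake = sigmoid(w * x_fake + c)
        grad_x = -(1.0 - d_fake) * w  # gradient of -log D(x_fake) w.r.t. x_fake
        a -= lr * grad_x * z
        b -= lr * grad_x
    return (a, b), (w, c)


(gen_a, gen_b), (disc_w, disc_c) = train_toy_gan()
print(f"generator: x = {gen_a:.2f} * z + {gen_b:.2f}")
print(f"discriminator: D(x) = sigmoid({disc_w:.2f} * x + {disc_c:.2f})")
```

The generator never sees the real samples directly; it only receives gradients through the discriminator. In a toy like this its offset `b` tends to drift toward the real data, though GAN training is known to oscillate rather than settle cleanly.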

Who makes deepfakes?

Deepfakes are created by academic and commercial researchers, machine-learning engineers, hobbyists, visual-effects studios and directors. Thanks to popular apps like Reface or FaceApp, anyone with a smartphone can make a fake photo or video.

Governments can use the technology too, for instance as part of online strategies to discredit and undermine extremist groups or to contact target individuals.

In 2019 journalists found a LinkedIn profile for a certain Katie Jones that turned out to be a deepfake. America’s National Counterintelligence and Security Center said foreign spies regularly use fake social-media profiles to monitor US targets. The agency accused China of conducting “mass” espionage via LinkedIn.

In 2022 South Korean engineers created a deepfake of presidential candidate Yoon Suk-yeol to attract young voters ahead of the 9 March 2022 election.

What technologies are used to make deepfakes?

Most forgeries are created on high-performance desktop computers with powerful graphics cards or in the cloud. Experience is also required, not least to polish finished videos and remove visual defects.

However, many tools now help people make deepfakes both in the cloud and on smartphones. In addition to Reface and FaceApp, there is Zao, which overlays users’ faces onto film and TV characters.

How can you spot a deepfake?

Most fake photos and videos are low quality. Telltale signs include unblinking eyes, poor lip–speech synchronisation and blotchy skin tones. Along the edges of transplanted features there may be flicker and pixelation. Fine details such as hair are especially hard to render well.

Poorly transferred jewellery and teeth can also betray a fake. Watch for inconsistent lighting and reflections on the iris.
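One of these signs, unblinking eyes, lends itself to a rough automated check. The sketch below assumes that some face-tracking tool (not shown here) has already produced one eye-openness score per frame, from 0.0 for closed to 1.0 for wide open. The function names and both thresholds are hypothetical illustrations, not calibrated values, and a low blink rate is at most a hint, not proof of forgery.

```python
from typing import Sequence


def count_blinks(eye_openness: Sequence[float], closed_below: float = 0.2) -> int:
    """Count runs of consecutive frames in which the eye is closed."""
    blinks = 0
    was_closed = False
    for value in eye_openness:
        is_closed = value < closed_below
        if is_closed and not was_closed:
            blinks += 1  # a new run of closed frames starts here
        was_closed = is_closed
    return blinks


def blinks_per_minute(
    eye_openness: Sequence[float], fps: float, closed_below: float = 0.2
) -> float:
    """Blink rate for a clip sampled at `fps` frames per second."""
    if not eye_openness or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    minutes = len(eye_openness) / fps / 60.0
    return count_blinks(eye_openness, closed_below) / minutes


def looks_unblinking(
    eye_openness: Sequence[float], fps: float, min_blinks_per_minute: float = 2.0
) -> bool:
    """Flag clips whose blink rate falls below an assumed minimum."""
    return blinks_per_minute(eye_openness, fps) < min_blinks_per_minute


# Two short blinks in about three seconds of footage versus a ten-second stare.
natural = [1.0] * 30 + [0.05] * 3 + [1.0] * 30 + [0.1] * 3 + [1.0] * 30
stare = [1.0] * 300
print(looks_unblinking(natural, fps=30), looks_unblinking(stare, fps=30))
```

A practical detector would combine several such signals, since newer forgeries can reproduce blinking.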

Big tech firms are fighting deepfakes. In April 2022 Adobe, Microsoft, Intel, Twitter, Sony, Nikon, the BBC and ARM formed the C2PA alliance to detect fake photos and videos online.

In 2020, ahead of the US elections, Facebook banned deepfake videos that could mislead users.

In May 2022 Google restricted the training of deepfake models in its Colab cloud environment.

What are the risks of deepfakes?

Beyond disinformation, harassment, intimidation and humiliation, deepfakes can undermine public trust in specific events.

According to Lilian Edwards, a professor and internet-law expert at Newcastle University, the problem is less the fakes themselves than the way they make it easier to deny real facts.

As the technology spreads, deepfakes may threaten the justice system, where fabricated events can be passed off as real.

They also pose risks to personal security. Deepfakes can already imitate biometric data and fool face-, voice- or gait-recognition systems.

How can deepfakes be useful?

Despite the risks, deepfakes can be useful. The technology is widely used for entertainment. For example, London startup Flawless developed AI to synchronise actors’ lips with audio tracks when dubbing films into other languages.

In July 2021 the makers of a documentary about Anthony Bourdain, who died in 2018, used a deepfake to voice his quotes.

The technology can also help people regain a voice lost to illness.

Deepfakes are also used to create synthetic datasets, sparing engineers from collecting photos of real people and obtaining permissions for their use.


